Introduction

This is the fifth installment in a series of blog posts about deploying OpenShift in multi-cluster configurations.

In the first two posts (part 1 and part 2), we explored how to create a network tunnel between multiple clusters.

In the third post, we demonstrated how to deploy Istio multicluster and how to deploy the popular bookinfo application in a mesh spanning multiple clusters.

In the fourth installment, we extended the setup by adding Federation V2 to help propagate federated resources across multiple clusters.

In each of the previous implementations, we did not address how to distribute incoming traffic across the federated clusters.

A previously published article described how to build a global load balancer to balance traffic across multiple OpenShift clusters.

In this post, we will implement that architecture, building upon the concepts from the last four posts. We will also see how it is possible to host the global load balancer in the federated clusters themselves, achieving a self-hosted global load balancer.

External DNS

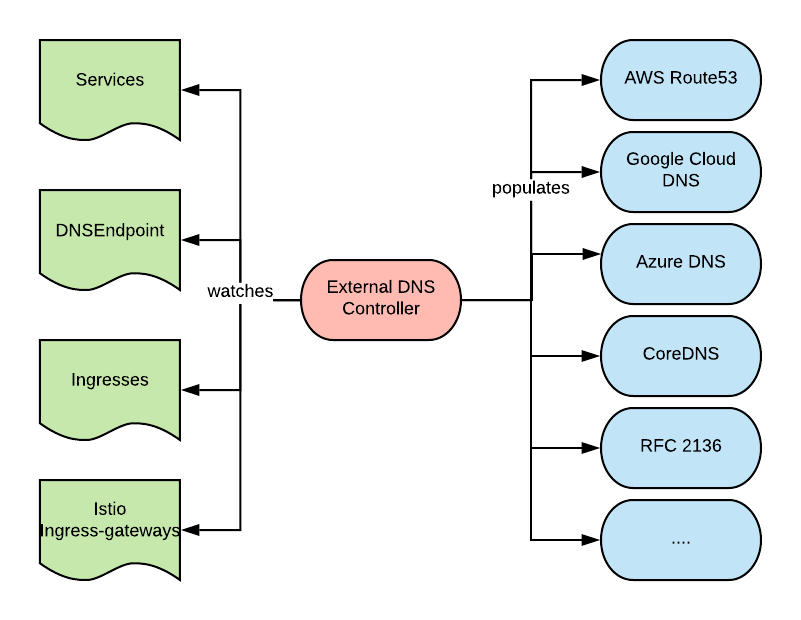

External-DNS is an open source project in the Kubernetes ecosystem that aims to automate DNS configuration based on the status of Kubernetes resources.

External-DNS can observe LoadBalancer services, Ingresses, and Custom Resources (CRs), and deduce appropriate DNS records from them. External-DNS then feeds those records to a configured DNS server. The architecture is depicted in the diagram above.

External-DNS supports a number of out-of-the-box DNS server implementations (the current list is here), but also has a plugin system which allows for a relatively easy introduction of custom DNS server implementations.
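
To make the deduction step concrete, here is a minimal Python sketch of the kind of mapping External-DNS performs for LoadBalancer services. It is not External-DNS code. The Service objects are plain dicts that mimic the Kubernetes API shape, and the `Endpoint` dataclass and `endpoints_from_services` function are hypothetical names. The `external-dns.alpha.kubernetes.io/hostname` annotation is the one External-DNS uses to let a service request a DNS name.

```python
from dataclasses import dataclass, field

# Annotation External-DNS reads to learn which name a Service wants.
HOSTNAME_ANNOTATION = "external-dns.alpha.kubernetes.io/hostname"


@dataclass
class Endpoint:
    """A simplified DNS record: one name, one record type, several targets."""
    dns_name: str
    record_type: str
    targets: list = field(default_factory=list)


def endpoints_from_services(services):
    """Deduce A records from LoadBalancer services that request a hostname."""
    records = []
    for svc in services:
        if svc.get("spec", {}).get("type") != "LoadBalancer":
            continue  # only LoadBalancer services obtain an external IP
        value = svc.get("metadata", {}).get("annotations", {}).get(HOSTNAME_ANNOTATION)
        if not value:
            continue  # the service did not ask for a DNS name
        ingress = svc.get("status", {}).get("loadBalancer", {}).get("ingress", [])
        ips = [entry["ip"] for entry in ingress if "ip" in entry]
        if not ips:
            continue  # no record until the load balancer has been provisioned
        # The annotation may list several comma-separated names.
        for name in (n.strip() for n in value.split(",")):
            if name:
                records.append(Endpoint(name, "A", list(ips)))
    return records
```

The sketch watches a single list of services, which mirrors the single-cluster view of one External-DNS controller.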

When creating a global load balancer to balance traffic across multiple OpenShift clusters, we need to create DNS records based on the status of all of those clusters. The External-DNS controller watches only one cluster, so it cannot be used directly to feed a global load balancer.

Integrating Federation V2 and External-DNS

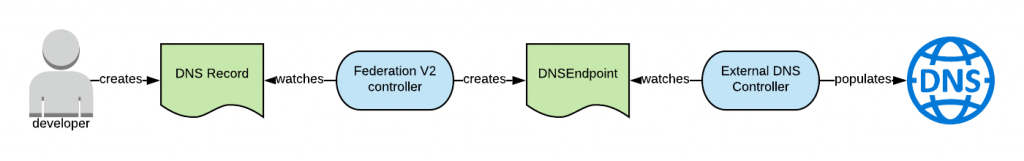

To feed a global load balancer with data from External-DNS, we need to integrate External-DNS with Federation V2. The diagram above shows the architecture of this integration.

A developer declares the intention to create a name for a set of endpoints by creating a DNSRecord CR. There are two types of DNSRecord CRs: ServiceDNSRecord and IngressDNSRecord. They represent, respectively, the intention to obtain and register an FQDN for a set of services (only services of type LoadBalancer are appropriate here) or for a set of Ingresses.

The Federation V2 control plane observes this resource and creates a DNSEndpoint CR. This CR associates the desired Fully Qualified Domain Name (FQDN) with the actual IPs, which belong either to the set of services or to the set of Ingresses currently present in the federated clusters.
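
The heart of this step can be sketched as a merge. The same federated service obtains an external IP in each member cluster, and those IPs are collected into a single DNSEndpoint whose record lists all of them. The dict below follows the `externaldns.k8s.io/v1alpha1` DNSEndpoint shape (`spec.endpoints[]` with `dnsName`, `recordType`, `recordTTL`, `targets`). The function name, its input format, and the default TTL are assumptions made for illustration. This is not the Federation V2 controller's code, which handles more cases.

```python
def build_dns_endpoint(name, namespace, fqdn, ips_by_cluster, ttl=300):
    """Merge per-cluster LoadBalancer IPs into one DNSEndpoint manifest (a dict).

    ips_by_cluster is a hypothetical input: {cluster_name: [ip, ...]}.
    """
    # Deduplicate, then sort (lexicographically) so the generated manifest
    # stays stable across reconciliations.
    targets = sorted({ip for ips in ips_by_cluster.values() for ip in ips})
    return {
        "apiVersion": "externaldns.k8s.io/v1alpha1",
        "kind": "DNSEndpoint",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "endpoints": [
                {
                    "dnsName": fqdn,
                    "recordType": "A",
                    "recordTTL": ttl,
                    "targets": targets,
                }
            ]
        },
    }
```

A single record with several targets is what lets the DNS server answer one query with the IPs of every cluster.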

The External-DNS controller watches DNSEndpoint resources and, based on the information they contain, populates the configured DNS server.

In this architecture, the global load balancer could be any DNS server supported by External-DNS. In the cloud, one would probably use the DNS service of the cloud provider (Route 53 on AWS, Cloud DNS on Google Cloud, etc.). On premises, several options exist. Notably, External-DNS supports the DNS dynamic update protocol (RFC 2136), which should allow integration with the major DNS products.

A global load balancer should be highly available and also capable of transparently recovering from disasters. Therefore, it’s a good practice to build global DNS solutions where there are several DNS servers clustered together and spread across multiple regions (this is what cloud providers do behind the scenes).

If one is building a federation of OpenShift clusters across geographies, then one already has the ability to spread workloads across multiple geographic locations. In this case, it may make sense to deploy the global load balancer in the clusters it will be responsible for, i.e. to self-host the global load balancer.

A Self-Hosted Global Load Balancer

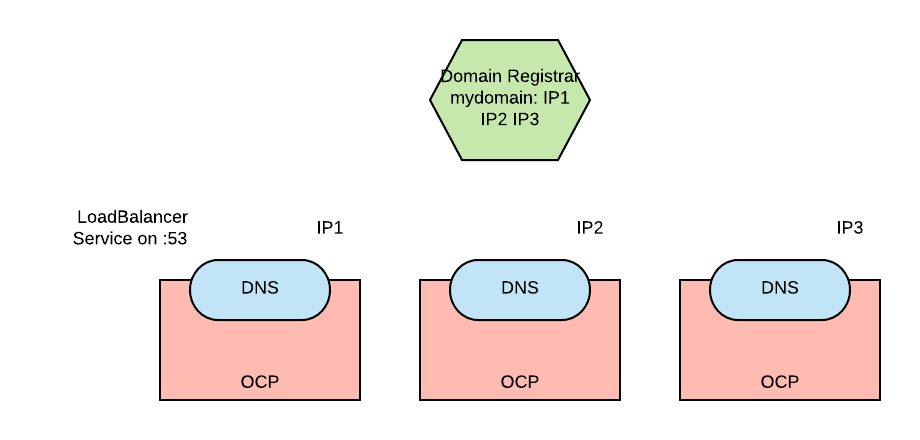

In a federation of three OpenShift clusters, a self-hosted global load balancer would look like the diagram above.

DNS servers are deployed on each of the clusters and exposed externally through a LoadBalancer service on port UDP/53. When it is created, a LoadBalancer service acquires an external IP, which is discoverable in the status section of the Service resource. Records associating these IPs with our domain must then be created with the domain name registrar (where the domain was purchased or is managed). This allows DNS queries for the domain to be directed to one of our DNS servers.
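
One common way to express those records is a delegation. The following example assumes the load balancer is authoritative for a subdomain (the hypothetical `gslb.example.com`) and that each cluster's CoreDNS service gets a nameserver name such as `ns1.gslb.example.com`. Under those assumptions, the delegation consists of NS records plus glue A records. This Python sketch prints them in standard zone-file (RFC 1035 master file) syntax. The naming scheme and TTL are illustrative, and how the records are entered depends on the registrar or parent DNS provider.

```python
def delegation_records(zone, lb_ips, ttl=3600):
    """Return NS and glue A records delegating `zone` to one nameserver per cluster.

    lb_ips are the external IPs of each cluster's DNS LoadBalancer service.
    The nsN.<zone> naming scheme is a hypothetical convention.
    """
    zone = zone.rstrip(".") + "."  # zone files expect fully qualified names
    nameservers = [f"ns{i}.{zone}" for i in range(1, len(lb_ips) + 1)]
    # NS records: tell resolvers which servers answer for the zone.
    lines = [f"{zone} {ttl} IN NS {ns}" for ns in nameservers]
    # Glue A records: needed because the nameserver names live inside the zone.
    lines += [f"{ns} {ttl} IN A {ip}" for ns, ip in zip(nameservers, lb_ips)]
    return lines
```

Listing one nameserver per cluster means resolvers can still reach the zone when an entire cluster is down.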

CoreDNS is a DNS server implementation that is relatively easy to deploy in OpenShift, and it is well integrated with External-DNS. For these reasons it is well suited to implement our self-hosted global load balancer.

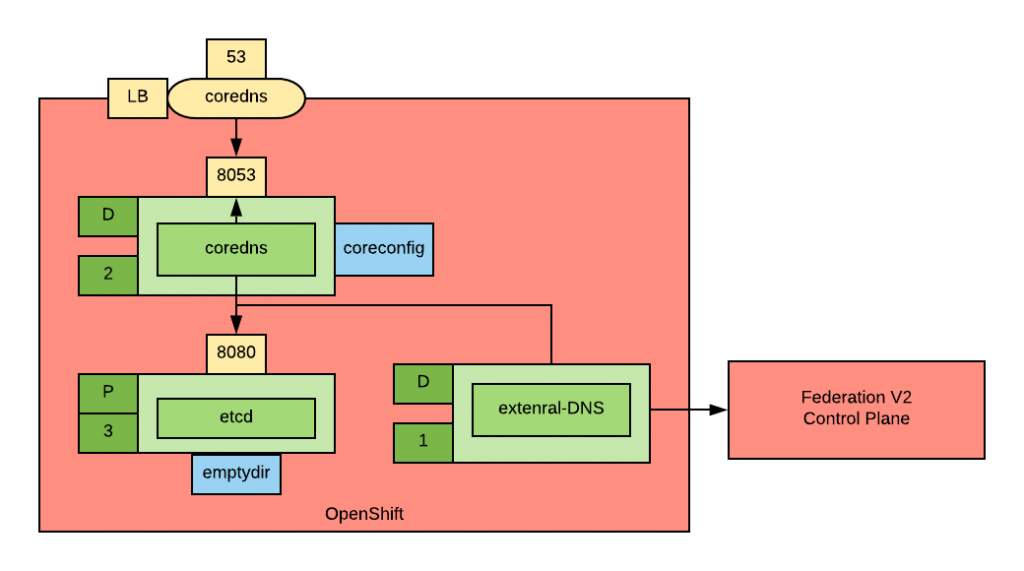

Each cluster hosts the deployment illustrated in the diagram above (in KDL notation).

In this diagram, two CoreDNS pods (for high availability) are configured to use etcd as their source of DNS records. The etcd cluster has three instances (to maintain quorum) and is populated by External-DNS.
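
CoreDNS's etcd plugin reads records stored in the SkyDNS format. A DNS name maps to an etcd key by reversing its labels under a path prefix (`/skydns` by default), and each value is a small JSON document whose `host` field holds the IP. The following Python sketch shows that mapping for a record with several targets, with each target placed under its own child key. The child-key names (`x1`, `x2`, ...) and the function names are assumptions for illustration. External-DNS chooses its own identifiers when it writes to etcd.

```python
import json


def skydns_key(fqdn, prefix="/skydns"):
    """Map a DNS name to its etcd key by reversing the labels."""
    labels = fqdn.rstrip(".").split(".")
    return prefix + "/" + "/".join(reversed(labels))


def etcd_entries(fqdn, targets, ttl=300, prefix="/skydns"):
    """One etcd entry per target, each stored under its own child key of the name.

    The x1, x2, ... child-key names are hypothetical.
    """
    base = skydns_key(fqdn, prefix)
    return {
        f"{base}/x{i}": json.dumps({"host": ip, "ttl": ttl})
        for i, ip in enumerate(targets, start=1)
    }
```

A query for the name can then return every target stored beneath its key as a separate A record.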

Notice that External-DNS connects to the API server (master API) of the cluster that hosts the Federation V2 control plane. This may or may not be the same cluster.

Installation

An Ansible playbook to install the solution discussed above is available here.

With an Ansible inventory similar (or identical) to the one used for the previous articles in this series, you can run the playbook above. Instructions for testing the self-hosted global load balancer are also provided.

Note: this solution is a personal effort and it’s not currently supported by Red Hat.

Load Balancing Strategies

CoreDNS can implement a round robin load balancing strategy if the loadbalance plugin is configured.

Currently, the loadbalance plugin supports only round robin as a load balancing policy. With this policy, the order of the returned IPs is randomized.

This approach has some limitations:

- Round robin does not ensure efficient load balancing, because there is no guarantee that the hierarchy of DNS servers will preserve the order of the returned IPs, nor that the client will always use the first of the returned IPs.

- If a back end is not available, its IP will continue to appear in the returned list.
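
The following minimal Python sketch illustrates the round robin policy described above. It shuffles the targets on every query, and a helper counts which IP lands first across many simulated queries. It illustrates a randomizing policy and is not CoreDNS code. `shuffle_answers` and `first_answer_counts` are hypothetical names. The resulting spread is only roughly even when every client uses the first answer and no intermediate resolver caches or reorders the response, which is exactly the first limitation listed above.

```python
import random
from collections import Counter


def shuffle_answers(records, rng=random):
    """Return the A-record targets in a random order for one query."""
    answers = list(records)  # copy: never mutate the authoritative record set
    rng.shuffle(answers)
    return answers


def first_answer_counts(records, queries, seed=0):
    """Simulate many queries and count which target is returned first."""
    rng = random.Random(seed)  # seeded for reproducible simulations
    return Counter(shuffle_answers(records, rng)[0] for _ in range(queries))
```

Each target appears first in about one third of the simulated queries for three targets. The same target keeps appearing, however, even when its back end is down, because nothing in the policy checks health.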

The second limitation is mitigated by the fact that Federation V2 removes from the DNSEndpoint CR the IP of any service that has no underlying pods. Even so, round robin is not always the preferred load balancing method.
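
The following minimal Python sketch shows that filtering step. It assumes a hypothetical per-cluster status map that records each cluster's LoadBalancer IP and its number of ready pods. Federation V2 derives equivalent information from the member clusters, but the dict shape and the function name here are invented for illustration.

```python
def healthy_targets(status_by_cluster):
    """Keep only the IPs of clusters that report at least one ready pod.

    status_by_cluster is a hypothetical shape:
    {cluster_name: {"ip": str, "ready_pods": int}}
    """
    return sorted(
        s["ip"]
        for s in status_by_cluster.values()
        if s.get("ip") and s.get("ready_pods", 0) > 0
    )
```

Filtering at this stage means a DNS server that only shuffles its records never hands out an IP known to have nothing behind it. It cannot help, however, with responses that resolvers have already cached.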

Another very common load balancing mechanism is geo load balancing. With this policy, the DNS solution returns the endpoint closest to the IP of the caller, where distance is defined by some network metric.
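
The following Python sketch illustrates geo load balancing. It is not a feature of the CoreDNS-based solution in this article. The example assumes the caller's location has already been resolved from its IP (for example, with a GeoIP database, which is not shown). It uses great-circle (haversine) distance as the metric. A real deployment might measure latency instead. The endpoint coordinates and function names are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(a, b):
    """Great-circle distance in km between two (latitude, longitude) points in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat, dlon = lat2 - lat1, lon2 - lon1
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def closest_endpoint(client_location, endpoints):
    """Pick the endpoint IP whose (lat, lon) location is nearest the client.

    endpoints is a hypothetical mapping {ip: (lat, lon)}, one entry per cluster.
    """
    return min(endpoints, key=lambda ip: haversine_km(client_location, endpoints[ip]))
```

Unlike round robin, this policy returns a single answer per caller, so the choice of metric (geographic distance, measured latency, or something else) directly determines which cluster serves each user.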

Geo load balancing and other load balancing policies are not currently available in the CoreDNS-based solution described in this article. Given that CoreDNS is built on a plugin architecture, it’s conceivable that these features will be added as plugins in the future.

Conclusions

In this post, we have demonstrated how to load balance traffic to an application deployed on multiple federated clusters. We have also illustrated how it is conceptually possible to self-host the global load balancer in the same clusters that it manages. While the technology is not yet ready for prime time, I hope that the described architecture will spark new ideas and foster new contributions, so that solutions of this kind can improve how geographically distributed architectures are built with OpenShift.

About the author

Raffaele is a full-stack enterprise architect with 20+ years of experience. Raffaele started his career in Italy as a Java Architect then gradually moved to Integration Architect and then Enterprise Architect. Later he moved to the United States to eventually become an OpenShift Architect for Red Hat consulting services, acquiring, in the process, knowledge of the infrastructure side of IT.

Currently, Raffaele holds a consulting position as a cross-portfolio application architect with a focus on OpenShift. For most of his career, Raffaele has worked with large financial institutions, which allowed him to acquire an understanding of enterprise processes and of the security and compliance requirements of large enterprise customers.

Raffaele has become part of the CNCF TAG Storage and contributed to the Cloud Native Disaster Recovery whitepaper.

Recently, Raffaele has been focusing on how to improve the developer experience by implementing internal developer platforms (IDPs).
