Red Hat blog

In this post we'll show you how to run a MariaDB Galera Cluster on top of the OpenShift Container Platform. To make this possible we use a feature called PetSets, which is currently a Tech Preview in OpenShift. For those who don't know it, MariaDB Galera Cluster is a multi-master solution for MariaDB/MySQL that provides easy-to-use high availability, allowing zero-downtime maintenance and scalability.

In this blog post, engineers from Adfinis SyGroup present a solution for operating a MariaDB Galera Cluster on OpenShift, Red Hat's enterprise-ready Kubernetes for developers, or on any other compatible Kubernetes-based platform. It allows cloud-native applications running in Kubernetes and the databases they require to run on the same infrastructure and to be managed with the same tools.

The functionality is based on a Kubernetes feature called PetSets(1), an API designed for stateful applications and services.

What are PetSets?

PetSets is an API designed for running stateful applications and services on top of OpenShift/Kubernetes. It was introduced as an Alpha feature in Kubernetes v1.3 and became available in OpenShift starting with v3.3. Because of the architecture of Kubernetes, running stateful services and applications on top of it has not been easy until now. First ideas for so-called "nominal services" were proposed early on but were not realized because of other priorities.

The addition of PetSets provides a solution that is tailored to the requirements of stateful applications and services. Pods in a PetSet receive a unique identity and numeric index (e.g. app-0, app-1, ...) which is consistent over the lifetime of the pod. Additionally, Persistent Volumes stay attached to the pod, even if the pod is migrated to another host. Because a Service needs to be assigned to each PetSet, it is possible to query this service to receive information about the pods in the PetSet. This makes it possible, for example, to automate the bootstrapping of a cluster or to change the configuration of the cluster at runtime when its status changes (scale up, scale down, maintenance or outage of a host, etc.).
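To make the idea of stable identities more concrete, here is a minimal Python sketch that derives the DNS names of the pods in a PetSet from its name and index. The naming pattern is taken from the example configuration shown later in this post. The function name pet_hostnames and the default cluster domain cluster.local are assumptions made for illustration, and the sketch is not part of our repository.

```python
def pet_hostnames(petset, service, namespace, replicas, cluster_domain="cluster.local"):
    """Return the stable DNS names of the pods in a PetSet, ordered by index.

    The pattern <petset>-<index>.<service>.<namespace>.svc.<cluster_domain>
    mirrors the hostnames in the example configuration of this post.
    """
    return [
        f"{petset}-{index}.{service}.{namespace}.svc.{cluster_domain}"
        for index in range(replicas)
    ]


if __name__ == "__main__":
    # Hypothetical values matching the example configuration in this post.
    for host in pet_hostnames("mariadb", "galera", "lf2", 3):
        print(host)
```

Because each name depends only on the PetSet name and the index, a pod keeps the same hostname when it is rescheduled to another host.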

How can MariaDB Galera Cluster be run on Kubernetes?

To operate a MariaDB Galera Cluster on top of Kubernetes, it is especially important to implement the bootstrap of the cluster and to construct the configuration based on the current pods in the PetSet. In our case these tasks are handled by two init containers. The galera-init image packages the tools needed to perform the bootstrap, and a second container runs the peer-finder binary, which queries the SRV record of the assigned service and generates the configuration for the MariaDB Galera Cluster based on the current members of the PetSet.

When the first pet starts, wsrep_cluster_address=gcomm:// is used and the pod automatically bootstraps the cluster. Pets started afterwards add the hostnames they receive from the SRV record to wsrep_cluster_address and automatically join the cluster. Below is an example of what the configuration looks like after the second pet has started:

wsrep_cluster_address=gcomm://mariadb-0.galera.lf2.svc.cluster.local,mariadb-1.galera.lf2.svc.cluster.local

wsrep_cluster_name=mariadb

wsrep_node_address=mariadb-1.galera.lf2.svc.cluster.local
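The logic described above can be sketched in a few lines of Python. This is not the code that runs in our init containers. It is a simplified illustration that assumes the peer list has already been obtained from the SRV record, and the function names galera_settings and render are hypothetical.

```python
def galera_settings(own_hostname, peers, cluster_name="mariadb"):
    """Build the wsrep settings for one pet.

    peers is the list of hostnames returned for the service (assumed to be
    already resolved). If no other pet exists yet, an empty gcomm:// address
    is used so that this pet bootstraps the cluster.
    """
    others = [peer for peer in peers if peer != own_hostname]
    if not others:
        address = "gcomm://"
    else:
        # Include this pet as well, as in the example configuration above.
        members = sorted(set(peers) | {own_hostname})
        address = "gcomm://" + ",".join(members)
    return {
        "wsrep_cluster_address": address,
        "wsrep_cluster_name": cluster_name,
        "wsrep_node_address": own_hostname,
    }


def render(settings):
    """Render the settings as key=value lines."""
    return "\n".join(f"{key}={value}" for key, value in settings.items())


if __name__ == "__main__":
    peers = [
        "mariadb-0.galera.lf2.svc.cluster.local",
        "mariadb-1.galera.lf2.svc.cluster.local",
    ]
    print(render(galera_settings("mariadb-1.galera.lf2.svc.cluster.local", peers)))
```

Treating "no other peers" as the bootstrap case keeps the sketch short. A real implementation also has to deal with situations such as a full cluster restart, which are out of scope here.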

Try for yourself

If you want to try running MariaDB Galera Cluster on your own OpenShift infrastructure, you'll first need to clone our openshift-mariadb-galera repository on GitHub:

git clone https://github.com/adfinis-sygroup/openshift-mariadb-galera.git

Enter the cloned repository and you'll see all the YAML definitions used for deploying MariaDB Galera Cluster on OpenShift. First you'll need to create the Persistent Volumes that store the persistent data of the pods in the PetSet. To do this, run the following command as an OpenShift cluster admin:

oc create -f galera-pv-nfs.yml -n yourproject

After that you can enter your project and start the PetSet using:

oc create -f galera.yml

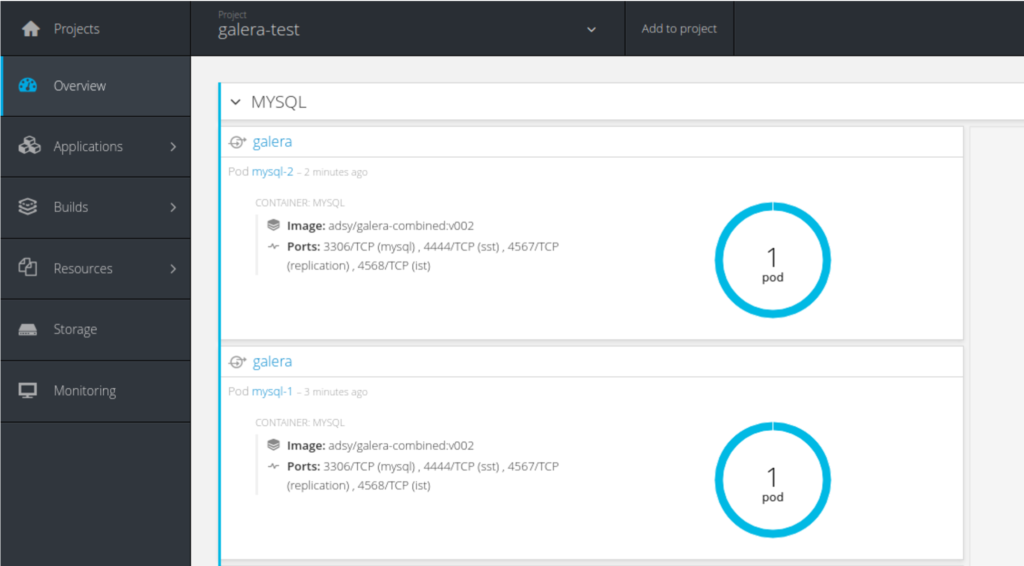

Now you should see the pods mysql-0, mysql-1 and mysql-2 appear one after another, both in the WebUI and in the output of oc get pod -w. In the logs of mysql-0 you'll see the other nodes join the cluster one at a time. Here's an excerpt from the logs when mysql-2 joined the cluster:

2016-12-14 10:53:56 139884039759616 [Note] WSREP: Member 2.0 (mysql-2) requested state transfer from '*any*'. Selected 0.0 (mysql-0)(SYNCED) as donor.

2016-12-14 10:53:56 139884039759616 [Note] WSREP: Shifting SYNCED -> DONOR/DESYNCED (TO: 0)

2016-12-14 10:53:56 139883309950720 [Note] WSREP: Running: 'wsrep_sst_xtrabackup-v2 --role 'donor' --address 'mysql-2.galera.default.svc.cluster.local:4444/xtrabackup_sst//1' --socket '/var/lib/mysql/mysql.sock' --datadir '/var/lib/mysql/' --defaults-extra-file '/etc/mysql/my-galera.cnf' '' --gtid '6efedc62-c1eb-11e6-aa88-caa42e8a4bf5:0' --gtid-domain-id '0''

2016-12-14 10:53:56 139884364667648 [Note] WSREP: sst_donor_thread signaled with 0

2016-12-14 10:53:57 139884048152320 [Note] WSREP: (605ee840, 'tcp://0.0.0.0:4567') turning message relay requesting off

WSREP_SST: [INFO] Streaming with xbstream (20161214 10:53:57.285)

WSREP_SST: [INFO] Using socat as streamer (20161214 10:53:57.294)

WSREP_SST: [INFO] Using /tmp/tmp.VPV4JTPiMJ as xtrabackup temporary directory (20161214 10:53:57.328)

WSREP_SST: [INFO] Using /tmp/tmp.FaKIQIPmur as innobackupex temporary directory (20161214 10:53:57.333)

WSREP_SST: [INFO] Streaming GTID file before SST (20161214 10:53:57.343)

WSREP_SST: [INFO] Evaluating xbstream -c ${INFO_FILE} | socat -u stdio TCP:mysql-2.galera.default.svc.cluster.local:4444; RC=( ${PIPESTATUS[@]} ) (20161214 10:53:57.347)

WSREP_SST: [INFO] Sleeping before data transfer for SST (20161214 10:53:57.356)

WSREP_SST: [INFO] Streaming the backup to joiner at mysql-2.galera.default.svc.cluster.local 4444 (20161214 10:54:07.366)

WSREP_SST: [INFO] Evaluating innobackupex --defaults-extra-file=/etc/mysql/my-galera.cnf --no-version-check $tmpopts $INNOEXTRA --galera-info --stream=$sfmt $itmpdir 2>${DATA}/innobackup.backup.log | socat -u stdio TCP:mysql-2.galera.default.svc.cluster.local:4444; RC=( ${PIPESTATUS[@]} ) (20161214 10:54:07.374)

2016-12-14 10:54:11 139884039759616 [Note] WSREP: 0.0 (mysql-0): State transfer to 2.0 (mysql-2) complete.

2016-12-14 10:54:11 139884039759616 [Note] WSREP: Shifting DONOR/DESYNCED -> JOINED (TO: 0)

2016-12-14 10:54:11 139884039759616 [Note] WSREP: Member 0.0 (mysql-0) synced with group.

2016-12-14 10:54:11 139884039759616 [Note] WSREP: Shifting JOINED -> SYNCED (TO: 0)

2016-12-14 10:54:11 139884364667648 [Note] WSREP: Synchronized with group, ready for connections

WSREP_SST: [INFO] Total time on donor: 0 seconds (20161214 10:54:11.231)

WSREP_SST: [INFO] Cleaning up temporary directories (20161214 10:54:11.241)

2016-12-14 10:54:15 139884039759616 [Note] WSREP: 2.0 (mysql-2): State transfer from 0.0 (mysql-0) complete.

2016-12-14 10:54:15 139884039759616 [Note] WSREP: Member 2.0 (mysql-2) synced with group.
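If you want to follow the state changes of the donor without reading the whole log, a small script can pull out the "Shifting" lines. The following Python sketch is only an illustration. The function name state_shifts is hypothetical, and the pattern is derived from the log lines in the excerpt above rather than from a documented log format.

```python
import re

# Matches lines such as "... WSREP: Shifting SYNCED -> DONOR/DESYNCED (TO: 0)".
SHIFT_PATTERN = re.compile(r"WSREP: Shifting (\S+) -> (\S+)")


def state_shifts(log_text):
    """Return (old_state, new_state) tuples in the order they appear in the log."""
    return [(match.group(1), match.group(2)) for match in SHIFT_PATTERN.finditer(log_text)]


# Three lines copied from the excerpt above.
SAMPLE = """\
2016-12-14 10:53:56 139884039759616 [Note] WSREP: Shifting SYNCED -> DONOR/DESYNCED (TO: 0)
2016-12-14 10:54:11 139884039759616 [Note] WSREP: Shifting DONOR/DESYNCED -> JOINED (TO: 0)
2016-12-14 10:54:11 139884039759616 [Note] WSREP: Shifting JOINED -> SYNCED (TO: 0)
"""

if __name__ == "__main__":
    for old_state, new_state in state_shifts(SAMPLE):
        print(f"{old_state} -> {new_state}")
```

For the excerpt above this shows mysql-0 leaving the SYNCED state to act as donor and returning to SYNCED once the state transfer is complete.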

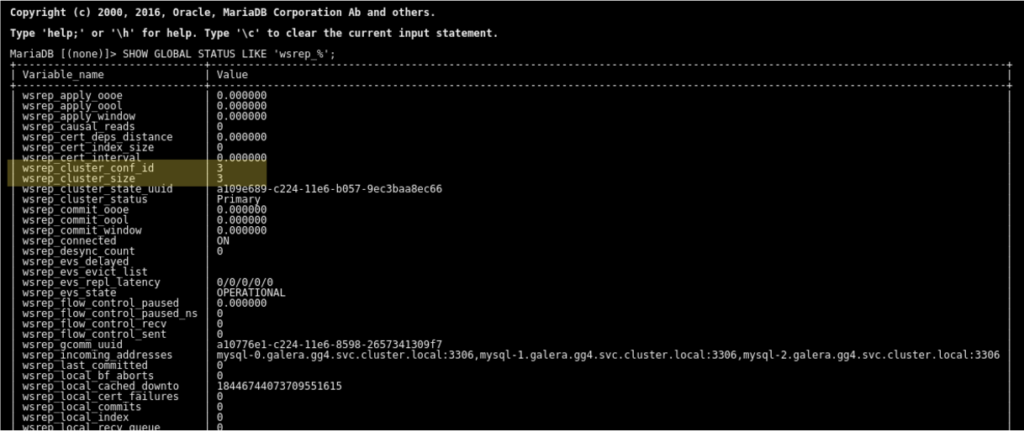

To test that the cluster was constructed successfully, you can run the following command to show the Galera Cluster size:

oc exec mysql-0 -- mysql -u root -e 'SHOW GLOBAL STATUS LIKE "wsrep_cluster_size";'

After all the pods have been started you should see the following output:

oc exec mysql-0 -- mysql -u root -e 'SHOW GLOBAL STATUS LIKE "wsrep_cluster_size";'

Variable_name Value

wsrep_cluster_size 3
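If you'd like to automate this check, the output of the command can be parsed with a few lines of Python. The sketch below assumes that the output consists of a header line followed by whitespace-separated name and value pairs, as shown above. The function names parse_status and cluster_is_complete are hypothetical.

```python
def parse_status(output):
    """Parse 'SHOW GLOBAL STATUS' output into a dict of variable name to value."""
    values = {}
    for line in output.splitlines():
        parts = line.split()
        # Skip empty lines and the "Variable_name Value" header.
        if len(parts) == 2 and parts[0] != "Variable_name":
            values[parts[0]] = parts[1]
    return values


def cluster_is_complete(output, expected_size):
    """Return True if wsrep_cluster_size is present and equals expected_size."""
    size = parse_status(output).get("wsrep_cluster_size")
    return size is not None and int(size) == expected_size


if __name__ == "__main__":
    # Sample text copied from the output shown above.
    sample = "Variable_name\tValue\nwsrep_cluster_size\t3\n"
    print(cluster_is_complete(sample, 3))
```

In practice the sample string would be replaced by the captured output of the oc exec command shown above.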

Limitations of PetSet in Alpha

The full list of limitations of PetSets in the Alpha state is available in the Kubernetes documentation. They concern multiple areas, but mostly tasks that currently require manual interaction. For example, the number of replicas can only be increased by updating the replicas parameter in the PetSet definition, and nothing else in the definition can be changed. That also means a PetSet cannot be updated by changing its definition, so to move to a new image version you'll need to create a new PetSet and join it with the old cluster.
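Before applying a changed definition, it can help to check whether the change touches anything other than the replica count. The following Python sketch compares two definitions that have already been loaded into dictionaries (for example from YAML). It assumes that the replica count lives under spec.replicas, and the function names are hypothetical.

```python
import copy


def without_replicas(definition):
    """Return a copy of the definition with spec.replicas removed."""
    stripped = copy.deepcopy(definition)
    stripped.get("spec", {}).pop("replicas", None)
    return stripped


def is_scale_only_change(old, new):
    """Return True if old and new differ at most in spec.replicas."""
    return without_replicas(old) == without_replicas(new)


if __name__ == "__main__":
    # Hypothetical, heavily shortened definitions for illustration only.
    old = {"kind": "PetSet", "spec": {"replicas": 3, "serviceName": "galera"}}
    new = {"kind": "PetSet", "spec": {"replicas": 5, "serviceName": "galera"}}
    print(is_scale_only_change(old, new))
```

A result of False indicates a change that, given the limitations described above, requires creating a new PetSet instead of updating the existing one.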

What does the future hold for PetSets?

With the release of Kubernetes v1.5, PetSets leave the Alpha state and are promoted to Beta under the new name StatefulSet. This is an important step, because the API of a feature in the Beta state is expected to be considerably more stable than in Alpha. Kubernetes v1.5 was released on the 10th of December 2016, and the release of OpenShift Container Platform v3.5, which will be based on Kubernetes v1.5, is planned for Q3 2017.

About Adfinis SyGroup AG

Adfinis SyGroup is a leading Open Source service provider helping customers to implement best-in-class solutions based on Linux and related products. The crew is based in Switzerland and loves to play with bleeding-edge technologies.

Links

- Kubernetes PetSet Documentation

- Kubernetes Init Container Documentation

- OpenShift Persistent Storage Documentation

- MariaDB Galera on OpenShift (GitHub)

- MariaDB Galera Cluster - What is MariaDB Galera Cluster?

- Persistent Volumes - OpenShift Persistent Storage Documentation

- PetSets - Kubernetes PetSet Documentation

- Pods - OpenShift Pod Documentation

- Service - OpenShift Services Documentation