Red Hat blog

Working with OpenShift Online

I work at Red Hat, helping customers evaluate Kubernetes and OpenShift through proofs-of-concept and workshops. Independently, I am also part of a startup providing mobile game analysis services for game developers. Those two activities have made me a user of Red Hat’s OpenShift Online platform since its initial release, based on OpenShift v2. More recently, OpenShift Online has moved to the Kubernetes-based OpenShift v3 platform, and so we’ve been planning the migration of our apps onto the new platform, and into orchestrated containers.

When building OpenShift Container Platform environments for customers, I generally have full control over the cluster and don’t get much opportunity to experience the platform as an “ordinary” user. In adopting the new Red Hat OpenShift Online service, I would be acting as a platform consumer: no special privileges, administration rights, or access to the underlying hosts, and subject to controls and restrictions on the resources available for running my apps. I hoped this shift in experience would not get in the way of creating a new home for our service.

I was really happy with OpenShift Online v2 and everything was working. The development and deployment experience was really good, and we didn’t have any problems. Usually, you don’t fix something that isn’t broken, but because OpenShift Online v2 was nearing end-of-life, migrating to v3 seemed the most sensible option. Of course, this type of activity isn’t limited to developers migrating from OpenShift Online v2 but is something that many developers may be considering as they look towards containerization and running on Kubernetes and OpenShift.

In moving our services to OpenShift Online v3, we had some choices to make about how we were going to deploy our apps. This post will cover the approaches we adopted and decisions we made along the way. First, I need to tell you a little about our application architecture, then we’ll look at how we tackled the migration challenge, including:

- Application configuration in the container

- SSL certificates and domain names

- Application components and deployments

- Enabling our development team

Service and Architecture

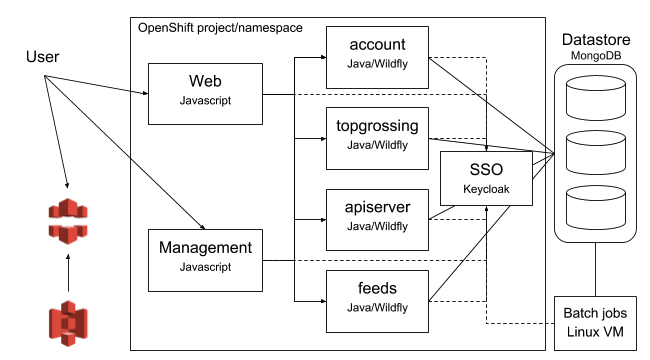

Our application and service stack is pretty simple. The frontend is written in JavaScript / Angular, with a Java backend service connecting to a MongoDB datastore. We have been pleased with our NoSQL datastore choice: our data model turned out to be quite dynamic, and the choice has allowed us to do more or less whatever we like with data and data structures. WildFly hosts the Java backends, providing REST APIs used by the frontend. There are a number of backend services divided into logical components, following some microservice architecture principles. Finally, Keycloak is used to provide an authentication and authorization layer around the service. Amazon Web Services such as S3 and CloudFront are used to store and distribute content.

Diagram 1: Overall layout of the entire service stack

Moving applications to OpenShift

Despite OpenShift 2 being quite different from OpenShift 3 from a technology perspective, we found many operational similarities. In particular, as PaaS users, we were constrained to operate within logical sandboxes. Thankfully this helped prepare us for the migration to OpenShift 3.

Our existing build methods migrated easily to OpenShift 3. OpenShift includes a container build technology that simplifies the job of creating containers. While continuing to support traditional Dockerfile builds, OpenShift’s Source-to-Image (S2I) feature lets a developer create new container images by selecting an appropriate base ‘builder’ image, and matching it with a pointer to an application’s source code repository. The result is a container image ready for scheduling on the OpenShift cluster. The S2I approach not only reduces friction for developers but also helps organizations standardize their build pipelines and container layouts.
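
To give a rough idea of what such a build configuration contains, the Python sketch below assembles a minimal S2I BuildConfig and prints it as JSON, which can be piped to `oc apply -f -`. The application name, Git URL, and builder tag are placeholders for illustration rather than the values we used, and the output tag assumes an ImageStream with the same name already exists in the project.

```python
import json


def s2i_build_config(name, git_uri, builder_tag, builder_namespace="openshift"):
    """Return a minimal Source-to-Image BuildConfig manifest as a dict."""
    return {
        "apiVersion": "build.openshift.io/v1",
        "kind": "BuildConfig",
        "metadata": {"name": name},
        "spec": {
            # Where the application source code lives.
            "source": {"type": "Git", "git": {"uri": git_uri}},
            # The S2I builder image that compiles and packages the source.
            "strategy": {
                "type": "Source",
                "sourceStrategy": {
                    "from": {
                        "kind": "ImageStreamTag",
                        "name": builder_tag,
                        "namespace": builder_namespace,
                    }
                },
            },
            # The resulting application image is pushed to this tag.
            "output": {"to": {"kind": "ImageStreamTag", "name": name + ":latest"}},
        },
    }


# Placeholder values, for illustration only.
example_build = s2i_build_config(
    "backend", "https://git.example.com/team/backend.git", "wildfly:10.0"
)
print(json.dumps(example_build, indent=2))
```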

The Java backends are built using Maven. Maven is the supported build technology for the WildFly S2I builder, so it was a simple task to create new OpenShift build configurations matching the WildFly S2I builder to the backends’ source code repositories. By default the Maven build runs with an ‘openshift’ profile, allowing us to add build configuration to, for example, rename the resulting WAR file to ROOT.war, so our application doesn’t have an ugly context path. A set of ConfigMaps and Secrets is available to modify and tune app runtimes without code changes, and without baking configuration files into the deployment. One additional benefit was that this migration made it easy to upgrade the WildFly version, from 9 to 10.

For application deployment, we replaced our existing environment variables with a combination of ConfigMaps and Secrets, which gives greater control and secures sensitive information, such as passwords, more effectively.

Our existing frontend developer workflow created the static HTML, CSS, and JS locally using gulp, then committed the assets to a repo. The build configurations for these frontend components were straightforward, pairing any readily-available static web server image with our site assets from the git repo. We could automate the build process further by adopting the NodeJS S2I image, and creating a custom assemble script to match the manual steps taken by the developers, but our initial focus was simply to migrate the existing technology, not necessarily to update our approach.

My initial thought was to create a new nginx container to serve the static content and use the Dockerfile build strategy, but this is actually disabled on the multi-tenant OpenShift Online, for security reasons. We could compile a custom builder image and push it to Docker Hub and use it from there, but this would mean maintaining a base image separately, and it would add an overhead to our build and deployment pipelines. The compromise was to use the PHP builder image, which is based on Apache httpd, and it worked very well for this purpose.

Of all the service components, Keycloak proved to be the biggest challenge. We ran an older version of Keycloak on OpenShift Online v2, and migrating data from this to the most recent version turned out to be rather complicated. In addition, we had chosen to use MongoDB for the Keycloak datastore, as we felt it would be better than PostgreSQL, but this complicated the migration even further. Despite this, the Keycloak documentation (http://www.keycloak.org/documentation.html) proved to be excellent in supporting the migration, and soon enough we were upgraded to the latest Keycloak.

We needed to create our own container image for Keycloak, and publish it to Docker Hub, before running it on OpenShift. To get started, we relied heavily on a blog post by Shoubhik Bose detailing the process of running Keycloak on OpenShift, and on the accompanying GitHub repo.

Secrets and ConfigMaps

Secrets and configs are among the most important parts of your environment and deployments. Ideally, containers should be immutable throughout the development life cycle; that is, the container image does not change. What we run in production is the same image we ran in development and testing. This introduces a challenge when we consider how to accommodate this principle while allowing for variations in the run-time context of each lifecycle stage. As a prime example, how do we ensure that a test instance does not connect to a production database?

The Kubernetes community has experimented with various solutions to this problem, including environment variables, Spring active profile settings, and configuration systems built on components such as etcd.

Kubernetes simplifies this task by providing two different resources that can be used in similar ways: Secrets and ConfigMaps. Both of these resources store data, strings or binaries, in key-value pairs. The data can be used in different ways: either to configure properties within the Kubernetes Pod specification, for example populating environment variables, or rendered as files within the running container at deployment time. When a Secret is used to create files, the files are mounted on a temporary in-memory file system (tmpfs) within the running container namespace, which means that they are never at rest on the host Kubernetes Node. The key difference between ConfigMaps and Secrets is that a Secret’s values are base64-encoded, and so not human-readable, until they are used in a container deployment; note that this is an encoding, not encryption. The only place a Secret’s data is at rest is within the Kubernetes datastore (etcd), which itself can be operated on an encrypted file system.
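
The encoding used for Secret values is base64, which anyone with read access to the resource can reverse, so it should not be mistaken for encryption. The following Python sketch, using made-up credentials and hypothetical helper names, shows the round trip:

```python
import base64


def encode_secret_data(values):
    """Base64-encode each value, as stored in a Secret's `data` field."""
    return {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in values.items()
    }


def decode_secret_data(data):
    """Reverse the encoding; no key or password is needed to do so."""
    return {
        key: base64.b64decode(value).decode("utf-8")
        for key, value in data.items()
    }


# Made-up credentials, for illustration only.
plain = {"username": "admin", "password": "not-a-real-password"}
encoded = encode_secret_data(plain)
print(encoded["username"])          # YWRtaW4=
print(decode_secret_data(encoded))  # the original values again
```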

This means developers can build the container once in development, and then deploy the same container image into subsequent lifecycle stages, using Secrets and ConfigMaps to provide the runtime context for each stage. A common pattern for this approach is to map Kubernetes namespaces (referred to as Projects in OpenShift) to lifecycle stages, for example: myapp-dev, myapp-test, myapp-prod. We use staging and production namespaces, and we can provision QA namespaces on demand.
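
From the application’s point of view, this pattern means reading every stage-specific value from the environment rather than from the image. Our backends are Java, but the idea is the same in any language; here is a minimal Python sketch in which the variable names and URLs are invented for illustration:

```python
import os


def load_settings(environ=None):
    """Read runtime settings injected by the platform.

    The variable names are hypothetical. Required values raise KeyError
    when missing, so a misconfigured deployment fails fast instead of
    silently falling back to another stage's database.
    """
    env = os.environ if environ is None else environ
    return {
        "mongodb_url": env["MONGODB_URL"],          # would come from a Secret
        "keycloak_url": env["KEYCLOAK_URL"],        # would come from a ConfigMap
        "log_level": env.get("LOG_LEVEL", "INFO"),  # optional, with a default
    }


# The same code (and image) with two different runtime contexts.
staging = load_settings({
    "MONGODB_URL": "mongodb://db.staging.example.com:27017/app",
    "KEYCLOAK_URL": "https://sso-staging.example.com",
})
production = load_settings({
    "MONGODB_URL": "mongodb://db.prod.example.com:27017/app",
    "KEYCLOAK_URL": "https://sso.example.com",
    "LOG_LEVEL": "WARN",
})
print(staging["mongodb_url"], production["mongodb_url"])
```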

OpenShift provides a mechanism to easily manage container image pull-specs using the ImageStream resource. The ImageStream provides an abstraction through which we can manage references to particular container image versions, without losing the unique reference to the image; the running pod references the full sha256 reference for the image. This is very important when you need to show or track the provenance of an image, particularly when operating applications in production. For more information on container images in OpenShift Online see https://docs.openshift.com/online/dev_guide/managing_images.html.
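
A digest-pinned pull-spec has the form `repository@sha256:<hex digest>`. As a small illustration of why this helps with provenance, the Python sketch below splits such a reference so the digest can be recorded or compared; the registry host, repository, and digest are made up.

```python
def split_pull_spec(pull_spec):
    """Split 'repository@sha256:<hex>' into (repository, digest)."""
    repository, separator, digest = pull_spec.partition("@")
    if not separator or not digest.startswith("sha256:"):
        raise ValueError("not a digest-pinned pull spec: " + pull_spec)
    return repository, digest


# A made-up reference; real digests are 64 hexadecimal characters.
example_spec = "registry.example.com/myapp-prod/backend@sha256:" + "ab" * 32
repository, digest = split_pull_spec(example_spec)
print(repository)
print(digest)
```

A tag such as `backend:latest` can move to a different image over time, whereas the digest identifies exactly one image.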

Secrets

As discussed, we do not want to hardcode our database credentials into our code or container image. Secrets are a more appropriate method, holding either a complete datastore configuration or simply username and password pairs that are assigned to environment variables at runtime. Unlike your other resources, which should be managed like source code, Secrets should not be committed to your source code repository, so I would recommend using them sparingly. To get started, keep it simple and manage Secrets through the OpenShift tools. You can develop more sophisticated methods later, such as build automation and dedicated secrets management, perhaps when your workflow requires Secrets to change frequently and manual Secret management has become a bottleneck.

You should use Secrets for any configuration data that you need to protect, such as credentials, API keys, certificates and SSL keys. For more information on Secrets see https://docs.openshift.com/online/dev_guide/secrets.html

ConfigMaps

For non-sensitive configuration information, ConfigMaps are more appropriate, and easier to manage. Like Secrets, ConfigMaps consist of key-value pairs; files can be included by simply associating a key with a file’s contents. Applications must redeploy in order to make use of ConfigMap changes, but this is similar to most externalized configuration: you need to restart the process. I would recommend that you manage the source of your configuration files via your preferred source control, ensuring that changes are properly tracked, and making it straightforward to incorporate them into your preferred CI/CD pipelines. Externalising configuration as files, or environment variables, makes configuration management much easier than if the configuration is managed within a database, for example. For more information on ConfigMaps see https://docs.openshift.com/online/dev_guide/configmaps.html
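
To make the key-value structure concrete, here is a hedged Python sketch that builds a ConfigMap manifest holding both simple values and a whole file under one key. The resource name, keys, and file contents are invented for illustration; the printed JSON can be piped to `oc apply -f -`.

```python
import json


def config_map(name, literals=None, files=None):
    """Build a ConfigMap manifest.

    `literals` maps keys to short string values; `files` maps a file name
    (used as the key) to that file's full text contents.
    """
    data = dict(literals or {})
    data.update(files or {})
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": name},
        "data": data,
    }


# Invented example values.
example_config = config_map(
    "backend-config",
    literals={"LOG_LEVEL": "INFO"},
    files={"app.properties": "cache.ttl.seconds=300\nfeature.reports=true\n"},
)
print(json.dumps(example_config, indent=2))
```

Keeping the inputs to a script like this in source control gives the change tracking recommended above.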

SSL and domain names

OpenShift Online provides you with a default domain name for your applications, including a valid SSL certificate. However, for anything other than testing, you would probably prefer to use your own subdomain. Using a custom subdomain with OpenShift is as easy as providing a CNAME record to resolve to the default OpenShift Online subdomain, but then you must also source your own SSL certificates for this domain.

Making an OpenShift application available to external clients is done by exposing the application’s associated service. The action creates an OpenShift-specific Route resource, similar in concept to the Kubernetes Ingress resource, which manages OpenShift’s reverse proxy configuration in its routing layer. Requests for named hosts are matched against associated applications and routed accordingly.

Services such as Let’s Encrypt make obtaining valid SSL certificates free and straightforward, so there are few reasons for avoiding encryption in your applications; above all, it protects your customers’ privacy and data. In order to terminate SSL we must obtain a new valid SSL certificate for the custom hostname (or for the domain, if obtaining a wildcard SSL certificate) and include this in our configuration. OpenShift Routes can either terminate TLS at the routing layer, with unencrypted or re-encrypted traffic back to your application, or pass the connection through for direct termination at the application.

If terminating TLS at the routing layer, we need to include our certificate and key within the Route resource object. Alternatively, we can store the certificate and key in a Secret and mount it within our application Pod, for example in /etc/pki/tls/private, to be used by our application. For more information on securing routes see the OpenShift Secured Routes documentation.
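
As an illustration of the first option, the Python sketch below builds a Route that terminates TLS at the routing layer (“edge” termination) with the certificate and key embedded in the resource. The hostname, service name, and PEM strings are placeholders; in practice the PEM text would be read from the files issued by your certificate authority.

```python
import json


def edge_route(name, host, service, certificate_pem, key_pem):
    """Build a Route that terminates TLS at the OpenShift router."""
    return {
        "apiVersion": "route.openshift.io/v1",
        "kind": "Route",
        "metadata": {"name": name},
        "spec": {
            "host": host,  # the custom hostname the CNAME record points from
            "to": {"kind": "Service", "name": service},
            "tls": {
                "termination": "edge",
                "certificate": certificate_pem,
                "key": key_pem,
                # Redirect plain HTTP requests to HTTPS.
                "insecureEdgeTerminationPolicy": "Redirect",
            },
        },
    }


# Placeholder values; never commit a real private key.
example_route = edge_route(
    "frontend",
    "www.example.com",
    "frontend",
    "-----BEGIN CERTIFICATE-----\n...\n-----END CERTIFICATE-----\n",
    "-----BEGIN PRIVATE KEY-----\n...\n-----END PRIVATE KEY-----\n",
)
print(json.dumps(example_route, indent=2))
```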

It is important to remember that certificates expire, and you will need to update them. In particular, Let’s Encrypt SSL certificates are valid for only 90 days, so you need a strategy for managing certificate expirations and updates. Currently, we update them manually with the oc client tool when certificates are renewed; however, we plan to automate this in future using OpenShift utilities and automation tooling such as Ansible. If you would like to know more about using Let’s Encrypt certificates in OpenShift, you should read this blog post.
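
Even with a manual process, it helps to know when the next renewal is due. The Python sketch below does the date arithmetic for a 90-day certificate; the 30-day safety margin is our own assumption for the example, not a platform requirement.

```python
from datetime import date, timedelta

VALIDITY_DAYS = 90      # Let's Encrypt certificate lifetime
RENEW_BEFORE_DAYS = 30  # assumed safety margin before expiry


def expiry_date(issued_on, validity_days=VALIDITY_DAYS):
    """Date on which a certificate issued on `issued_on` expires."""
    return issued_on + timedelta(days=validity_days)


def renewal_date(issued_on, renew_before_days=RENEW_BEFORE_DAYS):
    """Date from which the certificate should be renewed."""
    return expiry_date(issued_on) - timedelta(days=renew_before_days)


def needs_renewal(issued_on, today):
    """True once `today` has reached the renewal date."""
    return today >= renewal_date(issued_on)


issued = date(2018, 1, 1)
print(expiry_date(issued))                      # 2018-04-01
print(renewal_date(issued))                     # 2018-03-02
print(needs_renewal(issued, date(2018, 3, 15)))  # True
```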

What to expose

As discussed, the normal operation of applications within OpenShift, and indeed any Kubernetes platform, is that their network services are not externally accessible. Applications within the cluster connect to each other through the Service resource. Kubernetes Services provide a stable endpoint for communication to application Pods and are capable of supporting TCP and UDP ports with port mapping, for example, Service port 80/tcp mapping to Pod port 8080/tcp.
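
The port mapping from that example can be written down as a Service manifest. A minimal Python sketch follows, with an invented service name and Pod label:

```python
import json


def service(name, selector, port, target_port, protocol="TCP"):
    """Build a Service that maps `port` to `target_port` on matching Pods."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            # Pods carrying these labels receive the traffic.
            "selector": selector,
            "ports": [
                {
                    "name": "{}-{}".format(port, protocol.lower()),
                    "protocol": protocol,
                    "port": port,               # port exposed by the Service
                    "targetPort": target_port,  # port the container listens on
                }
            ],
        },
    }


# Service port 80/tcp mapped to Pod port 8080/tcp.
example_service = service("backend", {"app": "backend"}, 80, 8080)
print(json.dumps(example_service, indent=2))
```

Other Pods in the same namespace could then reach the application at `http://backend/` without knowing any Pod addresses.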

It is important to note that this communication can be, and often is, limited: by default, OpenShift Online limits communication to applications running within the same Kubernetes namespace, whereas OpenShift Container Platform supports Network Policy, which allows developers fine-grained control over cluster-based communication to their services. Limiting communication in this way is vital for secure operation, not only across your software development lifecycle but also when operating in a multi-tenant environment.

It is through the Pod and Service Kubernetes primitives that we are able to build the core network architecture of our applications, wiring our applications together. Services such as our backend and database remain private; it is only the frontend and API that we choose to expose and make available to external clients. Occasionally we want to temporarily access private services remotely, for example to access the database. We can do this using the OpenShift command line utility (oc), which provides a port-forward mechanism to map a local port on your client to a port on a specific Pod; the communication is securely tunnelled through the OpenShift API.
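
For repeat use, the port-forward invocation can be wrapped in a small helper. This Python sketch only assembles the `oc port-forward` command line; the Pod name and project are hypothetical, and actually running it requires the `oc` client and a logged-in session.

```python
import subprocess


def port_forward_command(pod, local_port, remote_port, namespace=None):
    """Return the argument list for `oc port-forward`."""
    command = ["oc", "port-forward", pod, "{}:{}".format(local_port, remote_port)]
    if namespace:
        command += ["-n", namespace]
    return command


# Hypothetical Pod name and project; 27017 is MongoDB's default port.
command = port_forward_command("mongodb-1-abcde", 27017, 27017, namespace="myapp-staging")
print(" ".join(command))

# To open the tunnel (blocks until interrupted with Ctrl+C):
# subprocess.run(command, check=True)
```

While the tunnel is open, a local client can connect to `localhost:27017` as if the database were running on the workstation.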

For more information about exposing services with routes see the OpenShift document about Routes. For more information about services see the Pods and Services documentation.

What to run on OCP and where

Our OpenShift v2 application deployment included hosting the database and its batch jobs. Despite this approach working well, we reviewed the design as part of the migration to OpenShift v3.

Perhaps the biggest change was moving the database from self-hosted to a third-party provider. The main reason for this change was simple: we wanted to minimize our management burden.

Second, we decided to move the batch jobs outside of OpenShift. Previously the batch jobs were included with the Wildfly application and were triggered as cron jobs, and this became tricky to manage as we scaled up our application servers. In the latest design, we outsource batch jobs to an external virtual machine and schedule them with cron(8). This is an area we plan to revisit and improve in the future.

Along with these changes, we plan to use Service Brokers to connect external services in the future. While OpenShift Container Platform supports the Open Service Broker API, the API is not yet available in OpenShift Online. Since we are using Amazon S3, and external, manually-configured MongoDB services, the automated binding and provisioning offered by the service brokers is attractive, and something we will consider when it becomes available.

How to get started with your team

In our example, we already had solid experience in the team with OpenShift and Kubernetes. Obviously, that helped during the migration process. But once the initial build and deployment resources were in place, the rest of the team didn’t really have to know much about OpenShift, Kubernetes, or indeed containers. They could focus on their application and its users. OpenShift reduces friction for developers: with builds and deployments triggered automatically from code commits, the platform handles the build and deployment pipeline for you, and productivity rises quickly. Developers can even get a remote shell to containers and check logs without any container-related commands, right in the web browser.

Summary

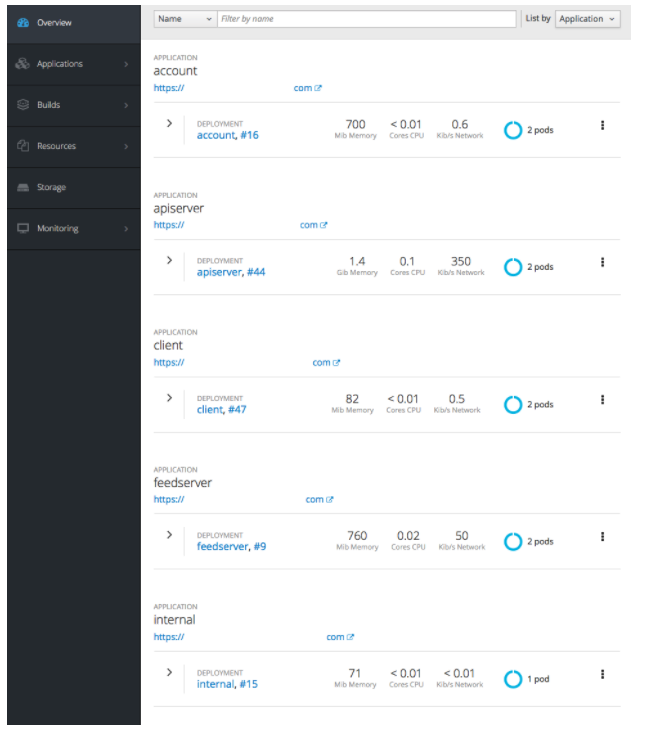

It was a great feeling when I saw all those blue circles in the OpenShift Web Console once everything was running. I actually had to take a moment, as I realized that after the many times I’d helped customers get to this point, it was the first time for me and my team.

My favorite view in OpenShift Web Console

There are so many features out-of-the-box in OpenShift: seamless deployments, Secrets, ConfigMaps, application auto-scaling, and readiness and liveness probes, for example. These features make running services painless, and map the Kubernetes architecture at OpenShift’s core onto the terrain application developers care about. For example, if your Java app has a memory leak, the offending container will simply be killed and replaced, without a break in service when your app is scaled to at least 2 Pods. (Of course, we’d recommend you fix your application code!)
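
One way this behaviour is typically configured is with a memory limit and health probes on the container. The Python sketch below builds such a container definition, as it would appear inside a deployment’s Pod template; the image name, health endpoint, limits, and timings are illustrative assumptions rather than our production settings.

```python
import json


def container_spec(name, image, port, memory_limit="512Mi", health_path="/health"):
    """Build a container definition with a memory limit and HTTP probes."""
    probe = {"httpGet": {"path": health_path, "port": port}}
    return {
        "name": name,
        "image": image,
        "ports": [{"containerPort": port, "protocol": "TCP"}],
        # A container exceeding this limit is killed and restarted.
        "resources": {"limits": {"memory": memory_limit}},
        # Traffic is only routed to the Pod once this probe succeeds.
        "readinessProbe": dict(probe, initialDelaySeconds=15, periodSeconds=10),
        # Repeated failures of this probe cause the container to be restarted.
        "livenessProbe": dict(probe, initialDelaySeconds=60, periodSeconds=20),
    }


# Illustrative values only.
example_container = container_spec("backend", "backend:latest", 8080)
print(json.dumps(example_container, indent=2))
```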

KISS (Keep It Simple, Stupid) also works with OpenShift and containers. You have all the bells and whistles to do magic with containers, like A/B testing or blue-green deployments. You can also create build pipelines to test your code, make coffee, and notify you on Slack when your cup is ready (HTTP 418). But you don’t have to do any of that to make your deployments painless. Just create your build configuration with the provided tools, and start from there. Keep in mind that even though CI/CD and DevOps are cool and a really hot topic, a nice build pipeline doesn’t make your application any smarter.

More information:

Managing images: https://docs.openshift.com/online/dev_guide/managing_images.html

Secrets: https://docs.openshift.com/online/dev_guide/secrets.html

Keycloak documentation: http://www.keycloak.org/documentation.html

Blog post about running Keycloak on OpenShift: https://medium.com/@sbose78/running-keycloak-on-openshift-3-8d195c0daaf6

Keycloak deployment project on GitHub: https://github.com/hectorj2f/keycloak-deployment