Red Hat blog

Red Hat OpenShift 3.x supports the use of persistent storage for data that needs to be accessed beyond the lifespan of a container instance. Persistent storage is enabled through a variety of supported plug-ins, including Elastic Block Store (EBS) when operating on Amazon Web Services, Azure File/Disk on the Microsoft Cloud and iSCSI or NFS when running on premises, to name a few examples.

There are, however, occasions where applications need to access storage on systems that are not covered by one of the supported persistent storage plug-ins. This is especially common in environments that have a large Microsoft Windows footprint, or when a legacy application has been containerized. One mechanism for communicating with Windows storage backends is CIFS (Common Internet File System), an implementation of the SMB (Server Message Block) protocol. Even though OpenShift does not directly support CIFS as a volume plug-in, storage backends such as CIFS can still be accessed through an alternative plug-in type called FlexVolumes.

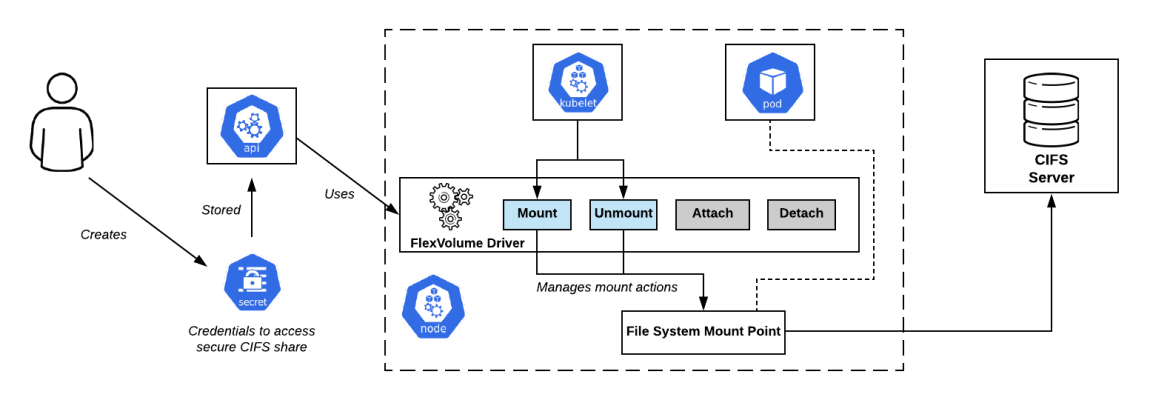

At a high level, FlexVolumes enable operators to supply an executable script (the driver) that contains the domain logic for a particular storage backend and performs the mounting and unmounting on the node running the container. The diagram above illustrates the architecture.
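To make the driver contract more concrete, here is a minimal sketch of how a FlexVolume driver script is typically structured. The kubelet invokes the executable with an operation name (such as `init`, `mount` or `unmount`) followed by arguments, and reads a JSON status object from standard output. The function names `flex_status` and `flex_dispatch` are hypothetical, and the `mount` and `unmount` branches are placeholders that only report what a real driver would do. This is not the code of the CIFS driver discussed later.

```shell
#!/bin/sh
# Sketch of a FlexVolume driver entry point (illustrative only).

# Print a JSON status object in the shape the kubelet expects.
# $1 = status (Success | Failure | Not supported), $2 = optional message
flex_status() {
    printf '{"status": "%s", "message": "%s"}\n' "$1" "${2:-}"
}

# Dispatch on the operation name passed as the first argument.
flex_dispatch() {
    op="${1:-}"
    case "$op" in
        init)
            # Report success and declare that no attach/detach step is needed.
            printf '{"status": "Success", "capabilities": {"attach": false}}\n'
            ;;
        mount)
            # $2 = target directory on the node, $3 = JSON options.
            # Placeholder: a real driver would mount the backend here.
            flex_status "Success" "would mount onto ${2:-}"
            ;;
        unmount)
            # $2 = directory to unmount. Placeholder only.
            flex_status "Success" "would unmount ${2:-}"
            ;;
        *)
            # Operations this sketch does not implement.
            flex_status "Not supported" "operation: $op"
            ;;
    esac
}

# A real driver would end with: flex_dispatch "$@"
```

Keeping the dispatch in a function rather than at the top level of the script makes each operation easy to exercise by hand, for example with `flex_dispatch init`.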

While a thorough walkthrough of FlexVolumes is beyond the scope of this discussion, the following non-Red Hat articles provide the necessary information for those who may be interested:

- An Introduction to Kubernetes FlexVolumes

- How to Create a Custom Persistent Volume Plugin in Kubernetes via FlexVolume

In this article, we will describe how to install a FlexVolume driver that manages the lifespan of a CIFS mount into an OpenShift environment followed by configuring an application that makes use of a FlexVolume volume plugin to access resources stored on a CIFS backend.

Before embarking on this specific implementation, it should be noted that other examples of a CIFS FlexVolume driver already exist.

While these are usable in many environments, the architectural and security requirements imposed by OpenShift required an enhanced solution. Since SELinux is enabled on all machines in the cluster, additional options need to be provided at mount time to label the directory used to mount the share with the appropriate security context, which allows containers running within pods to access the file system. The driver applies the security mount context system_u:object_r:svirt_sandbox_file_t:s0 automatically. Although end users could have been required to specify this mount option themselves, improper configuration would result in runtime errors, so the entire process was abstracted.
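As a rough illustration of what abstracting the mount option can look like, the sketch below appends the SELinux `context=` mount option to whatever options a user supplied. The function name `with_selinux_context` and the sample user options are assumptions made for this example; the actual driver in the repository may assemble its options differently.

```shell
# The context the article says the driver applies automatically.
SELINUX_CONTEXT='system_u:object_r:svirt_sandbox_file_t:s0'

# Append the SELinux context to a (possibly empty) comma-separated option list.
with_selinux_context() {
    user_opts="${1:-}"
    ctx_opt="context=\"${SELINUX_CONTEXT}\""
    if [ -n "$user_opts" ]; then
        printf '%s,%s\n' "$user_opts" "$ctx_opt"
    else
        printf '%s\n' "$ctx_opt"
    fi
}

# Example: options a user might supply, plus the enforced context.
with_selinux_context "vers=3.0,dir_mode=0775"
```

Because the context is always added by the helper, a user who forgets it cannot end up with a share that mounts but is unreadable from inside the container.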

Another enhancement implemented by the driver is improved handling of credentials when access to protected CIFS shares is required. Instead of specifying the username, password and optional domain inline as mount options, these values are stored in a file that can only be read by the root user, and that file is referenced as a mount option.
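The credentials-file approach can be sketched as follows. The `username=`, `password=` and `domain=` lines follow the file format accepted by the `credentials=` option of mount.cifs. The function name `write_cifs_credentials`, the placeholder values and the use of a temporary file are assumptions for illustration, not the driver's actual implementation. When run as root, the resulting file is readable by root only.

```shell
# Write a mount.cifs-style credentials file readable only by its owner.
# Arguments: <file> <username> <password> [domain]
write_cifs_credentials() {
    cred_file="$1"
    (
        umask 077   # a newly created file gets mode 0600
        {
            printf 'username=%s\n' "$2"
            printf 'password=%s\n' "$3"
            # The domain line is only written when a domain was supplied.
            if [ -n "${4:-}" ]; then
                printf 'domain=%s\n' "$4"
            fi
        } > "$cred_file"
    )
    # Tighten the mode in case the file already existed with looser bits.
    chmod 600 "$cred_file"
}

# Example with placeholder values and a temporary file.
demo_file="$(mktemp)"
write_cifs_credentials "$demo_file" "demo-user" "demo-pass" "EXAMPLE"
# The file would then be referenced at mount time as a single option:
printf 'credentials=%s\n' "$demo_file"
```

Passing a file path instead of inline values keeps the password out of the mount command line, where it could otherwise be visible in process listings.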

Each of these enhancements and more can be found within the project repository:

https://github.com/sabre1041/openshift-flexvolume-cifs

Included are the driver itself, Red Hat Ansible-based tooling to automate the installation into an OpenShift cluster, and additional reference OpenShift configuration files.

Installing the Driver

The driver can be installed manually or by using Ansible. All FlexVolume drivers are stored on OpenShift nodes within the /usr/libexec/kubernetes/kubelet-plugins/volume/exec/ directory. Within this folder, drivers are separated by vendor and driver name in the format <vendor>~<driver>. For this specific driver, the vendor name is openshift.io and the driver is cifs, so the folder that needs to be created on each node is /usr/libexec/kubernetes/kubelet-plugins/volume/exec/openshift.io~cifs. Create the folder, then locate the cifs driver file within the flexvolume-driver folder in the project repository and copy the file to the newly created folder. Finalize the installation by restarting the OpenShift node service:

| $ systemctl restart atomic-openshift-node |
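The manual steps above can be sketched as a small shell function. The function name `install_flexvolume_driver` and its optional fourth argument (an alternative plugin root, useful for trying the steps in a scratch directory) are hypothetical. The source path `flexvolume-driver/cifs` in the usage comment assumes the driver file in the repository is named `cifs`.

```shell
# Copy a FlexVolume driver into the kubelet plugin directory.
# Arguments: <driver file> <vendor> <driver name> [plugin root]
install_flexvolume_driver() {
    src="$1"
    vendor="$2"
    name="$3"
    # Default root is the directory named in the article; overridable for dry runs.
    root="${4:-/usr/libexec/kubernetes/kubelet-plugins/volume/exec}"
    target_dir="${root}/${vendor}~${name}"

    mkdir -p "$target_dir" || return 1
    cp "$src" "${target_dir}/${name}" || return 1
    # The kubelet executes the driver, so it must be executable.
    chmod 755 "${target_dir}/${name}" || return 1
    # Print the installed path so the caller can confirm the location.
    printf '%s\n' "${target_dir}/${name}"
}

# On a node (as root), from the repository root, this might look like:
#   install_flexvolume_driver flexvolume-driver/cifs openshift.io cifs
#   systemctl restart atomic-openshift-node
```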

Alternatively, Ansible can be used to automate the installation process. The Ansible assets are located within the project repository in a folder called ansible, where a single playbook called setup-openshift.yml and an inventory file can be found. Since the driver is to be installed on all OpenShift nodes, a single nodes group is used. If the inventory file from the OpenShift installation is available, it can be used instead. Otherwise, fill in the inventory with the OpenShift nodes and then run the playbook:

| $ ansible-playbook -i <inventory> setup-openshift.yml |

Configuring the Cluster

Now that the driver has been installed, it is ready for use. However, by default, the FlexVolume plugin is not available to most users. The ability to use a volume plugin is governed by one of the security features of OpenShift, Security Context Constraints (SCC). When a typical user launches a pod in OpenShift, the pod is associated with the restricted SCC. Aside from permitting only a limited number of volume plugins, its most recognizable trait is that containers run with a randomly assigned user ID. To enable FlexVolumes for users within an OpenShift environment, it is recommended that a custom SCC be created instead of modifying the built-in types. Since the goal is to retain the remaining security features employed by OpenShift by default and only add a new available volume plugin, start from the existing restricted SCC. As a cluster administrator, export it to a file:

| $ oc get --export scc restricted -o yaml > restricted-flexvolume-scc.yml |

Open the restricted-flexvolume-scc.yml file. First, change the name of the SCC from restricted to restricted-flexvolume within the metadata section.

| name: restricted-flexvolume |

Next, the restricted SCC is applied to all authenticated users by default, and this assignment is defined by the groups property. Locate this property, remove system:authenticated and replace it with an empty array as shown below:

| groups: [] |

Finally, scroll to the bottom of the file where the list of enabled volume plugins can be found. Add the following to the list of enabled plugins:

| - flexVolume |
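For those who prefer to script the three edits, the sketch below applies them with sed. It assumes the exported file has unindented top-level list items, that system:authenticated is the only entry under groups, and that the SCC name appears as a two-space-indented `name:` line under metadata. The sample file is a heavily abridged, hypothetical stand-in for a real export, and the function name `make_flexvolume_scc` is made up for this example. Review the result before creating the SCC.

```shell
# Apply the three edits described above to an exported SCC file.
# Prints the modified YAML to standard output; the input file is unchanged.
make_flexvolume_scc() {
    sed \
        -e 's/^  name: restricted$/  name: restricted-flexvolume/' \
        -e '/^- system:authenticated$/d' \
        -e 's/^groups:$/groups: []/' \
        -e '/^volumes:$/a\
- flexVolume' \
        "$1"
}

# Abridged, hypothetical sample standing in for the exported restricted SCC.
sample="$(mktemp)"
cat > "$sample" <<'EOF'
groups:
- system:authenticated
kind: SecurityContextConstraints
metadata:
  name: restricted
volumes:
- configMap
- secret
EOF

make_flexvolume_scc "$sample"
```

The new entry is added directly under `volumes:` rather than at the end of the list, since the order of the enabled plugins does not matter.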

Save the file and create the new SCC in the cluster:

| $ oc create -f restricted-flexvolume-scc.yml |

Confirm the new SCC is installed in the cluster:

| $ oc get scc restricted-flexvolume |

| NAME | PRIV | CAPS | SELINUX | RUNASUSER | FSGROUP | SUPGROUP | PRIORITY | READONLYROOTFS | VOLUMES |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| restricted-flexvolume | false | [] | MustRunAs | MustRunAsRange | MustRunAs | RunAsAny | <none> | false | [configMap downwardAPI emptyDir flexVolume persistentVolumeClaim projected secret] |

Confirm that flexVolume appears in the list of available volumes.

Accessing a CIFS Share from an Application

Note: This section describes how to connect an application running on OpenShift with an existing CIFS share that you will need to provide. It is recommended that the share be secured using a username/password combination, with root group write access enabled to support OpenShift’s security model.

With the CIFS driver installed and the cluster configured, a sample application can be used to demonstrate the integration. First, create a new project called “cifs-flexvolume-demo”:

| $ oc new-project cifs-flexvolume-demo |

As described earlier, since all containers and their associated service accounts make use of the restricted SCC by default, additional action is required in order to have the sample application utilize FlexVolumes. Execute the following command to grant the default service account in the cifs-flexvolume-demo project access to the restricted-flexvolume SCC:

| $ oc adm policy add-scc-to-user restricted-flexvolume -z default |
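The -z flag is shorthand for a service account in the current project. The small sketch below prints the full user name that the flag expands to, which is handy when checking who has been granted an SCC. The helper name `service_account_user` is hypothetical.

```shell
# Build the full user name that a service account maps to.
# Arguments: <project> <service account>
service_account_user() {
    printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# The default service account in the demo project.
service_account_user cifs-flexvolume-demo default
```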

This FlexVolume driver supports accessing shares that require authentication. Credentials are stored as a secret, declared within the volume definition and exposed to the driver at mount time. An OpenShift template called cifs-secret-template.yml is available in the examples folder to help streamline the secret creation process. Execute the following command, passing in the appropriate credentials for the CIFS share:

| $ oc process -p USERNAME=<username> -p PASSWORD=<password> -f examples/cifs-secret-template.yml | oc apply -f- |

A new secret called cifs-credentials should have been created.
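When inspecting the secret, keep in mind that values in a Secret's data field are stored base64-encoded. The sketch below shows the encode and decode round trip with a placeholder value. The helper names are hypothetical, and the key names used by the template are not shown here, so none are assumed.

```shell
# Encode a value the way it appears in a Secret's data field.
encode_secret_value() {
    printf '%s' "$1" | base64
}

# Decode it again, as a consumer of the Secret would.
decode_secret_value() {
    printf '%s' "$1" | base64 -d
}

encoded="$(encode_secret_value 'demo-user')"   # placeholder username
printf 'encoded: %s\n' "$encoded"
printf 'decoded: %s\n' "$(decode_secret_value "$encoded")"
```

Note that base64 is an encoding, not encryption, so access to the secret itself should still be restricted.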

With all of the prerequisite steps complete, the sample application can be deployed. The application consists of a simple Red Hat Enterprise Linux container that has been configured with a sleep loop to help it remain up and active. The goal is for you to be able to use a remote terminal to explore the container file system and confirm the ability to access the CIFS share. A template called application-template.yml is also available in the examples folder. Open the template file and take note of the volumes section:

    volumes:
      - name: cifs
        flexVolume:
          driver: openshift.io/cifs
          fsType: cifs
          secretRef:
            name: ${SECRET}
          options:
            networkPath: "${NETWORK_PATH}"
            mountOptions: "${MOUNT_OPTIONS}"

Notice the flexVolume plugin type and the parameters contained within the definition:

- Driver name correlates to the combination of the vendor and driver as configured on each node previously

- Name within the secretRef field refers to the name of the secret containing the credentials, as configured previously

- networkPath and mountOptions are provided as template parameters and configured as necessary. Review the default parameters (where available) at the bottom of the file.
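The relationship between the driver name in the volume definition and the folder created on each node can be sketched as a simple string conversion: the slash in <vendor>/<driver> becomes a tilde in the directory name. The function name `driver_to_plugin_dir` is hypothetical.

```shell
# Convert a FlexVolume driver name (<vendor>/<driver>) to the name of its
# directory on the node (<vendor>~<driver>).
driver_to_plugin_dir() {
    vendor="${1%%/*}"   # everything before the first slash
    driver="${1#*/}"    # everything after the first slash
    printf '%s~%s\n' "$vendor" "$driver"
}

driver_to_plugin_dir "openshift.io/cifs"
```

If a pod reports that the plugin cannot be found, comparing the output of this conversion with the folder names on the node is a quick first check.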

Instantiate the template by providing the path to the CIFS share in the format “//<server>/<share>” by executing the following command:

| $ oc process -p NETWORK_PATH="<network_path>" -f examples/ | oc apply -f- |
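Since a malformed network path only surfaces later as a mount failure, it can help to check the value before processing the template. The sketch below tests that a string starts with the //<server>/<share> form. The function name `is_network_path` and the example server name are hypothetical.

```shell
# Return success if the argument looks like //<server>/<share>.
is_network_path() {
    printf '%s\n' "$1" | grep -Eq '^//[^/]+/[^/]+'
}

# Example with a made-up server and share name.
if is_network_path "//fileserver.example.com/projects"; then
    echo "network path looks valid"
fi
```

The check is purely syntactic. It does not confirm that the server is reachable or that the share exists.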

A new deployment of the application should have been triggered. If the volume mounted successfully, the pod will transition into a Running state. Otherwise, it will remain with a status of ContainerCreating. If there were issues mounting the share, running “oc describe pod <pod_name>” will show a timeout error waiting for the volume to attach to the container. Since the actual mounting occurs on the node where the container is scheduled, inspecting the journald logs for the atomic-openshift-node service on that node will most likely identify the underlying cause of any issues:

| $ journalctl -u atomic-openshift-node |

Assuming there were no issues mounting the share to the container, the pod will transition to a running state.

The final step is to verify the share is accessible within the container. Obtain a remote shell session within the container by first locating the name of the running pod and starting the remote session:

| $ oc get pods |
| $ oc rsh <pod_name> |

The DeploymentConfig specifies that the CIFS mount be available within the container at “/mnt/cifs”. Navigate to this directory and touch a file.

| $ cd /mnt/cifs |
| $ touch openshift.txt |

Confirm the file is available within the network directory to verify the successful integration of CIFS shares and OpenShift.
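The same write check can be wrapped in a small function for repeated use, for example when testing several shares. This is only a sketch: the function name `check_share_writable` and the marker file name are hypothetical, and the marker is removed again after the test.

```shell
# Create and remove a marker file to confirm a directory is writable.
check_share_writable() {
    dir="$1"
    marker="${dir}/.write-test.$$"
    if touch "$marker" 2>/dev/null; then
        rm -f "$marker"
        echo "writable: $dir"
    else
        echo "not writable: $dir" >&2
        return 1
    fi
}

# Inside the container, this might be run as:
#   check_share_writable /mnt/cifs
```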

By making use of FlexVolumes and the CIFS driver, additional storage options are now possible. Given the fact that CIFS is a shared file protocol, it enables the use of a storage type that is common in many applications and expands the footprint of applications that can be utilized within OpenShift.

About the author

Andrew Block is a Distinguished Architect at Red Hat, specializing in cloud technologies, enterprise integration and automation.