Red Hat blog

Authors: Davis Phillips, Annette Clewett

Kubernetes Federation aims to provide a control plane for managing multiple Kubernetes clusters. Unfortunately, Federation is still considered an alpha project with no timeline for a General Availability release. As a stopgap until Federation is available, there are two ways to disperse cluster endpoints across locations: a single cluster stretched across multiple datacenters, or multiple clusters deployed across datacenters.

Kubernetes recommends that all VMs be isolated to a single datacenter: “when the Kubernetes developers are designing the system (e.g. making assumptions about latency, bandwidth, or correlated failures) they are assuming all the machines are in a single data center, or are otherwise closely connected.” Therefore, stretching an OpenShift Container Platform cluster across multiple datacenters is not recommended. However, if you need a disaster recovery plan today, this article details a potential solution.

Some design considerations that are important to keep in mind concerning stretching a cluster across multiple datacenters:

- Network latency & bandwidth

- Providing a storage solution across multiple data centers

- Disaster recovery for etcd and GlusterFS storage

- Application placement

As noted above, storage must be replicated to every datacenter. Some storage providers offer replication services, but these are usually asynchronous and do not provide read/write (RW) access at every location. In this design, Red Hat OpenShift Container Storage (RHOCS) in converged mode was chosen for its robust replication capabilities and tight integration with OpenShift.

RHOCS was formerly called Container-Native Storage and uses GlusterFS. GlusterFS is an open-source, software-based, network-attached filesystem that deploys on commodity hardware.

Quorum is the toughest obstacle in the design of an OpenShift stretch cluster. Both etcd and RHOCS require a majority (quorum) of their members: a cluster of 2n+1 members can tolerate n permanent failures, so a three-node cluster can tolerate the loss of only a single node. Ideally, this is solved with three datacenters, one member in each, so that losing any single datacenter still leaves a majority.
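The majority arithmetic can be checked quickly in shell. This is an illustrative sketch only (the function names are hypothetical): it computes the quorum size and tolerated failures for a few cluster sizes, then shows what happens to a three-member cluster split 2+1 across two sites.

```shell
# Majority-quorum arithmetic for a cluster of N voting members
# (the same majority rule described above for etcd and RHOCS).
quorum_size()        { echo $(( $1 / 2 + 1 )); }    # members needed for a majority
tolerated_failures() { echo $(( ($1 - 1) / 2 )); }  # members that may be lost

# has_quorum TOTAL SURVIVING: succeed if the survivors still form a majority
has_quorum() { [ "$2" -ge "$(quorum_size "$1")" ]; }

for n in 3 4 5; do
  echo "members=$n quorum=$(quorum_size "$n") tolerates=$(tolerated_failures "$n")"
done
# members=3 quorum=2 tolerates=1
# members=4 quorum=3 tolerates=1
# members=5 quorum=3 tolerates=2

# Three members split 2+1 across two sites:
has_quorum 3 1 && echo "lose the 2-member site: quorum kept" || echo "lose the 2-member site: quorum lost"
has_quorum 3 2 && echo "lose the 1-member site: quorum kept" || echo "lose the 1-member site: quorum lost"
```

Note that a fourth member does not help: it raises the quorum to three while still tolerating only one failure.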

GlusterFS requires a round-trip time (RTT) of 5 milliseconds or less between nodes. Latency this low can usually be achieved with a metropolitan area network (MAN) interconnecting sites within one geographic area or region; unfortunately, most customers do not have three datacenters with that kind of connectivity. In testing, a stretched OpenShift cluster with more than 5 milliseconds RTT between nodes failed health checks for persistent applications.
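Before committing to a stretch design, the inter-site RTT can be sampled against the 5 ms limit. This is a minimal sketch assuming the Linux iputils `ping` summary-line format; the function names and the peer hostname are hypothetical, and a short ping sample only approximates latency under real load.

```shell
# Hypothetical pre-check: compare the average RTT reported by ping with the
# 5 ms GlusterFS limit.
MAX_RTT_MS="${MAX_RTT_MS:-5}"

# Print the "avg" field of ping's summary line, e.g.
# "rtt min/avg/max/mdev = 0.412/0.530/0.733/0.098 ms" -> 0.530
avg_rtt_ms() {
  awk -F'/' '/^(rtt|round-trip) / { print $5 }'
}

# Succeed if the given RTT in ms is non-empty and <= MAX_RTT_MS.
rtt_within_limit() {
  [ -n "$1" ] && awk -v rtt="$1" -v max="$MAX_RTT_MS" 'BEGIN { exit !(rtt + 0 <= max + 0) }'
}

# Usage (gluster-dc2.example.com is a placeholder for a node in the other site):
#   rtt=$(ping -q -c 20 gluster-dc2.example.com | avg_rtt_ms)
#   rtt_within_limit "$rtt" && echo "OK: ${rtt} ms" || echo "Too slow: ${rtt} ms"
```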

OpenShift Stretch Cluster Configuration

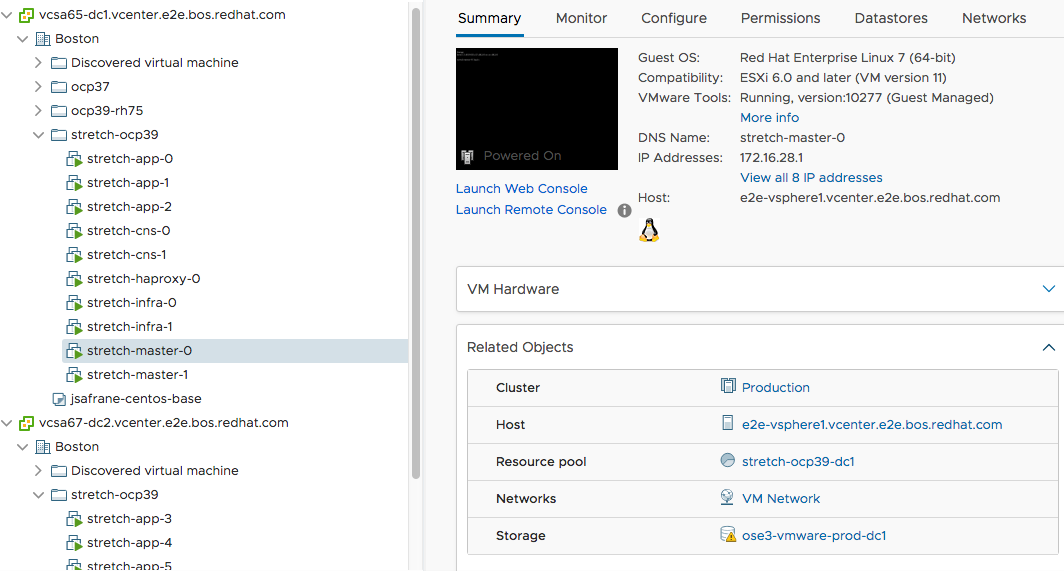

This test environment consisted of two vSphere 6.x clusters deployed on a single VLAN across two blade enclosures to simulate multiple datacenters. The underlying storage used two iSCSI LUNs, one assigned to each vSphere cluster for persistent storage.

VMware vSphere, a commonly used on-premises cloud provider, was the deployment platform. Other IaaS platforms could be used, provided they meet the compute, storage, and network requirements described above.

Figure 1.

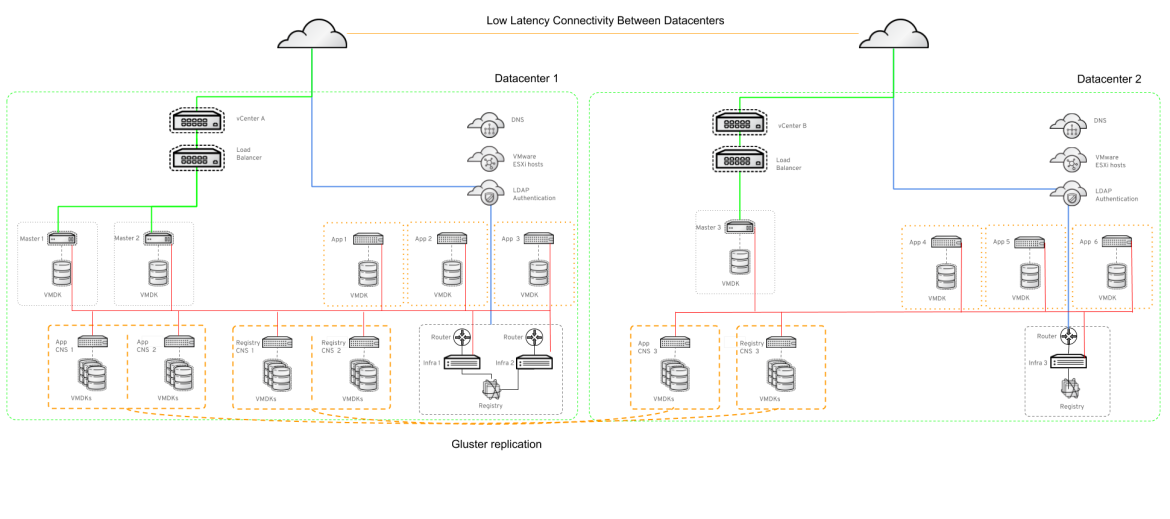

The RHEL virtual machines were created, and OpenShift 3.9 was installed with the relevant Ansible playbooks. Every master also runs etcd: two masters are located in DC1 and one in DC2. The OpenShift nodes for storage (GlusterFS PODs) are placed the same way, two in DC1 and one in DC2. The layout is shown in Figure 2.

Figure 2.

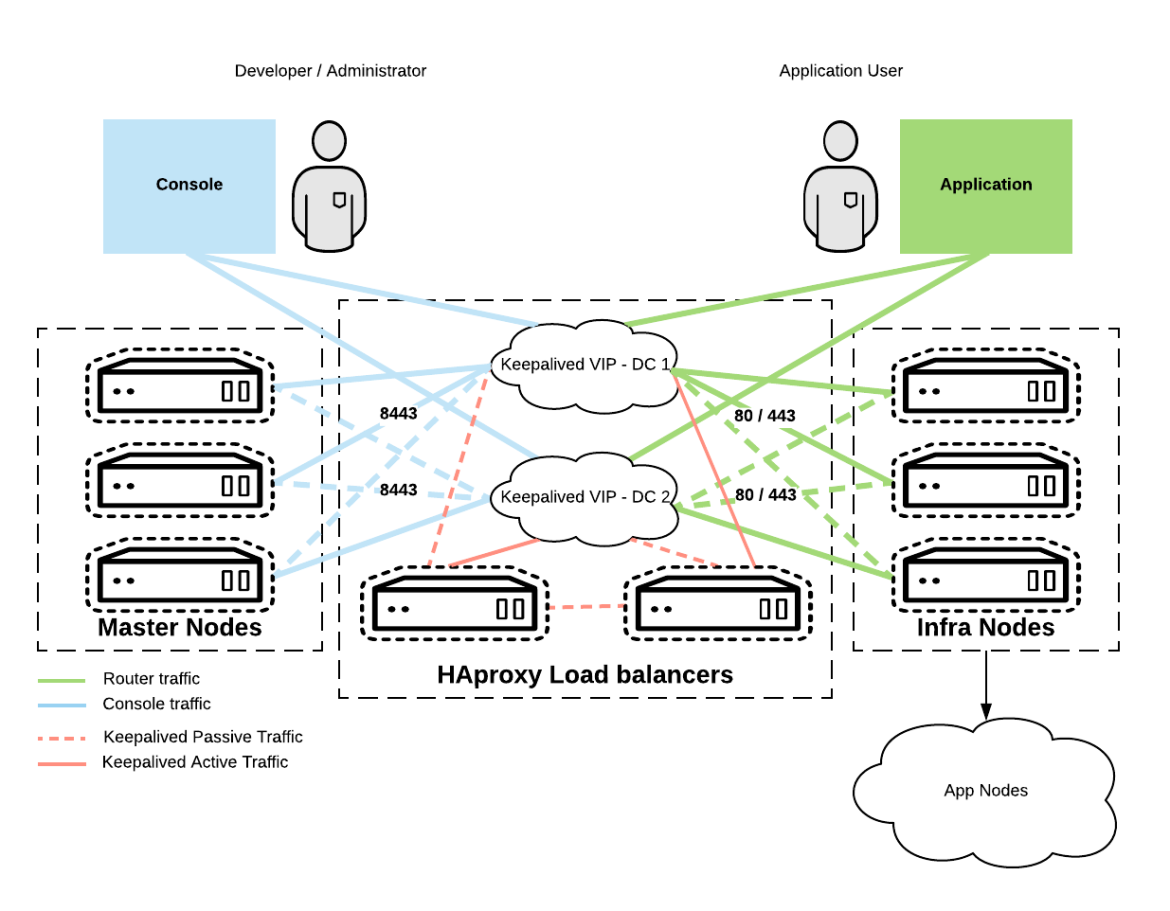

HAProxy Configuration

To simulate geographically dispersed DNS, a single DNS name was given two A records, one for an endpoint in DC1 and one for an endpoint in DC2. Each address is a virtual IP (VIP) owned by the HAProxy node in that datacenter, and Keepalived monitors the nodes so that a VIP fails over to the other datacenter. The result resembles an active/active cluster configuration. Lastly, each HAProxy server prefers the service pool members in its home datacenter.

Example:

$ dig +noall +answer stretch-haproxy.example.com

stretch-haproxy.example.com. 1800 IN A 10.x.y.20

stretch-haproxy.example.com. 1800 IN A 10.x.y.21

Keepalived VIP Datacenter 1 - 10.x.y.20

Keepalived VIP Datacenter 2 - 10.x.y.21
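One way to express "prefer the home datacenter" in HAProxy is to list local servers normally and mark the remote datacenter's servers with the `backup` keyword, so they receive traffic only when no local server passes its health check. The sketch below prints such a backend; it is an assumption about how this could be done, not necessarily the configuration used in this test, and the backend name, port 8443 (assumed OpenShift 3.x master API port), and addresses are placeholders.

```shell
# Hypothetical sketch: print an HAProxy backend that prefers servers in the
# local datacenter and falls back to the remote datacenter's servers.
render_backend() {
  local name="$1" port="$2" local_ips="$3" remote_ips="$4" ip i
  printf 'backend %s\n' "$name"
  printf '    mode tcp\n'                # TCP passthrough (assumed for the master API)
  printf '    balance source\n'
  printf '    option allbackups\n'       # use all backups, not only the first one
  i=0
  for ip in $local_ips; do               # unquoted on purpose: space-separated list
    i=$((i + 1))
    printf '    server %s-local%d %s:%s check\n' "$name" "$i" "$ip" "$port"
  done
  i=0
  for ip in $remote_ips; do
    i=$((i + 1))
    printf '    server %s-remote%d %s:%s check backup\n' "$name" "$i" "$ip" "$port"
  done
}

# Usage on the DC1 HAProxy node (swap the two lists on the DC2 node):
#   render_backend ocp-api 8443 "10.x.y.31 10.x.y.32" "10.x.y.33"
```

Without `option allbackups`, HAProxy sends traffic only to the first available backup server.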

Figure 3 below highlights the HAProxy configuration used for the stretch cluster:

Figure 3.

Disaster Recovery Scenarios

As you can see in Figure 2, the test environment was an OpenShift deployment stretched across Datacenter 1 (DC1) and Datacenter 2 (DC2). In this case, DC1 and DC2 were connected by a metropolitan area network (MAN), so bandwidth was not restricted and latency was approximately 5 milliseconds RTT.

DC1 hosted two of the three OpenShift Container Platform (OCP) nodes running GlusterFS PODs, and DC2 hosted the third. The goal was to recover from an outage of DC1: persistent storage needed to keep working for read/write (RW) even though neither GlusterFS nor etcd has quorum in that scenario.

Several failure scenarios, based on conversations with users, were reviewed with the goal of covering recovery of infrastructure, applications, and the OpenShift control plane. The following sections describe some of these failure scenarios in more detail.

Datacenter 2 Failure: Quorum Remains

If DC2 fails, DC1 remains online and fully operational, although both GlusterFS and etcd lose one member. Any workloads running in DC2 are rescheduled to DC1.

The etcd cluster remains RW, so the OpenShift cluster stays responsive. The example MySQL POD stays online if it is running in DC1 and can continue to read and write its mounted GlusterFS volume. If it was running in DC2, it is eventually rescheduled to DC1 and mounts the same GlusterFS volume.

For storage, one of the three OpenShift nodes hosting GlusterFS PODs is offline, so every GlusterFS volume is in a degraded state but still accepts RW. Once DC2 is operational again, the replica of every volume in DC2 is healed with the data that changed during the outage.

Datacenter 1 Failure: Quorum Failure

If DC1 fails, DC2 remains responsive, but both GlusterFS and etcd lose quorum. Both services must be recovered before any persistent PODs can resume RW workloads. Workloads lost in DC1 are not rescheduled to DC2 until etcd is recovered. Once etcd is recovered, PODs using ephemeral storage become operational, but PODs using GlusterFS persistent storage remain in an Error state until storage is recovered as well.

Datacenter 1 Failure: Recovering etcd

NOTE: Backing up etcd is outside the scope of this article; see the OpenShift documentation for more information. The etcd recovery process is different for containerized etcd, so OpenShift installations later than 3.9 should also review the OpenShift documentation for their version.

First, etcd must be recovered: without etcd quorum, the cluster API no longer processes requests. The following commands create a RW single-node etcd cluster from the remaining etcd member:

Stop the services:

If etcd is an installed service (default OCP 3.9 and below)

# systemctl stop etcd.service

If etcd is a containerized service (default OCP 3.10 and above)

# mv /etc/origin/node/pods/etcd.yaml ~/etcd.yaml

Add the following line to the etcd.conf file:

# echo "ETCD_FORCE_NEW_CLUSTER=true" >> /etc/etcd/etcd.conf

Start the service. If etcd is an installed service (default OCP 3.9 and below):

# systemctl start etcd.service

Start the service. If etcd is a containerized service (default OCP 3.10 and above):

# mv ~/etcd.yaml /etc/origin/node/pods/etcd.yaml

Remove the line that was added above.

# sed -i '/^ETCD_FORCE_NEW_CLUSTER=/d' /etc/etcd/etcd.conf

Restart the service. If etcd is an installed service (default OCP 3.9 and below):

# systemctl restart etcd.service

Restart the service. If etcd is a containerized service (default OCP 3.10 and above):

# mv /etc/origin/node/pods/etcd.yaml ~/etcd.yaml && sleep 30

# mv ~/etcd.yaml /etc/origin/node/pods/etcd.yaml
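The recovery steps above can be collected into functions so they are applied consistently on the surviving master. This is a hypothetical sketch, not a supported tool: it treats etcd as containerized when the static pod manifest exists, uses delete-then-append so a re-run never leaves duplicate ETCD_FORCE_NEW_CLUSTER lines, and the environment-variable overrides and wait times are assumptions added for illustration.

```shell
# Sketch: force the surviving etcd member to form a new single-member cluster.
# ETCD_CONF, ETCD_MANIFEST, MANIFEST_BACKUP and SETTLE_SECONDS are hypothetical
# overrides; the defaults follow the paths used in this article.
ETCD_CONF="${ETCD_CONF:-/etc/etcd/etcd.conf}"
ETCD_MANIFEST="${ETCD_MANIFEST:-/etc/origin/node/pods/etcd.yaml}"
MANIFEST_BACKUP="${MANIFEST_BACKUP:-/root/etcd.yaml}"
SETTLE_SECONDS="${SETTLE_SECONDS:-30}"

# Containerized (static pod) if the manifest, or its parked copy, exists.
etcd_is_static_pod() {
  [ -f "$ETCD_MANIFEST" ] || [ -f "$MANIFEST_BACKUP" ]
}

stop_etcd() {
  if etcd_is_static_pod; then
    # Moving the manifest out of the pods directory makes the kubelet stop etcd.
    if [ -f "$ETCD_MANIFEST" ]; then mv "$ETCD_MANIFEST" "$MANIFEST_BACKUP"; fi
  else
    systemctl stop etcd.service
  fi
}

start_etcd() {
  if etcd_is_static_pod; then
    if [ -f "$MANIFEST_BACKUP" ]; then mv "$MANIFEST_BACKUP" "$ETCD_MANIFEST"; fi
  else
    systemctl start etcd.service
  fi
}

# Delete-then-append keeps exactly one line, even if the step is re-run.
set_force_new_cluster() {
  sed -i '/^ETCD_FORCE_NEW_CLUSTER=/d' "$ETCD_CONF"
  echo "ETCD_FORCE_NEW_CLUSTER=true" >> "$ETCD_CONF"
}

clear_force_new_cluster() {
  sed -i '/^ETCD_FORCE_NEW_CLUSTER=/d' "$ETCD_CONF"
}

recover_single_member() {
  stop_etcd
  set_force_new_cluster
  start_etcd
  sleep "$SETTLE_SECONDS"   # assumed wait for etcd to start as a one-member cluster
  clear_force_new_cluster
  stop_etcd
  sleep "$SETTLE_SECONDS"   # lets the kubelet remove the static pod before restart
  start_etcd
}
```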

The cluster should now be operational, and oc commands should respond normally:

# source /etc/etcd/etcd.conf

# etcdctl --cert-file=$ETCD_PEER_CERT_FILE --key-file=$ETCD_PEER_KEY_FILE \
  --ca-file=/etc/etcd/ca.crt --endpoints=$ETCD_LISTEN_CLIENT_URLS cluster-health

member 59df5107484b84df is healthy: got healthy result from https://192.168.1.2:2379

cluster is healthy

# oc get nodes

..output omitted..
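Checking the cluster-health output by eye works for a single member; a small helper makes the check scriptable and reusable after failback, when more members are expected. This hypothetical sketch reads `etcdctl ... cluster-health` output on stdin and relies on the exact wording shown above ("is healthy", "cluster is healthy"), which is an assumption that may not hold for other etcdctl versions.

```shell
# Hypothetical helper: succeed only if the cluster is reported healthy and at
# least EXPECTED members are healthy. Usage: ... cluster-health | check_etcd_health 1
check_etcd_health() {
  local expected="$1" output healthy
  output="$(cat)"
  # " is healthy" does not match " is unhealthy", so only healthy members count
  healthy="$(printf '%s\n' "$output" | grep -c '^member .* is healthy')"
  printf '%s\n' "$output" | grep -qx 'cluster is healthy' && [ "$healthy" -ge "$expected" ]
}
```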

Datacenter 1 Failure: Recovering GlusterFS

Second, GlusterFS volumes must be recovered for workloads with persistent storage. With quorum lost, all volumes are read-only (RO). To restore RW access, the following options must be added to every existing GlusterFS volume:

cluster.quorum-type=fixed

cluster.quorum-count=1

To set them on a single volume, open a shell in a GlusterFS POD, list the volumes, and set both options:

# oc get pod -n app-storage | grep glusterfs

glusterfs-storage-cnvpj 1/1 Running 0 1d

# oc rsh -n app-storage glusterfs-storage-cnvpj

sh-4.2# gluster vol list

glusterfs-registry-volume

heketidbstorage

vol_c1381534e3779fe2fad1e52b0f1ad148

...

sh-4.2# gluster volume set vol_c1381534e3779fe2fad1e52b0f1ad148 cluster.quorum-type fixed

sh-4.2# gluster volume set vol_c1381534e3779fe2fad1e52b0f1ad148 cluster.quorum-count 1

Alternatively, all of the existing GlusterFS volumes can be recovered with the following loop:

sh-4.2# for vol in $(gluster vol list); do gluster vol set $vol cluster.quorum-type fixed && gluster vol set $vol cluster.quorum-count 1 ; done
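The loop above does not confirm its result. During a recovery it can help to read each option back; in this hypothetical sketch (function name and GLUSTER override are illustrative), `gluster volume get` verifies the effective quorum type on every volume. It is meant to run inside a GlusterFS POD and takes the same values used in this article for both failover and failback.

```shell
# Hypothetical helper: set client-quorum options on every GlusterFS volume and
# confirm the new quorum-type. GLUSTER can point at a stub for testing.
GLUSTER="${GLUSTER:-gluster}"

set_quorum_all() {
  local qtype="$1" qcount="$2" vol rc=0
  for vol in $("$GLUSTER" volume list); do
    if ! { "$GLUSTER" volume set "$vol" cluster.quorum-type "$qtype" &&
           "$GLUSTER" volume set "$vol" cluster.quorum-count "$qcount"; } >/dev/null; then
      echo "ERROR: could not update $vol" >&2
      rc=1
      continue
    fi
    # `volume get` prints an "Option  Value" table; check the reported value.
    if ! "$GLUSTER" volume get "$vol" cluster.quorum-type |
         grep -Eq "^cluster\.quorum-type[[:space:]]+${qtype}([[:space:]]|\$)"; then
      echo "WARN: $vol does not report cluster.quorum-type $qtype" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Failover (DC1 lost):   set_quorum_all fixed 1
# Failback (DC1 back):   set_quorum_all auto 3
```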

Now, all workloads originally in DC1 will become operational in DC2 with RW access to GlusterFS persistent storage. All workloads running in DC2 should also now be online and functional.

Datacenter 1 Failback: etcd and GlusterFS

After DC1 is fully operational again, etcd and GlusterFS must be restored to their original state before the failure of DC1.

The etcd node recovery process is different for containerized etcd; OpenShift installations later than 3.9 should also review the OpenShift documentation for their version.

NOTE: These steps should be performed on the nodes that need to be restored.

Stop the services:

If etcd is an installed service (default OCP 3.9 and below)

# systemctl stop etcd.service

If etcd is a containerized service (default OCP 3.10 and above)

# mv /etc/origin/node/pods/etcd.yaml ~/etcd.yaml

Add each recovered etcd node back with etcdctl. The -C option must point to the running etcd member:

# etcdctl -C https://192.168.1.2:2379 --cert-file /etc/etcd/peer.crt --key-file /etc/etcd/peer.key --ca-file /etc/etcd/ca.crt member add master2.example.com https://192.168.1.3:2380

Added member named master2.example.com with ID 771d56e9a13a9ad2 to cluster

ETCD_NAME="master2.example.com"

ETCD_INITIAL_CLUSTER="master2.example.com=https://192.168.1.3:2380,master-1.example.com=https://192.168.1.2:2380"

ETCD_INITIAL_CLUSTER_STATE="existing"

Remove any existing etcd data:

# rm -Rf /var/lib/etcd/*

Add the name back to the etcd.conf file (this may be unchanged):

# sed -i '/^ETCD_NAME=/d' /etc/etcd/etcd.conf

# echo "ETCD_NAME=master2.example.com" >> /etc/etcd/etcd.conf

Add the ETCD_INITIAL_CLUSTER value returned by the etcdctl member add command to the etcd.conf file for that node:

# sed -i '/^ETCD_INITIAL_CLUSTER=/d' /etc/etcd/etcd.conf

# echo "ETCD_INITIAL_CLUSTER=master2.example.com=https://192.168.1.3:2380,master-1.example.com=https://192.168.1.2:2380" >> /etc/etcd/etcd.conf

Lastly, tell the node that it is joining an existing cluster:

# sed -i '/^ETCD_INITIAL_CLUSTER_STATE=/d' /etc/etcd/etcd.conf

# echo "ETCD_INITIAL_CLUSTER_STATE=existing" >> /etc/etcd/etcd.conf

Start the service. If etcd is an installed service (default OCP 3.9 and below):

# systemctl restart etcd.service

Start the service. If etcd is a containerized service (default OCP 3.10 and above):

# mv ~/etcd.yaml /etc/origin/node/pods/etcd.yaml

The cluster should now show the newly added member:

# source /etc/etcd/etcd.conf

# etcdctl --cert-file=$ETCD_PEER_CERT_FILE --key-file=$ETCD_PEER_KEY_FILE \
  --ca-file=/etc/etcd/ca.crt --endpoints=$ETCD_LISTEN_CLIENT_URLS cluster-health

member 59df5107484b84df is healthy: got healthy result from https://192.168.1.2:2379

member 771d56e9a13a9ad2 is healthy: got healthy result from https://192.168.1.3:2379

cluster is healthy

NOTE: Perform this step for each restored etcd node.
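The sed/echo pairs above copy values by hand from the `etcdctl member add` output. A hypothetical helper (not part of etcdctl) can apply those three ETCD_* lines directly, replacing existing values so exactly one line per key remains. This is a sketch that assumes the output format shown above.

```shell
# Replace KEY in CONF (or append it) so exactly one KEY=VALUE line remains.
set_conf_var() {
  local conf="$1" key="$2" value="$3"
  sed -i "/^${key}=/d" "$conf"
  printf '%s=%s\n' "$key" "$value" >> "$conf"
}

# Read `member add` output on stdin and apply the three ETCD_* settings.
apply_member_add_output() {
  local conf="$1" line key value
  while IFS= read -r line; do
    case "$line" in
      ETCD_NAME=*|ETCD_INITIAL_CLUSTER=*|ETCD_INITIAL_CLUSTER_STATE=*)
        key="${line%%=*}"                          # text before the first '='
        value="${line#*=}"                         # text after the first '='
        value="${value#\"}"; value="${value%\"}"   # drop surrounding quotes
        set_conf_var "$conf" "$key" "$value"
        ;;
    esac
  done
}

# Usage on the node being restored, in place of the sed/echo pairs:
#   etcdctl -C https://192.168.1.2:2379 --cert-file /etc/etcd/peer.crt \
#     --key-file /etc/etcd/peer.key --ca-file /etc/etcd/ca.crt \
#     member add master2.example.com https://192.168.1.3:2380 \
#     | apply_member_add_output /etc/etcd/etcd.conf
```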

If the GlusterFS PODs in DC1 do not start, it may be necessary to add the glusterfs=storage-host or glusterfs=registry-host label back to the OCP nodes in DC1 that were offline.
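Re-applying a label is a single `oc label` call per node. The sketch below wraps it in a hypothetical helper; the node names are placeholders, and `--overwrite` lets the command replace an existing value of the label.

```shell
# Hypothetical sketch: re-apply a GlusterFS node-selector label to DC1 nodes.
# OC can point at a stub for testing.
OC="${OC:-oc}"

# relabel_gluster_nodes LABEL NODE...
relabel_gluster_nodes() {
  local label="$1" node
  shift
  for node in "$@"; do
    "$OC" label node "$node" "$label" --overwrite
  done
}

# Usage (placeholder node names):
#   relabel_gluster_nodes glusterfs=storage-host dc1-node1.example.com dc1-node2.example.com
#   oc get pods -n app-storage -o wide | grep glusterfs   # PODs should be scheduled again
```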

Next, now that the GlusterFS PODs are back online, the GlusterFS volume settings need to be set back to default as shown below.

cluster.quorum-type=auto

cluster.quorum-count=3

sh-4.2# for vol in $(gluster vol list); do gluster vol set $vol cluster.quorum-type auto && gluster vol set $vol cluster.quorum-count 3 ; done

After DC1 is returned to an operational state, all GlusterFS volume changes will be copied to the DC1 volume replicas thereby healing the cluster.
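Healing progress can be followed with `gluster volume heal <volume> info`, which lists the entries still waiting to be healed on each brick. The sketch below (function names hypothetical) totals those counts per volume and succeeds only when every volume reports zero; it assumes the "Number of entries:" line format of recent GlusterFS releases.

```shell
# Hypothetical sketch: estimate the remaining self-heal backlog on each volume.
# GLUSTER can point at a stub for testing.
GLUSTER="${GLUSTER:-gluster}"

# Sum "Number of entries:" across all bricks in heal-info output from stdin.
# A disconnected brick may report "-", which awk counts as 0, so also check
# the "Status:" lines before trusting a zero.
pending_heal_entries() {
  awk -F': *' '/^Number of entries:/ { n += $2 } END { print n + 0 }'
}

# Print the backlog per volume; succeed only when every volume reports 0.
report_heal_backlog() {
  local vol n total=0
  for vol in $("$GLUSTER" volume list); do
    n="$("$GLUSTER" volume heal "$vol" info | pending_heal_entries)"
    echo "$vol: $n entries pending heal"
    total=$((total + n))
  done
  [ "$total" -eq 0 ]
}

# Usage, from inside a GlusterFS POD; re-run until it succeeds:
#   report_heal_backlog
```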

Thoughts and Conclusions

As outlined in this article, the Metro Stretch Cluster solution does provide disaster recovery. However, given your organization's requirements for Recovery Time Objective (RTO) and Recovery Point Objective (RPO), extensive testing and validation must be done before implementation.

Also, not all use cases are a good fit for this scenario. The manual recovery process for both etcd and RHOCS adds operational overhead at a time of crisis. Application performance is another operational concern: if the cluster is heavily utilized with limited capacity and headroom, the increased workload in the surviving datacenter after failover and recovery could cause unacceptable service levels and unhappy customers.

This post explains the pros and cons of stretching an OpenShift cluster across datacenters. Kubernetes Federation is around the corner, but until it is released, the design described above can serve as an acceptable stopgap. This article provides requirements, considerations, and conclusions for implementing this design; weigh them against your own application use cases and architectures when making deployment decisions.

Plan the cluster design appropriately. At the moment, there is no transition from a stretch cluster to multi-cluster with Federation.