Red Hat blog

Kubeflow is an open source machine learning toolkit for Kubernetes. It bundles popular ML/DL frameworks such as TensorFlow, MXNet, and PyTorch, plus tools such as the Katib hyperparameter tuner, with a single deployment binary. By running Kubeflow on Red Hat OpenShift Container Platform, you can quickly operationalize a robust machine learning pipeline. However, the software stack is only part of the picture. You also need high-performance servers, storage, and accelerators to deliver the stack’s full capability. To that end, Dell EMC and Red Hat’s Artificial Intelligence Center of Excellence recently collaborated on two whitepapers about sizing hardware for Kubeflow on OpenShift.

The first whitepaper is called “Machine Learning Using the Dell EMC Ready Architecture for Red Hat OpenShift Container Platform.” It describes how to deploy Kubeflow 0.5 and OpenShift Container Platform 3.11 on Dell PowerEdge servers. The paper builds on Dell’s Ready Architecture for OpenShift Container Platform 3.11, a prescriptive architecture for running OpenShift Container Platform on Dell hardware. The ready architecture includes a bill of materials for ordering the exact servers, storage, and switches it uses. The machine learning whitepaper extends the ready architecture with workload-specific recommendations and settings. It also includes instructions for configuring OpenShift and validating Kubeflow with a distributed TensorFlow training job.
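As a rough illustration of what a distributed TensorFlow job relies on: Kubeflow's TFJob operator tells each replica its place in the cluster through the `TF_CONFIG` environment variable. The sketch below is not taken from the whitepaper. It parses a `TF_CONFIG` value in plain Python, and the host names and the `describe_replica` helper are hypothetical.

```python
import json


def describe_replica(tf_config_json: str) -> str:
    """Summarize this replica's role from a TF_CONFIG JSON string."""
    config = json.loads(tf_config_json)
    cluster = config.get("cluster", {})
    task = config.get("task", {})
    # Count the hosts listed for each role (for example "ps" and "worker").
    counts = ", ".join(
        f"{len(hosts)} {role}" for role, hosts in sorted(cluster.items())
    )
    return f"{task.get('type')}[{task.get('index')}] in cluster with {counts}"


# Hypothetical example value. In a real job the operator sets TF_CONFIG
# for each pod, and you would read it from os.environ["TF_CONFIG"].
EXAMPLE_TF_CONFIG = json.dumps({
    "cluster": {
        "ps": ["demo-ps-0:2222"],
        "worker": ["demo-worker-0:2222", "demo-worker-1:2222"],
    },
    "task": {"type": "worker", "index": 1},
})

print(describe_replica(EXAMPLE_TF_CONFIG))
```

Logging a summary like this from every replica is one simple way to confirm that all parameter servers and workers were scheduled with the roles you expected.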

Kubeflow is developed on upstream Kubernetes, which lacks many of the security features enabled in OpenShift Container Platform by default. Several of OpenShift Container Platform’s default security controls are relaxed in this whitepaper to get Kubeflow up and running. Additional steps might be required to meet your organization’s security standards for running Kubeflow on OpenShift Container Platform in production. These steps may include defining cluster roles with appropriate permissions for the Kubeflow services, adding finalizers to Kubeflow resources for reconciliation, and creating liveness probes for Kubeflow pods.

The second whitepaper is called “Executing ML/DL Workloads Using Red Hat OpenShift Container Platform v3.11.” It explains how to leverage NVIDIA GPUs with Kubeflow for best performance on inferencing and training jobs. The hardware profile used in this whitepaper is similar to the ready architecture used in the first paper, except that the servers are outfitted with NVIDIA Tesla GPUs. The architecture uses two GPU models. The OpenShift worker nodes have NVIDIA Tesla T4 GPUs. Based on the Turing architecture, the T4s deliver excellent inference performance in a 70-watt power profile. The storage nodes have NVIDIA Tesla V100 GPUs. The V100 is a state-of-the-art data center GPU. Based on the Volta architecture, the V100s are deep learning workhorses for both training and inference.
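To make the GPU scheduling side concrete, here is a minimal sketch of a pod manifest that asks Kubernetes for a GPU. It assumes the NVIDIA device plugin is installed on the cluster and advertises the `nvidia.com/gpu` extended resource. The pod name, container name, image, and the `gpu_pod_manifest` helper are hypothetical and do not come from the whitepaper.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int = 1) -> dict:
    """Build a pod manifest that requests whole GPUs via resource limits."""
    if gpus < 1:
        raise ValueError("gpus must be at least 1")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [{
                "name": "trainer",  # hypothetical container name
                "image": image,
                # GPUs are requested as an extended resource under limits.
                "resources": {"limits": {"nvidia.com/gpu": gpus}},
            }],
        },
    }


# Hypothetical pod name and image, used only for illustration.
manifest = gpu_pod_manifest("gpu-smoke-test", "example.com/tf-benchmarks:latest")
print(json.dumps(manifest, indent=2))
```

Steering a job to the T4 nodes or the V100 nodes would additionally need a node selector or similar constraint. The node labels for that are site-specific, so they are left out here.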

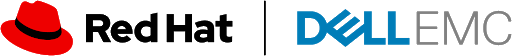

The researchers compared the GPU models when training the ResNet-50 TensorFlow benchmark, as shown in the figure above. Not surprisingly, the Tesla V100s outperformed the T4s when training. They have at least double the compute resources, in both FP64 throughput and Tensor Core count, along with higher memory bandwidth due to the HBM2 memory subsystem. But the T4s should give better performance per watt than the V100s when running less floating-point-intensive tasks, particularly inferencing in mixed precision.
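The performance-per-watt argument comes down to simple arithmetic, sketched below. The 70-watt figure for the T4 is the one quoted earlier. The 250-watt figure for the V100 is an assumption (the board power of the PCIe variant) and is not stated in this post. No measured throughput numbers are used, and the helper names are hypothetical.

```python
T4_POWER_WATTS = 70.0     # power profile quoted in this post
V100_POWER_WATTS = 250.0  # ASSUMPTION: PCIe V100 board power, not from this post


def perf_per_watt(throughput: float, power_watts: float) -> float:
    """Throughput (for example images/sec) delivered per watt of board power."""
    if power_watts <= 0:
        raise ValueError("power_watts must be positive")
    return throughput / power_watts


def break_even_speedup(low_power_watts: float, high_power_watts: float) -> float:
    """How many times faster the higher-power GPU must be to match the
    lower-power GPU's performance per watt."""
    if low_power_watts <= 0 or high_power_watts <= 0:
        raise ValueError("power values must be positive")
    return high_power_watts / low_power_watts


speedup = break_even_speedup(T4_POWER_WATTS, V100_POWER_WATTS)
print(f"V100 must be {speedup:.2f}x faster than a T4 to tie on perf per watt")
```

Under that power assumption, a V100 has to be a little over 3.5 times faster than a T4 on a given workload before it matches the T4's performance per watt. Plug in your own measured throughput with `perf_per_watt` to compare the two directly.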

These whitepapers make it easier for you to select hardware for running Kubeflow on premises. Dell and Red Hat are continuing to collaborate on updating these documents to cover the latest version of Kubeflow and OpenShift Container Platform 4.

About the author

Red Hatter since 2018, tech historian, founder of themade.org, serial non-profiteer.