Red Hat blog

Red Hat OpenShift Container Platform is changing the way that clusters are installed, and the way those resulting clusters are structured. When the Kubernetes project began, there were no extension mechanisms. Over the last four-plus years, we have devoted significant effort to producing extension mechanisms, and they are now mature enough for us to build systems upon. This post is about what that new deployment topology looks like, how it is different from what came before, and why we’re making such a significant change.

Pre-managed (3.x) Topology

In 3.x, we developed a traditional product deployment topology, driven in large part by the fact that Kubernetes, at the time, had no extension mechanism. In this topology, the control plane components are installed onto hosts, then started on the hosts to provide the platform to other components and the end user workloads. This matched the general expectations for running enterprise software, and allowed us to build a traditional installer, but it forced us to make a few compromises that ended up making things more difficult for the user.

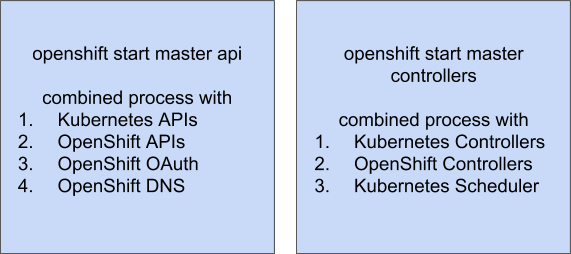

Without an extension mechanism, we combined OpenShift-specific control plane components and Kubernetes control plane components into single binaries. While they are externally compatible and seemingly simple, this layout can be confusing if you are used to a “standard” Kubernetes deployment.

Managed (4.x) Topology

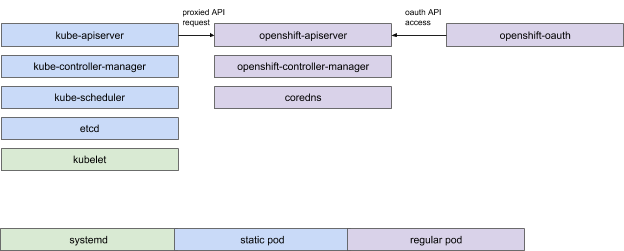

Kubernetes now has a robust set of extension mechanisms suitable for different needs, and in Red Hat OpenShift Container Platform 4 we decided to make use of them. The result is a topology that has a lot more pieces, but each piece is a discrete unit of function focused on doing a single thing well and running on the platform.

Don’t panic. Separating out all these individual units initially seems like a big step backwards in terms of complexity, but the best measure of a system isn’t simplicity, it is understandability. One binary doing a single thing, failing independently, and clearly reporting that failure is easier to administer than anything that doesn’t.

Improved reliability

Having a binary doing a single thing makes determining the health of that binary much easier than if that binary is performing multiple functions. This is such a core principle of Kubernetes that we included it in the bedrock of pods: health checks.
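
To make the difference concrete, here is a minimal sketch in Python. It is not OpenShift code: the check functions, the component names, and the simulated failure are all hypothetical stand-ins. It only illustrates how a combined binary collapses several functions into one health answer, while separate binaries each report their own.

```python
from typing import Callable, Dict

# Hypothetical checks: each returns True while its one function is working.
Checks = Dict[str, Callable[[], bool]]


def combined_health(checks: Checks) -> bool:
    """One binary, many functions: a probe sees a single pass/fail answer."""
    return all(check() for check in checks.values())


def per_component_health(checks: Checks) -> Dict[str, bool]:
    """One binary per function: each unit reports its own health."""
    return {name: check() for name, check in checks.items()}


# Illustrative component names with one simulated failure.
checks: Checks = {
    "api-server": lambda: True,
    "controller-manager": lambda: False,  # simulated failure
    "scheduler": lambda: True,
}

print(combined_health(checks))       # a single answer for everything
print(per_component_health(checks))  # one answer per component
```

The combined result says only that something is wrong. The per-component result says which unit is wrong, which is the information a health check needs in order to act safely.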

If you have a combined binary, it can be scary to report it as unhealthy and restart it: if only part of it is unhealthy, you could do more harm than good to your operations. By separating different functions into separate binaries, we are able to turn discrete components off and back on again without side effects.

Bugs happen. When a particular unit of function inevitably starts to fail, it can no longer bring down unrelated functions by crashing a combined binary.
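
As a rough illustration of that isolation, the Python sketch below models a supervisor that restarts only the units reporting unhealthy. The `Unit` class, the `reconcile` function, and the unit names are hypothetical, and the sketch assumes a restart clears the fault. It is a toy model of the idea, not how the platform itself is implemented.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Unit:
    """Hypothetical single-purpose component tracked by a supervisor."""
    name: str
    healthy: bool = True
    restarts: int = 0


def reconcile(units: List[Unit]) -> List[str]:
    """Restart only the units that report unhealthy; return their names."""
    restarted = []
    for unit in units:
        if not unit.healthy:
            unit.restarts += 1
            unit.healthy = True  # assumption: a restart clears the fault
            restarted.append(unit.name)
    return restarted


# Illustrative unit names; one is marked as failing.
units = [Unit("dns"), Unit("registry"), Unit("console")]
units[1].healthy = False

print(reconcile(units))
print([(u.name, u.restarts) for u in units])
```

Only the failing unit is restarted, and the restart counts of its neighbours stay at zero. With a combined binary, the same fault would have forced all three functions to restart together.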

Faster fixes

By separating components, the interactions between them are reduced to API interactions, making the system more approachable for developers. When changes are made to any one binary, the developer can be confident about the state of the other systems in play during testing and during production rollouts, allowing them to isolate change-related problems faster. This gives us faster and more focused fixes for bugs.

More like upstream Kubernetes

Separating our topology makes OpenShift’s relationship to Kubernetes much clearer, specifically as a set of security-related customizations and then a set of extensions that provide the additional experience. This separation reduces the barrier to switching vendors, and eases common challenges to adopting OpenShift.

About the author

Red Hatter since 2018, tech historian, founder of themade.org, serial non-profiteer.