Red Hat blog

This is the second part of a two-part blog series discussing software deployment options on OpenShift leveraging Helm and Operators. In the previous part we discussed the general differences between the two technologies. In this part we look specifically at the advantages and disadvantages of deploying a Helm chart directly via the helm tooling or via an Operator.

Part II - Helm charts and Helm-based Operators

Users who have already invested in Helm to package their software stack now have multiple options to deploy it on OpenShift. With the Operator SDK, Helm users have a supported option to build an Operator from their charts and use it to create instances of that chart by leveraging a Custom Resource Definition. With Helm v3 in Tech Preview and helm binaries shipped by Red Hat, users and software maintainers can now also use helm directly on an OpenShift cluster.

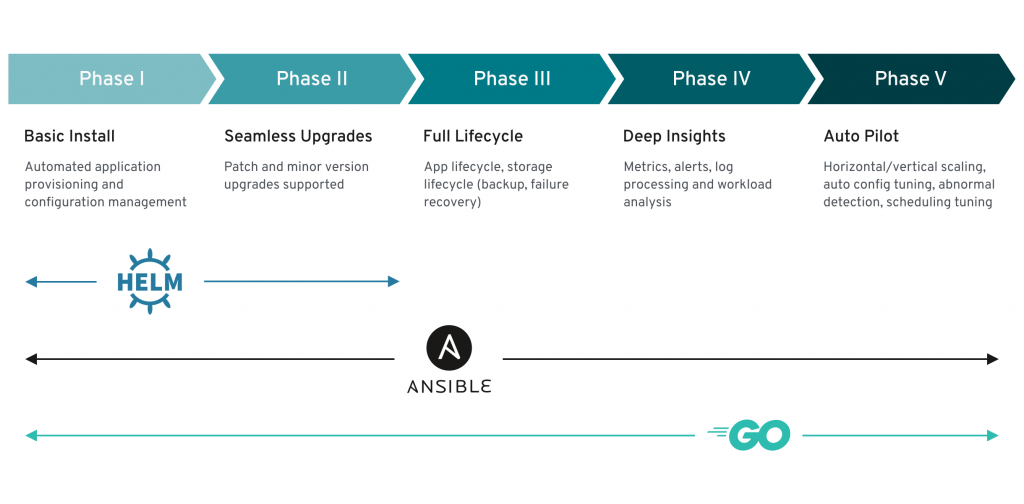

When comparing Helm charts and Helm-based Operators, in principle the same considerations as outlined in the first part of this series apply. The caveat is that, initially, a Helm-based Operator does not possess more advanced lifecycle capabilities than the standalone chart itself. There are, however, still advantages.

Your Helm Chart as an Operator

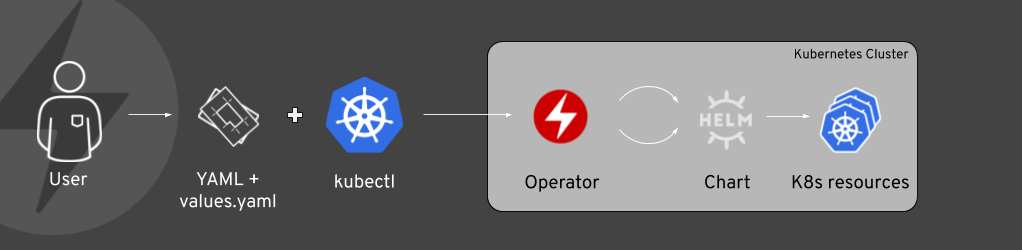

With Helm-based Operators, a Kubernetes-native interface exists for users on the cluster to create Helm releases. Using a Custom Resource, an instance of the chart can be created in a namespace and configured through the properties of the resource. The allowed properties and values are the same as in the values.yaml of the chart, so users familiar with the chart don’t need to learn anything new. Since a Helm-based Operator internally uses the Helm libraries for rendering, any type of chart and any Helm feature is supported. Users of the Operator, however, don’t need the helm CLI to be installed; kubectl alone is enough to create an instance. Consider the following example:

    apiVersion: charts.helm.k8s.io/v1alpha1
    kind: Cockroachdb
    metadata:
      name: example
    spec:
      Name: cdb
      Image: cockroachdb/cockroach
      ImageTag: v19.1.3
      Replicas: 3
      MaxUnavailable: 1
      Component: cockroachdb
      InternalGrpcPort: 26257
      ExternalGrpcPort: 26257
      InternalGrpcName: grpc
      ExternalGrpcName: grpc
      InternalHttpPort: 8080
      ExternalHttpPort: 8080
      HttpName: http
      Resources:
        requests:
          cpu: 500m
          memory: 512Mi
      Storage: 10Gi
      StorageClass: null
      CacheSize: 25%
      MaxSQLMemory: 25%
      ClusterDomain: cluster.local
      UpdateStrategy:
        type: RollingUpdate

The Custom Resource Cockroachdb is owned by the CockroachDB operator which has been created using the CockroachDB helm chart. The entire .spec section can essentially be a copy and paste from the values.yaml of the chart. Any value supported by the chart can be used here. Values that have a default are optional.
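In a project generated with the Operator SDK, the link between the Custom Resource and the chart is typically declared in a `watches.yaml` file. A minimal sketch for the example above might look like the following. The chart path is an assumption and depends on how the project was scaffolded.

```yaml
# watches.yaml (sketch): maps a Custom Resource kind to the chart the Operator renders.
- group: charts.helm.k8s.io
  version: v1alpha1
  kind: Cockroachdb
  # Assumed location of the chart inside the Operator project; adjust to your layout.
  chart: helm-charts/cockroachdb
```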

The Operator will transparently create a release in the same namespace where the Custom Resource is placed. Updates to this object cause the deployed Helm release to be updated automatically. This is in contrast to Helm v3, where this flow originates from the client side and installing and upgrading a release are two distinct commands.
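As an illustration, scaling the example instance could be as small as re-applying the manifest with one changed value. This is a sketch only. It assumes that every value omitted from `.spec` should take the chart default.

```yaml
apiVersion: charts.helm.k8s.io/v1alpha1
kind: Cockroachdb
metadata:
  name: example
spec:
  # Only values that differ from the chart defaults need to be listed.
  ImageTag: v19.1.3
  Replicas: 5   # previously 3; the Operator updates the existing release accordingly
```

Applying this file with kubectl works both for the initial creation and for later updates, so there is no separate install and upgrade step from the user's point of view.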

While a Helm-based Operator does not magically extend the lifecycle management capabilities of Helm, it does provide a more native Kubernetes experience to end users, who interact with charts as they would with any other Kubernetes resource.

Everything concerning an instance of a Helm chart is consolidated behind a Custom Resource. As such, access to these resources can be restricted via standard Kubernetes RBAC, so that only entitled users can deploy certain software, irrespective of their other privileges in a given namespace. Through tools like the Operator Lifecycle Manager (OLM), a selection of vetted charts can be presented as a curated catalog of Helm-based Operators.
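A minimal sketch of such a restriction, using only standard RBAC objects, could look like this. The role, namespace, and group names are hypothetical, and the plural resource name `cockroachdbs` is an assumption about how the CRD is registered.

```yaml
# Role that allows managing Cockroachdb custom resources in a single namespace.
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: cockroachdb-editor          # hypothetical name
  namespace: databases              # hypothetical namespace
rules:
  - apiGroups: ["charts.helm.k8s.io"]
    resources: ["cockroachdbs"]     # assumed plural name of the Cockroachdb CRD
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
---
# Bind the role to the group that is entitled to deploy CockroachDB.
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: cockroachdb-editor-binding  # hypothetical name
  namespace: databases
subjects:
  - kind: Group
    name: db-admins                 # hypothetical group
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: cockroachdb-editor
  apiGroup: rbac.authorization.k8s.io
```

Users outside this group cannot create a Cockroachdb instance in the namespace, even if they are allowed to create other resource types there.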

Resiliency and Simplicity

As the Helm-based Operator constantly reconciles releases, manual changes to chart resources are automatically rolled back and configuration drift is prevented. This is different from using helm directly, where deleted objects are not detected and modified chart resources are only merged, not rolled back. Even that merge does not happen until a user runs the helm utility again. Dealing with Kubernetes Custom Resources may also be the easier choice in GitOps workflows where only kubectl tooling is present.

When installed through the Operator Lifecycle Manager, a Helm-based Operator can also leverage the services of other Operators by expressing a dependency on them. Manifests containing Custom Resources owned by other Operators can simply be made part of the chart. For example, the above manifest creating a CockroachDB instance could be shipped as part of another Helm chart that deploys an application writing to this database.
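As a sketch, such an application chart might carry a template like the one below. The file name and the `database.replicas` and `database.storage` values are hypothetical and would need to be defined in the application chart's values.yaml.

```yaml
# templates/database.yaml in a hypothetical application chart.
# Assumes the CockroachDB Operator and its CRD are already present on the cluster.
apiVersion: charts.helm.k8s.io/v1alpha1
kind: Cockroachdb
metadata:
  name: {{ .Release.Name }}-db
spec:
  # Hypothetical values exposed by the application chart.
  Replicas: {{ .Values.database.replicas }}
  Storage: {{ .Values.database.storage | quote }}
```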

When such charts are converted to an Operator as well, OLM will take care of installing the dependency automatically, whereas with Helm this is the responsibility of the user. This is also true for any dependencies expressed on the cluster itself, for example when the chart requires certain API or Kubernetes versions. These may even change over the lifetime of a release. While such out-of-band changes would go unnoticed by Helm itself, OLM constantly ensures that these requirements are fulfilled or clearly signals to the user when they are not.
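In OLM, such requirements are declared in the Operator's ClusterServiceVersion. The fragment below is a sketch under assumptions: the Operator name and the minimum Kubernetes version are illustrative, and the CRD name assumes the default plural form of the Cockroachdb kind.

```yaml
# Fragment of a ClusterServiceVersion for a hypothetical application Operator.
apiVersion: operators.coreos.com/v1alpha1
kind: ClusterServiceVersion
metadata:
  name: my-app-operator.v0.1.0                  # hypothetical
spec:
  minKubeVersion: 1.16.0                        # illustrative cluster requirement
  customresourcedefinitions:
    required:
      # OLM resolves an Operator that provides this API before installing this one.
      - name: cockroachdbs.charts.helm.k8s.io   # assumed CRD name
        version: v1alpha1
        kind: Cockroachdb
        displayName: CockroachDB
        description: Database instance used by the application
```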

Applying least-privilege principles

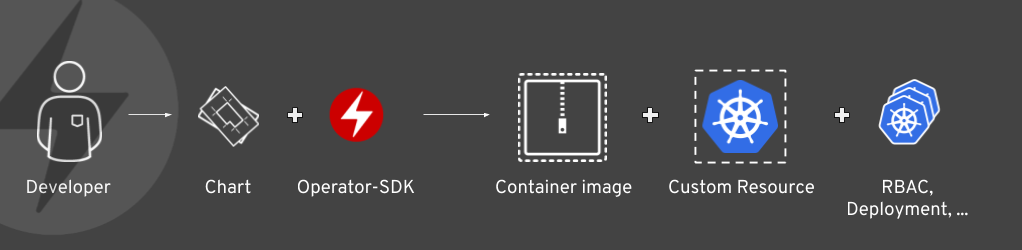

On the flip side, a new Helm-based Operator has to be created, published to a catalog and updated on the cluster whenever a new version of the chart becomes available. In order to avoid the security challenges Tiller had in Helm v2, the Operator should not run with global all-access privileges. Hence, the RBAC of the Operator is usually explicitly constrained by the maintainer according to the least-privilege principle.
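A constrained Role for the example Operator could be sketched as follows. The list of resource types is an assumption about what the chart creates and has to be checked against the rendered templates. The Role name is hypothetical.

```yaml
# Namespaced Role for the Operator instead of cluster-wide admin rights.
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: cockroachdb-operator        # hypothetical name
rules:
  # The custom resources the Operator reconciles.
  - apiGroups: ["charts.helm.k8s.io"]
    resources: ["cockroachdbs", "cockroachdbs/status"]   # assumed plural name
    verbs: ["get", "list", "watch", "update", "patch"]
  # Resource types the chart is assumed to create.
  - apiGroups: ["apps"]
    resources: ["statefulsets"]
    verbs: ["*"]
  - apiGroups: ["policy"]
    resources: ["poddisruptionbudgets"]
    verbs: ["*"]
  # Secrets are included because Helm v3 stores release data in them by default.
  - apiGroups: [""]
    resources: ["services", "configmaps", "secrets"]
    verbs: ["*"]
```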

The SDK attempts to generate the Operator’s RBAC rules automatically during conversion from a chart but manual tweaks might be required. The conversion process at a high level looks like this:

Restricted RBAC now applies to Helm v3: chart maintainers need to document the required RBAC for the chart to be deployed since it can no longer be assumed that cluster-admin privileges exist through Tiller.

Quite recently the Operator SDK moved to Helm v3. This is a transparent change for both users and chart maintainers. The SDK will automatically convert existing v2 releases to v3 once an updated Operator is installed.

Verdict

In summary: end users who have existing Helm charts at hand can now deploy them on OpenShift using helm tooling, assuming they have sufficient permissions. Software maintainers can now ship their Helm charts unchanged to OpenShift users as well.

Using the Operator SDK, they get more control over the user and admin experience by converting their chart to an Operator. While the resulting Operator ultimately deploys the chart in the same way the helm binary would, it fits in well with the rest of the cluster interaction by using just kubectl, Kubernetes APIs and proper RBAC, which also suits GitOps workflows. On top of that, there is transparent updating of installed releases and constant remediation of configuration drift.

Helm-based Operators also integrate well with other Operators through the use of OLM and its dependency model, avoiding re-inventing how certain software is deployed. Finally for ISVs, Helm-based Operators present an easy entry into the Operator ecosystem without any change required to the chart itself.