Red Hat blog

With the release of Red Hat OpenShift 4, the concept of User Provisioned Infrastructure (UPI) has emerged to encompass the environments where the infrastructure (compute, network and storage resources) that hosts the OpenShift Container Platform is deployed by the user. This allows for more creative deployments, while leaving the management of the infrastructure to the user. In this article, we use it to deploy OpenShift Container Platform 4.1 on a mix of virtual and bare metal machines.

A few words on why we are deploying on a mixed environment and not only bare metal or virtual machines. On one hand, virtual machines provide additional protection because they can be migrated live to avoid downtime during maintenance. They can also be automatically restarted in case of hypervisor failure, while bare metal machines mainly rely on backup/restore procedures. So, our most precious assets can benefit from running on virtual machines. Bare metal on the other hand provides raw power without the virtualization overhead and extra cost, and can also provide higher density. In the context of OpenShift, a hybrid deployment offers the security and high availability of virtualization for the control plane, with the power and lower cost of bare metal for the applications.

OpenShift Architecture

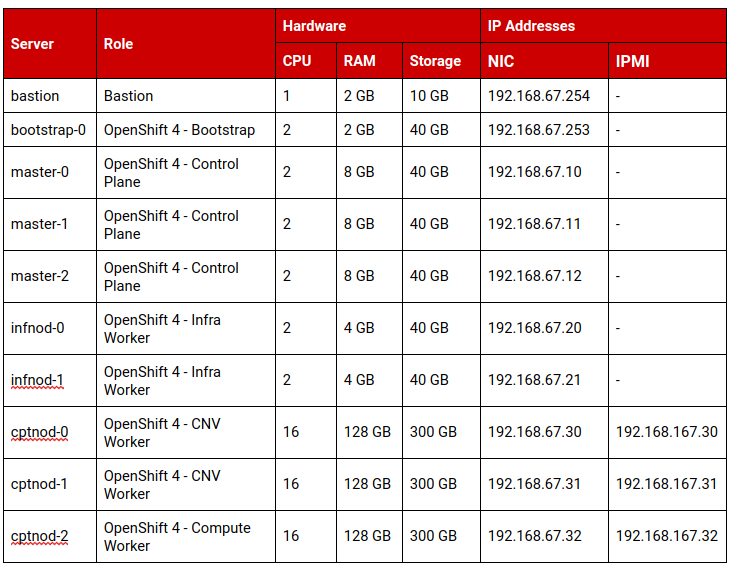

Let's start by describing the platform. A standard deployment of OpenShift Container Platform 4.1 will comprise the following machines:

- Bootstrap - a temporary machine that runs a minimal Kubernetes used to deploy the OpenShift control plane. It will be deleted at the end of the installation.

- Masters - the core of the control plane. They run the etcd cluster, as well as the core operators. We usually deploy three masters for high availability, because etcd requires a quorum.

- Infra nodes - the nodes where we run the base services, that we can also consider part of the control plane, such as the image registry. We usually deploy two infrastructure nodes for high availability.

- Compute nodes - the nodes where we run the applications. We usually deploy at least three compute nodes, in order to natively support clustered applications that require a quorum. These nodes are deployed on bare metal machines to allow higher density and limit the cost of virtualization.

We also use an additional virtual machine that acts as a bastion. It will also host the infrastructure services that we use to deploy OpenShift. The services are pretty simple and don't consume a lot of resources, so the bastion can be as small as 1 vCPU and 2 GB of memory.

The following table summarizes the machines that we use to deploy OpenShift:

Red Hat Enterprise Linux 8 was recently released, so we'll honor the great engineers behind it by running everything on RHEL 8. We simply download the DVD ISO image from access.redhat.com and install the bastion from it, using the minimal server profile.

During the installation, we configured the network as follows:

- Hostname: bastion.ocp67.example.com

- IP address: 192.168.67.254

- Netmask: 255.255.255.0

- Gateway: 192.168.67.1

- DNS server: 192.168.67.1

- DNS domain: ocp67.example.com

Then, our first step is to register the machine with Red Hat Subscription Manager and enable the base repositories for Red Hat Enterprise Linux 8:

| # subscription-manager register --username=<rhn_username>
# subscription-manager attach --pool=<rhn_pool>
# subscription-manager repos --disable='*' \
    --enable=rhel-8-for-x86_64-baseos-rpms \
    --enable=rhel-8-for-x86_64-appstream-rpms
# dnf update -y
# reboot |

The bastion is now ready to host the infrastructure services.

DNS

The first service that we need is DNS. If you have a company-wide DNS configuration, you should consider adding the records there. For our deployment though, we provide the steps to install and configure ISC Bind as the DNS server for the ocp67.example.com zone.

First, we install the bind-chroot package:

| # dnf install -y bind-chroot |

We then need to modify the /etc/named.conf file to:

- Listen on the IP address of the bastion, i.e. 192.168.67.254

- Allow queries and recursion for the local network, i.e. 192.168.67.0/24, via an ACL

- Disable DNSSEC validation, because we don't have the full chain of trust

- Associate the forward (ocp67.example.com) and reverse (67.168.192.in-addr.arpa) zones with files

| acl internal_nets { 192.168.67.0/24; };

options {
    listen-on port 53 { 127.0.0.1; 192.168.67.254; };
    listen-on-v6 port 53 { none; };
    directory "/var/named";
    dump-file "/var/named/data/cache_dump.db";
    statistics-file "/var/named/data/named_stats.txt";
    memstatistics-file "/var/named/data/named_mem_stats.txt";
    secroots-file "/var/named/data/named.secroots";
    recursing-file "/var/named/data/named.recursing";
    allow-query { localhost; internal_nets; };
    recursion yes;
    allow-recursion { localhost; internal_nets; };
    forwarders { 192.168.0.11; 192.168.0.12; };
    dnssec-enable yes;
    dnssec-validation no;
    managed-keys-directory "/var/named/dynamic";
    pid-file "/run/named/named.pid";
    session-keyfile "/run/named/session.key";
    /* https://fedoraproject.org/wiki/Changes/CryptoPolicy */
    include "/etc/crypto-policies/back-ends/bind.config";
};

logging {
    channel default_debug {
        file "data/named.run";
        severity dynamic;
    };
};

zone "." IN {
    type hint;
    file "named.ca";
};

include "/etc/named.rfc1912.zones";
include "/etc/named.root.key";

zone "ocp67.example.com" {
    type master;
    file "ocp67.example.com.zone";
    allow-query { any; };
    allow-transfer { none; };
    allow-update { none; };
};

zone "67.168.192.in-addr.arpa" {
    type master;
    file "67.168.192.in-addr.arpa.zone";
    allow-query { any; };
    allow-transfer { none; };
    allow-update { none; };
}; |

We need to create the forward zone file, /var/named/ocp67.example.com.zone, to store the zone records.

| $TTL 900

@                     IN SOA bastion.ocp67.example.com. hostmaster.ocp67.example.com. (
                        2019062002 1D 1H 1W 3H
                      )
                      IN NS bastion.ocp67.example.com.

bastion               IN A 192.168.67.254
bootstrap-0           IN A 192.168.67.253
master-0              IN A 192.168.67.10
master-1              IN A 192.168.67.11
master-2              IN A 192.168.67.12
infnod-0              IN A 192.168.67.20
infnod-1              IN A 192.168.67.21
cptnod-0              IN A 192.168.67.30
cptnod-1              IN A 192.168.67.31
cptnod-2              IN A 192.168.67.32

api                   IN A 192.168.67.254
api-int               IN A 192.168.67.254
apps                  IN A 192.168.67.254
*.apps                IN A 192.168.67.254

etcd-0                IN A 192.168.67.10
etcd-1                IN A 192.168.67.11
etcd-2                IN A 192.168.67.12

_etcd-server-ssl._tcp IN SRV 0 10 2380 etcd-0.ocp67.example.com.
                      IN SRV 0 10 2380 etcd-1.ocp67.example.com.
                      IN SRV 0 10 2380 etcd-2.ocp67.example.com. |

And the reverse zone file, /var/named/67.168.192.in-addr.arpa.zone, contains the PTR records.

| $TTL 900

@    IN SOA bastion.ocp67.example.com. hostmaster.ocp67.example.com. (
       2019062001 1D 1H 1W 3H
     )
     IN NS bastion.ocp67.example.com.

10   IN PTR master-0.ocp67.example.com.
11   IN PTR master-1.ocp67.example.com.
12   IN PTR master-2.ocp67.example.com.
20   IN PTR infnod-0.ocp67.example.com.
21   IN PTR infnod-1.ocp67.example.com.
30   IN PTR cptnod-0.ocp67.example.com.
31   IN PTR cptnod-1.ocp67.example.com.
32   IN PTR cptnod-2.ocp67.example.com.
253  IN PTR bootstrap-0.ocp67.example.com.
254  IN PTR bastion.ocp67.example.com. |
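Keeping the reverse zone in step with the forward zone by hand is easy to get wrong. As a minimal sketch, the PTR lines can be derived from the A records instead. The `ptr_from_forward` helper below is hypothetical (it is not part of BIND) and assumes one `name IN A address` record per line. When several names share an address, only the first one is kept, so host names should be listed before aliases such as `api` or `etcd-0`.

```shell
#!/usr/bin/env bash
# Sketch: derive PTR lines from "name IN A address" lines read on stdin.
# ptr_from_forward DOMAIN PREFIX
#   DOMAIN: zone name appended to each host, e.g. ocp67.example.com
#   PREFIX: first three octets of the reverse zone, e.g. 192.168.67
ptr_from_forward() {
    local domain="$1" prefix="$2"
    awk -v domain="$domain" -v prefix="$prefix" '
        # Keep A records inside the prefix; the first name seen for an address wins.
        $2 == "IN" && $3 == "A" && index($4, prefix ".") == 1 && !seen[$4]++ {
            n = split($4, octet, ".")
            printf "%s IN PTR %s.%s.\n", octet[n], $1, domain
        }'
}

# Example with a few records copied from the forward zone.
ptr_from_forward ocp67.example.com 192.168.67 <<'EOF'
master-0 IN A 192.168.67.10
cptnod-1 IN A 192.168.67.31
cptnod-2 IN A 192.168.67.32
etcd-0   IN A 192.168.67.10
EOF
```

The output still needs the `$TTL`, SOA and NS lines in front of it before it can serve as a zone file.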

We can now start the named-chroot service:

| # systemctl enable --now named-chroot.service |

And allow the service to be accessed at the firewall level:

| # firewall-cmd --permanent --add-service=dns

# firewall-cmd --reload |

Now that our DNS server is working, we should use it on the bastion as well. To do that, we simply need to update /etc/resolv.conf.

| # echo -e "search ocp67.example.com\nnameserver 192.168.67.254" > /etc/resolv.conf |
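Before moving on, it can be worth confirming that the records the installer depends on actually resolve. The following is a minimal sketch, assuming `dig` (from the bind-utils package) is installed on the bastion. `check_dns` is a hypothetical helper name, and the `DIG` variable exists only so that the resolver command can be swapped out.

```shell
#!/usr/bin/env bash
# Sketch: report which names resolve through a given DNS server.
# DIG may be overridden; it defaults to the dig binary from bind-utils.
DIG="${DIG:-dig}"

# check_dns SERVER NAME...
# Prints one line per name and returns 1 if at least one name has no answer.
check_dns() {
    local server="$1" name answer rc=0
    shift
    for name in "$@"; do
        # Only the first answer line is needed to know the name resolves.
        answer="$("$DIG" +short "$name" "@${server}" | head -n 1)"
        if [ -n "$answer" ]; then
            echo "OK      ${name} -> ${answer}"
        else
            echo "MISSING ${name}"
            rc=1
        fi
    done
    return "$rc"
}
```

A typical call would be `check_dns 192.168.67.254 api.ocp67.example.com api-int.ocp67.example.com test.apps.ocp67.example.com etcd-0.ocp67.example.com`, where `test.apps` is an arbitrary name used to exercise the wildcard record.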

TFTP

Depending on the machine, booting from the network will be done via PXE or iPXE. In our lab, the physical servers still rely on PXE. However, it's possible to make them use iPXE by loading a specific image via PXE. To do so, we need a TFTP server to export this image.

The first step is to install the tftp-server package, as well as the iPXE boot images. They are available in RHEL 8 repositories, so we simply use DNF:

| # dnf install -y tftp-server ipxe-bootimgs |

Then, we create the standard tree for the TFTP server and link the image:

| # mkdir -p /var/lib/tftpboot

# ln -s /usr/share/ipxe/undionly.kpxe /var/lib/tftpboot |

We can now start the tftp service:

| # systemctl enable --now tftp.service |

And allow the service to be accessed at the firewall level:

| # firewall-cmd --permanent --add-service=tftp

# firewall-cmd --reload |

DHCP

The next service we need is DHCP. It provides IP addresses to the machine interfaces, based on their MAC addresses, and it also provides the iPXE information. For this service, we install and configure ISC DHCP server. The package is natively available in RHEL 8, so we install it with:

| # dnf install -y dhcp-server |

Then, we create the /etc/dhcp/dhcpd.conf file with static MAC / IP mapping, as well as all the options we want the machines to receive, such as router, DNS server, domain name, etc…

| default-lease-time 900;
max-lease-time 7200;

subnet 192.168.67.0 netmask 255.255.255.0 {
    option routers 192.168.67.1;
    option subnet-mask 255.255.255.0;
    option domain-search "ocp67.example.com";
    option domain-name-servers 192.168.67.1;
    next-server 192.168.67.254;
    if exists user-class and option user-class = "iPXE" {
        filename "http://bastion.ocp67.example.com:8080/boot.ipxe";
    } else {
        filename "undionly.kpxe";
    }
}

host bootstrap-0 {
    hardware ethernet 00:1a:4a:16:01:28;
    fixed-address 192.168.67.253;
    option host-name "bootstrap-0.ocp67.example.com";
}

host master-0 {
    hardware ethernet 00:1a:4a:16:01:29;
    fixed-address 192.168.67.10;
    option host-name "master-0.ocp67.example.com";
}

host master-1 {
    hardware ethernet 00:1a:4a:16:01:2a;
    fixed-address 192.168.67.11;
    option host-name "master-1.ocp67.example.com";
}

host master-2 {
    hardware ethernet 00:1a:4a:16:01:2b;
    fixed-address 192.168.67.12;
    option host-name "master-2.ocp67.example.com";
}

host infnod-0 {
    hardware ethernet 00:1a:4a:16:01:2c;
    fixed-address 192.168.67.20;
    option host-name "infnod-0.ocp67.example.com";
}

host infnod-1 {
    hardware ethernet 00:1a:4a:16:01:2d;
    fixed-address 192.168.67.21;
    option host-name "infnod-1.ocp67.example.com";
}

host cptnod-0 {
    hardware ethernet d0:67:e5:a1:34:3e;
    fixed-address 192.168.67.30;
    option host-name "cptnod-0.ocp67.example.com";
}

host cptnod-1 {
    hardware ethernet d0:67:e5:e4:87:1e;
    fixed-address 192.168.67.31;
    option host-name "cptnod-1.ocp67.example.com";
}

host cptnod-2 {
    hardware ethernet d0:67:e5:8a:b9:1c;
    fixed-address 192.168.67.32;
    option host-name "cptnod-2.ocp67.example.com";
} |
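The host entries all follow the same pattern, so they can also be generated from a small inventory rather than typed by hand. This is a minimal sketch: `dhcp_hosts` is a hypothetical helper, and the whitespace-separated `name mac ip` inventory format is an assumption made for the example.

```shell
#!/usr/bin/env bash
# Sketch: print one dhcpd "host" stanza per "name mac ip" line read on stdin.
# dhcp_hosts DOMAIN
dhcp_hosts() {
    local domain="$1" name mac ip
    while read -r name mac ip; do
        # Skip blank lines and comments in the inventory.
        case "$name" in ''|'#'*) continue ;; esac
        printf 'host %s {\n' "$name"
        printf '  hardware ethernet %s;\n' "$mac"
        printf '  fixed-address %s;\n' "$ip"
        printf '  option host-name "%s.%s";\n' "$name" "$domain"
        printf '}\n'
    done
}

# Example with two machines from this deployment.
dhcp_hosts ocp67.example.com <<'EOF'
bootstrap-0 00:1a:4a:16:01:28 192.168.67.253
master-0    00:1a:4a:16:01:29 192.168.67.10
EOF
```

The generated stanzas can be appended after the subnet declaration in /etc/dhcp/dhcpd.conf.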

Note the statements to configure the PXE / iPXE protocol:

| next-server 192.168.67.254;
if exists user-class and option user-class = "iPXE" {
    filename "http://bastion.ocp67.example.com:8080/boot.ipxe";
} else {
    filename "undionly.kpxe";
} |

We specify the TFTP server with next-server. Then we answer differently depending on the protocol used. For iPXE, we provide the URL of the iPXE script served by matchbox. For PXE, we provide undionly.kpxe, which simply chainloads iPXE so that the machine then behaves like a native iPXE client.

We can now start the dhcpd service:

| # systemctl enable --now dhcpd.service |

And allow the service to be accessed at the firewall level:

| # firewall-cmd --permanent --add-service=dhcp

# firewall-cmd --reload |

Matchbox

According to its documentation, Matchbox is a service that matches machines to profiles to PXE boot and provision Container Linux clusters. And that's exactly what we want because OpenShift 4 is based on Red Hat Enterprise Linux CoreOS.

The installation documentation says that no package for RHEL is available due to Go version conflicts, and that we need to follow the generic deployment method, based on pre-built binaries. So, the first step is to download and extract the latest release: v0.8.0.

| # wget https://github.com/poseidon/matchbox/releases/download/v0.8.0/matchbox-…
# tar -xzf matchbox-v0.8.0-linux-amd64.tar.gz
# cd matchbox-v0.8.0-linux-amd64 |

Then we copy the matchbox binary to /usr/local/bin, so that it's available in the PATH. We also copy the example systemd unit in /etc/systemd/system to allow starting matchbox at boot.

| # cp matchbox /usr/local/bin
# cp contrib/systemd/matchbox-local.service /etc/systemd/system/matchbox.service
# cd |

We need to create the standard directories that matchbox will look for:

| # mkdir /etc/matchbox

# mkdir -p /var/lib/matchbox/{assets,groups,ignition,profiles} |

And we populate them with the required files for bare metal:

| # cd /var/lib/matchbox/assets
# RHCOS_BASEURL=https://mirror.openshift.com/pub/openshift-v4/dependencies/rhcos/
# wget ${RHCOS_BASEURL}/4.1/latest/rhcos-4.1.0-x86_64-installer-initramfs.img
# wget ${RHCOS_BASEURL}/4.1/latest/rhcos-4.1.0-x86_64-installer-kernel
# wget ${RHCOS_BASEURL}/4.1/latest/rhcos-4.1.0-x86_64-metal-bios.raw.gz |

We also create the different profiles we need to deploy OpenShift 4.1: bootstrap, master, infnod and cptnod.

/var/lib/matchbox/profiles/bootstrap.json

| {
  "id": "bootstrap",
  "name": "OCP 4 - Bootstrap",
  "ignition_id": "bootstrap.ign",
  "boot": {
    "kernel": "/assets/rhcos-4.1.0-x86_64-installer-kernel",
    "initrd": [
      "/assets/rhcos-4.1.0-x86_64-installer-initramfs.img"
    ],
    "args": [
      "ip=dhcp",
      "rd.neednet=1",
      "console=tty0",
      "console=ttyS0",
      "coreos.inst=yes",
      "coreos.inst.install_dev=sda",
      "coreos.inst.image_url=http://bastion.ocp67.example.com:8080/assets/rhcos-4.1.0-x86_64-metal-b…",
      "coreos.inst.ignition_url=http://bastion.ocp67.example.com:8080/ignition?mac=${mac:hexhyp}"
    ]
  }
} |

/var/lib/matchbox/profiles/master.json

| {
  "id": "master",
  "name": "OCP 4 - Master",
  "ignition_id": "master.ign",
  "boot": {
    "kernel": "/assets/rhcos-4.1.0-x86_64-installer-kernel",
    "initrd": [
      "/assets/rhcos-4.1.0-x86_64-installer-initramfs.img"
    ],
    "args": [
      "ip=dhcp",
      "rd.neednet=1",
      "console=tty0",
      "console=ttyS0",
      "coreos.inst=yes",
      "coreos.inst.install_dev=sda",
      "coreos.inst.image_url=http://bastion.ocp67.example.com:8080/assets/rhcos-4.1.0-x86_64-metal-b…",
      "coreos.inst.ignition_url=http://bastion.ocp67.example.com:8080/ignition?mac=${mac:hexhyp}"
    ]
  }
} |

/var/lib/matchbox/profiles/infnod.json

| {
  "id": "infnod",
  "name": "OCP 4 - Infrastructure Node",
  "ignition_id": "worker.ign",
  "boot": {
    "kernel": "/assets/rhcos-4.1.0-x86_64-installer-kernel",
    "initrd": [
      "/assets/rhcos-4.1.0-x86_64-installer-initramfs.img"
    ],
    "args": [
      "ip=dhcp",
      "rd.neednet=1",
      "console=tty0",
      "console=ttyS0",
      "coreos.inst=yes",
      "coreos.inst.install_dev=sda",
      "coreos.inst.image_url=http://bastion.ocp67.example.com:8080/assets/rhcos-4.1.0-x86_64-metal-b…",
      "coreos.inst.ignition_url=http://bastion.ocp67.example.com:8080/ignition?mac=${mac:hexhyp}"
    ]
  }
} |

/var/lib/matchbox/profiles/cptnod.json

| {
  "id": "cptnod",
  "name": "OCP 4 - Compute Node",
  "ignition_id": "worker.ign",
  "boot": {
    "kernel": "/assets/rhcos-4.1.0-x86_64-installer-kernel",
    "initrd": [
      "/assets/rhcos-4.1.0-x86_64-installer-initramfs.img"
    ],
    "args": [
      "ip=dhcp",
      "rd.neednet=1",
      "console=tty0",
      "console=ttyS0",
      "coreos.inst=yes",
      "coreos.inst.install_dev=sda",
      "coreos.inst.image_url=http://bastion.ocp67.example.com:8080/assets/rhcos-4.1.0-x86_64-metal-b…",
      "coreos.inst.ignition_url=http://bastion.ocp67.example.com:8080/ignition?mac=${mac:hexhyp}"
    ]
  }
} |

We also create the group files that associate the MAC addresses of the machines with the profiles, i.e. map each machine to its role in the OpenShift 4.1 deployment.

/var/lib/matchbox/groups/bootstrap-0.json

| {
  "id": "bootstrap-0",
  "name": "OCP 4 - Bootstrap server",
  "profile": "bootstrap",
  "selector": {
    "mac": "00:1a:4a:16:01:28"
  }
} |

/var/lib/matchbox/groups/master-0.json

| {
  "id": "master-0",
  "name": "OCP 4 - Master 1",
  "profile": "master",
  "selector": {
    "mac": "00:1a:4a:16:01:29"
  }
} |

/var/lib/matchbox/groups/master-1.json

| {
  "id": "master-1",
  "name": "OCP 4 - Master 2",
  "profile": "master",
  "selector": {
    "mac": "00:1a:4a:16:01:2a"
  }
} |

/var/lib/matchbox/groups/master-2.json

| {
  "id": "master-2",
  "name": "OCP 4 - Master 3",
  "profile": "master",
  "selector": {
    "mac": "00:1a:4a:16:01:2b"
  }
} |

/var/lib/matchbox/groups/infnod-0.json

| {
  "id": "infnod-0",
  "name": "OCP 4 - Infrastructure node #1",
  "profile": "infnod",
  "selector": {
    "mac": "00:1a:4a:16:01:2c"
  }
} |

/var/lib/matchbox/groups/infnod-1.json

| {
  "id": "infnod-1",
  "name": "OCP 4 - Infrastructure node #2",
  "profile": "infnod",
  "selector": {
    "mac": "00:1a:4a:16:01:2d"
  }
} |

/var/lib/matchbox/groups/cptnod-0.json

| {
  "id": "cptnod-0",
  "name": "OCP 4 - Compute node #1",
  "profile": "cptnod",
  "selector": {
    "mac": "d0:67:e5:a1:34:3e"
  }
} |

/var/lib/matchbox/groups/cptnod-1.json

| {
  "id": "cptnod-1",
  "name": "OCP 4 - Compute node #2",
  "profile": "cptnod",
  "selector": {
    "mac": "d0:67:e5:e4:87:1e"
  }
} |

/var/lib/matchbox/groups/cptnod-2.json

| {
  "id": "cptnod-2",
  "name": "OCP 4 - Compute node #3",
  "profile": "cptnod",
  "selector": {
    "mac": "d0:67:e5:8a:b9:1c"
  }
} |

The matchbox binary expects to run as matchbox user, so we create it:

| # useradd -U -r matchbox |

We can now start the matchbox service:

| # systemctl daemon-reload

# systemctl enable --now matchbox.service |

And allow the service to be accessed at the firewall level:

| # firewall-cmd --permanent --add-port 8080/tcp

# firewall-cmd --reload |
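The group files created earlier differ only in their id, profile and MAC address, so they lend themselves to being generated. The sketch below assumes an inventory of `id profile mac` lines. `write_groups` is a hypothetical helper, and the generated `name` field is simplified compared to the hand-written files.

```shell
#!/usr/bin/env bash
# Sketch: write one matchbox group file per "id profile mac" line read on stdin.
# write_groups DIR
write_groups() {
    local dir="$1" id profile mac
    mkdir -p "$dir"
    while read -r id profile mac; do
        # Skip blank lines and comments in the inventory.
        case "$id" in ''|'#'*) continue ;; esac
        cat > "${dir}/${id}.json" <<EOF
{
  "id": "${id}",
  "name": "OCP 4 - ${id}",
  "profile": "${profile}",
  "selector": {
    "mac": "${mac}"
  }
}
EOF
    done
}

# Example: generate two group files in a scratch directory and show one.
demo_dir="$(mktemp -d)"
write_groups "$demo_dir" <<'EOF'
master-0 master 00:1a:4a:16:01:29
cptnod-0 cptnod d0:67:e5:a1:34:3e
EOF
cat "${demo_dir}/cptnod-0.json"
```

Pointing the helper at /var/lib/matchbox/groups instead of a scratch directory would produce the files matchbox reads.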

HAProxy

The OpenShift 4.1 control plane provides two services: API and router. The API allows the clients to interact with the cluster, and the router allows access to the applications hosted on the cluster. Among these applications is the OpenShift Console, for example.

The control plane is deployed in a highly available configuration with 3 masters and 2 infrastructure nodes, so we need a load balancer to redirect the traffic to the different nodes, depending on the status. HAProxy is provided with RHEL 8, so we use it:

| # dnf install -y haproxy |

We now need to configure it via /etc/haproxy/haproxy.cfg.

| global
    log 127.0.0.1 local2
    chroot /var/lib/haproxy
    pidfile /var/run/haproxy.pid
    maxconn 4000
    user haproxy
    group haproxy
    daemon
    stats socket /var/lib/haproxy/stats
    ssl-default-bind-ciphers PROFILE=SYSTEM
    ssl-default-server-ciphers PROFILE=SYSTEM

defaults
    mode http
    log global
    option httplog
    option dontlognull
    option http-server-close
    option forwardfor except 127.0.0.0/8
    option redispatch
    retries 3
    timeout http-request 10s
    timeout queue 1m
    timeout connect 10s
    timeout client 1m
    timeout server 1m
    timeout http-keep-alive 10s
    timeout check 10s
    maxconn 3000

frontend ocp4-kubernetes-api-server
    mode tcp
    option tcplog
    bind api.ocp67.example.com:6443
    default_backend ocp4-kubernetes-api-server

frontend ocp4-machine-config-server
    mode tcp
    option tcplog
    bind api.ocp67.example.com:22623
    default_backend ocp4-machine-config-server

frontend ocp4-router-http
    mode tcp
    option tcplog
    bind apps.ocp67.example.com:80
    default_backend ocp4-router-http

frontend ocp4-router-https
    mode tcp
    option tcplog
    bind apps.ocp67.example.com:443
    default_backend ocp4-router-https

backend ocp4-kubernetes-api-server
    mode tcp
    balance source
    server bootstrap-0 bootstrap-0.ocp67.example.com:6443 check
    server master-0 master-0.ocp67.example.com:6443 check
    server master-1 master-1.ocp67.example.com:6443 check
    server master-2 master-2.ocp67.example.com:6443 check

backend ocp4-machine-config-server
    mode tcp
    balance source
    server bootstrap-0 bootstrap-0.ocp67.example.com:22623 check
    server master-0 master-0.ocp67.example.com:22623 check
    server master-1 master-1.ocp67.example.com:22623 check
    server master-2 master-2.ocp67.example.com:22623 check

backend ocp4-router-http
    mode tcp
    server infnod-0 infnod-0.ocp67.example.com:80 check
    server infnod-1 infnod-1.ocp67.example.com:80 check

backend ocp4-router-https
    mode tcp
    server infnod-0 infnod-0.ocp67.example.com:443 check
    server infnod-1 infnod-1.ocp67.example.com:443 check |

We also need to enable an SELinux boolean to allow HAProxy to bind to the non-standard ports used by the API frontends.

| # setsebool -P haproxy_connect_any on |

We can now start the haproxy service:

| # systemctl enable --now haproxy.service |

And allow the service to be accessed at the firewall level:

| # firewall-cmd --permanent --add-service http
# firewall-cmd --permanent --add-service https
# firewall-cmd --permanent --add-port 6443/tcp
# firewall-cmd --permanent --add-port 22623/tcp
# firewall-cmd --reload |

This configuration is enough for a lab, but in production we would use a highly available configuration with keepalived.

Deploying OpenShift Container Platform 4.1

We're now ready to deploy OpenShift 4.1. There are a few steps to follow, but it's pretty straightforward now that the base services are running.

SSH keypair

In order to follow the configuration, or simply to perform maintenance on the different machines of our cluster, we will need to connect via SSH. The ignition process allows us to import an SSH public key into /home/core/.ssh/authorized_keys when we create the machine. So, we create a new SSH key pair on the bastion:

| # ssh-keygen -t rsa -b 2048 -N '' -f /root/.ssh/id_rsa |

Pull secret

We also need to get our secret that will be used to pull the images needed to run OpenShift. This pull secret can be retrieved from https://cloud.redhat.com/openshift/install/metal/user-provisioned. On this page, we find a "Download Pull Secret" button that will store the file on your machine. We recommend keeping it, as we may need it for other OpenShift clusters. In the following steps, we consider that the pull secret is in /root/ocp4_pull_secret on the bastion.

Installation configuration

The OpenShift installation mainly relies on the ignition process that configures the machines with their appropriate roles. Creating the ignition files by hand is tedious and error-prone. Fortunately, there is a tool to generate these files: openshift-install. To install it, we simply download it from the OpenShift mirror:

| # OCP4_BASEURL=https://mirror.openshift.com/pub/openshift-v4/clients/ocp/latest
# curl -s ${OCP4_BASEURL}/openshift-install-linux-4.1.3.tar.gz \
    | tar -xzf - -C /usr/local/bin/ openshift-install |

Later, we will also need the oc command to interact with our cluster. We download it from the same mirror:

| # curl -s ${OCP4_BASEURL}/openshift-client-linux-4.1.3.tar.gz \
    | tar -xzf - -C /usr/local/bin/ oc |

The openshift-install command requires a work directory to store the assets it generates:

| # mkdir /root/ocp67 |

The openshift-install command is able to work in interactive or unattended mode. To be able to deploy on UPI, we need to use the unattended mode and provide an answer file that will be used to generate the assets. The file has to be named install-config.yaml and exist in the work directory. Here is the file we use:

| ---
apiVersion: v1
baseDomain: example.com
compute:
- hyperthreading: Enabled
  name: worker
  replicas: 0
controlPlane:
  hyperthreading: Enabled
  name: master
  replicas: 3
metadata:
  name: ocp67
networking:
  clusterNetworks:
  - cidr: 10.128.0.0/14
    hostPrefix: 23
  networkType: OpenShiftSDN
  serviceNetwork:
  - 172.30.0.0/16
platform:
  none: {}
pullSecret: '<the_content_of_/root/ocp4_pull_secret>'
sshKey: '<the_content_of_/root/.ssh/id_rsa.pub>' |
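Pasting the pull secret and the SSH public key by hand is a common source of quoting mistakes. As a minimal sketch, the file can be rendered from the two source files instead. `render_install_config` is a hypothetical helper, and it assumes that neither file contains a single quote, since the values are wrapped in single-quoted YAML strings.

```shell
#!/usr/bin/env bash
# Sketch: print an install-config.yaml built from a pull secret file
# and an SSH public key file.
# render_install_config PULL_SECRET_FILE SSH_PUBKEY_FILE
render_install_config() {
    local pull_secret ssh_key
    # Strip newlines so that each value fits on a single YAML line.
    pull_secret="$(tr -d '\n' < "$1")"
    ssh_key="$(tr -d '\n' < "$2")"
    cat <<EOF
---
apiVersion: v1
baseDomain: example.com
compute:
- hyperthreading: Enabled
  name: worker
  replicas: 0
controlPlane:
  hyperthreading: Enabled
  name: master
  replicas: 3
metadata:
  name: ocp67
networking:
  clusterNetworks:
  - cidr: 10.128.0.0/14
    hostPrefix: 23
  networkType: OpenShiftSDN
  serviceNetwork:
  - 172.30.0.0/16
platform:
  none: {}
pullSecret: '${pull_secret}'
sshKey: '${ssh_key}'
EOF
}
```

On the bastion this could be used as `render_install_config /root/ocp4_pull_secret /root/.ssh/id_rsa.pub > /root/ocp67/install-config.yaml`. Keeping a copy of the result outside the work directory is advisable, because openshift-install consumes the file when it generates the assets.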

We can now use openshift-install to generate the ignition files:

| # openshift-install --dir=/root/ocp67 create ignition-configs |

The work directory now contains the ignition files that we already reference in the matchbox configuration. We simply need to copy them into the matchbox directory:

| # cp /root/ocp67/*.ign /var/lib/matchbox/ignition |
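Before booting any machine, it can help to request the ignition configuration the same way a machine would. The profiles build the URL with iPXE's `${mac:hexhyp}` setting, which renders the MAC address as hyphen-separated hex. The sketch below reproduces that URL for a given MAC. `ignition_url` is a hypothetical helper, and lowercasing the address is a normalization chosen for the example.

```shell
#!/usr/bin/env bash
# Sketch: print the ignition URL requested by a machine with the given MAC.
# ignition_url MAC   (MAC in colon-separated form, e.g. 00:1a:4a:16:01:29)
ignition_url() {
    local base="http://bastion.ocp67.example.com:8080" mac
    # Lowercase the hex digits and replace colons with hyphens.
    mac="$(printf '%s' "$1" | tr 'A-F:' 'a-f-')"
    printf '%s/ignition?mac=%s\n' "$base" "$mac"
}

# Example: URL for master-0.
ignition_url 00:1a:4a:16:01:29
```

Feeding the result to curl, for example `curl -s "$(ignition_url 00:1a:4a:16:01:29)" | head -c 200`, should return the beginning of master.ign if the group and profile for that machine are matched as intended.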

We can now boot the virtual machines in PXE mode, in no specific order. When booting, the machines use the ignition configuration to install the cluster. We can monitor the deployment via the openshift-install command, which checks that the initial cluster operators are available:

| # openshift-install --dir=/root/ocp67 wait-for bootstrap-complete --log-level debug |

While the installation proceeds, we can collect the Kubernetes configuration file generated by openshift-install, at the same time as the ignition files.

| # mkdir /root/.kube

# cp /root/ocp67/auth/kubeconfig /root/.kube/config |

This file contains the credentials to connect to the API. And we will use them to configure the storage for the image registry. During the deployment, no storage backend is specified to allow us to set what we want. In production, we would select a resilient storage solution. As this is not the main topic of this article, we will stick to ephemeral storage, simply by configuring an empty directory.

| # oc patch configs.imageregistry.operator.openshift.io cluster \
    --type merge \
    --patch '{"spec":{"storage":{"emptyDir":{}}}}' |

After some time, the image-registry cluster operator will be available. We can now validate that the deployment is complete. For that, we can again use openshift-install:

| # openshift-install --dir=/root/ocp67 wait-for install-complete --log-level debug |

We now have a fully working control plane with infrastructure nodes. We can access the OpenShift console via the following link: https://console-openshift-console.apps.ocp67.example.com. To login, the username is kubeadmin and we can find the password in /root/ocp67/auth/kubeadmin-password.

We can now delete the bootstrap node, because it is only used during the initialization phase. We can also remove it from the HAProxy backend pools.
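Removing the bootstrap node from the backend pools amounts to deleting the server lines that point at it. A minimal sketch follows, where `drop_bootstrap` is a hypothetical helper that matches on the target address rather than on the server label, and prints the result instead of editing the file in place.

```shell
#!/usr/bin/env bash
# Sketch: print an HAProxy configuration without the bootstrap server lines.
# drop_bootstrap FILE
drop_bootstrap() {
    # Delete every "server" line whose target is the bootstrap machine.
    sed -E '/^[[:space:]]*server[[:space:]].*bootstrap-0\.ocp67\.example\.com:/d' "$1"
}
```

One cautious way to apply it is to write the output to a new file, validate that file with `haproxy -c -f`, replace /etc/haproxy/haproxy.cfg with it, and then reload the haproxy service.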

Bare metal nodes

Now that our OpenShift environment is up, we can deploy the bare metal compute nodes, as they will be used to run the applications. This is pretty straightforward. The native VLAN on the main network interface is the same as the one connected to the bastion's interface. That ensures that DHCP will work.

To make the bare metal machines boot via PXE, we need to configure them. Here, we only show the basic steps, which are run as IPMI commands. Depending on the hardware vendor, additional steps that are not available via IPMI may be required to enable PXE on certain interfaces.

To be able to send IPMI commands, we need to install ipmitool. It is part of RHEL 8.

| # dnf install ipmitool |

Then, we need to tell each bare metal machine to boot over PXE during the next boot. This is done by setting the chassis bootdev to pxe. And finally, we reset the power state of the bare metal machines.

| # for i in 192.168.101.3{0,1,2}; do
    ipmitool -I lanplus -H ${i} -U admin chassis bootdev pxe
    ipmitool -I lanplus -H ${i} -U admin chassis power cycle
  done |

The compute nodes will boot via PXE and install the required components. A few minutes later, they will appear in the list of nodes and we will be able to deploy an application. The service catalog provides some great examples to start with.
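On user-provisioned infrastructure, new nodes typically submit certificate signing requests (CSRs), and these may need to be approved before the nodes show up as Ready. This step is not covered above, so treat the following as a sketch under that assumption. `pending_csrs` is a hypothetical helper that reads the tabular output of `oc get csr` and assumes the condition is the last column.

```shell
#!/usr/bin/env bash
# Sketch: list the names of pending CSRs from `oc get csr` output on stdin.
pending_csrs() {
    # Skip the header line and keep rows whose last column is exactly "Pending".
    awk 'NR > 1 && $NF == "Pending" { print $1 }'
}
```

With GNU xargs, the pending requests could then be approved with `oc get csr | pending_csrs | xargs -r oc adm certificate approve`. It is worth reviewing the list before approving anything, since approval grants the requesting node access to the cluster.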