Today, Pure Storage is excited to announce Pure Service Orchestrator. It is now possible to deliver container storage-as-a-service that empowers your developers to build and deploy scale-out microservices applications. The agility your developers assume they can only get from the public cloud is now possible on premises!

In this blog, we’ll discuss why the adoption of containers is exploding, how the lack of persistent storage threatens to slow adoption, and why a newer, smarter approach to storage delivery for containerized application environments is needed.

Before you go further, you might want to check out our lightboard video on Pure Service Orchestrator.

We live in a world of rapidly changing applications

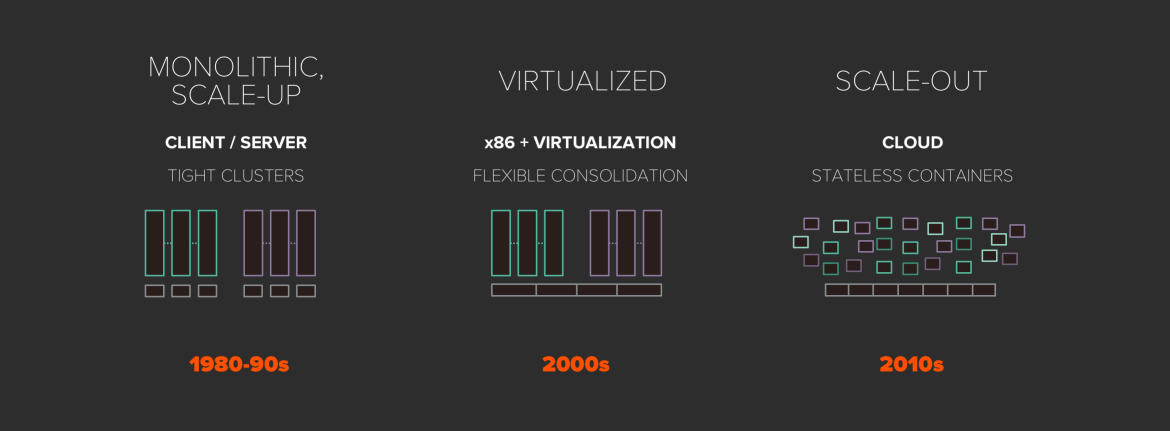

Businesses are rapidly moving from monolithic, scale-up applications to virtualized applications and now to scale-out, microservices applications. These new applications are typically built on containers.

Why containers?

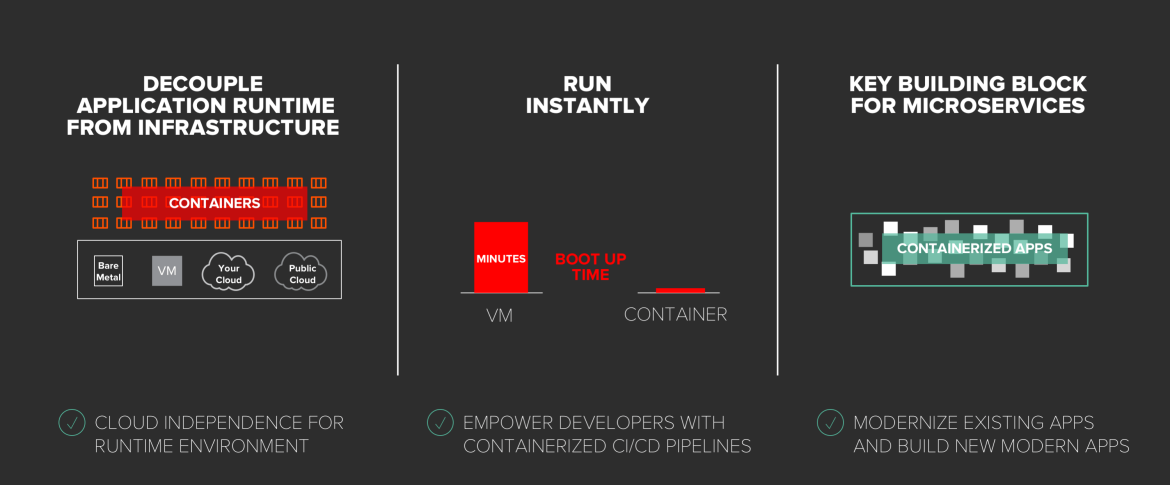

Containers are lightweight, compact, and flexible runtime environments that package your application code together with all its dependencies and decouple the application runtime from the infrastructure. This decoupling enables portability. Because they are lightweight, containers boot up and run almost instantly, which facilitates scalability. Containers also allow greater modularity, which makes them an essential building block for microservices.

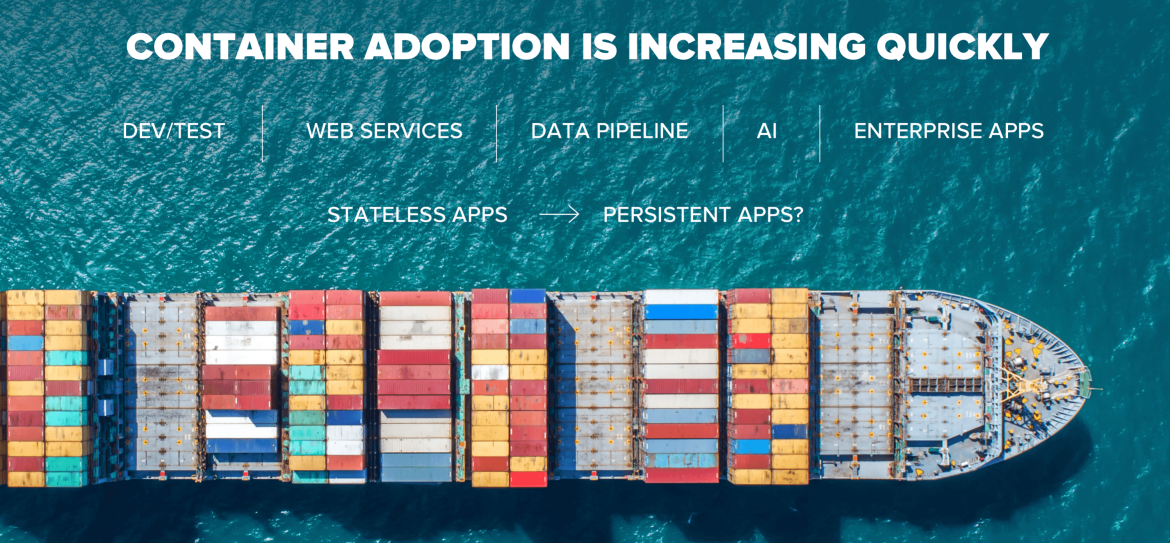

No wonder container adoption is exploding. IDC predicts that by 2021, 80% of application development on cloud platforms will use microservices and cloud functions, and over 95% of new microservices will be deployed in containers[1].

Initial adoption of containers is showing up mostly in dev/test, web services, data pipelines, and stateless applications. But the lack of persistent storage support threatens to limit adoption of containers for mission-critical, persistent applications.

Pure’s journey to container storage-as-a-service

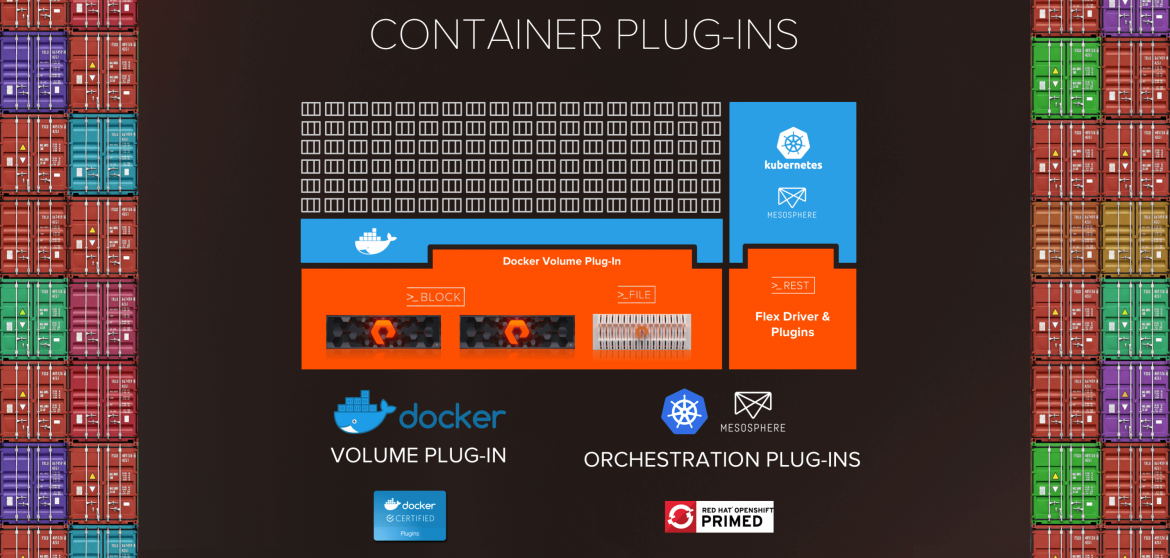

In 2017, our New Stack team kicked off this effort and built seamless integrations with container platforms and orchestration engines through plugins for Docker, a Kubernetes FlexVolume driver, and more, so you can use persistent storage in containerized environments.

This was only the beginning!

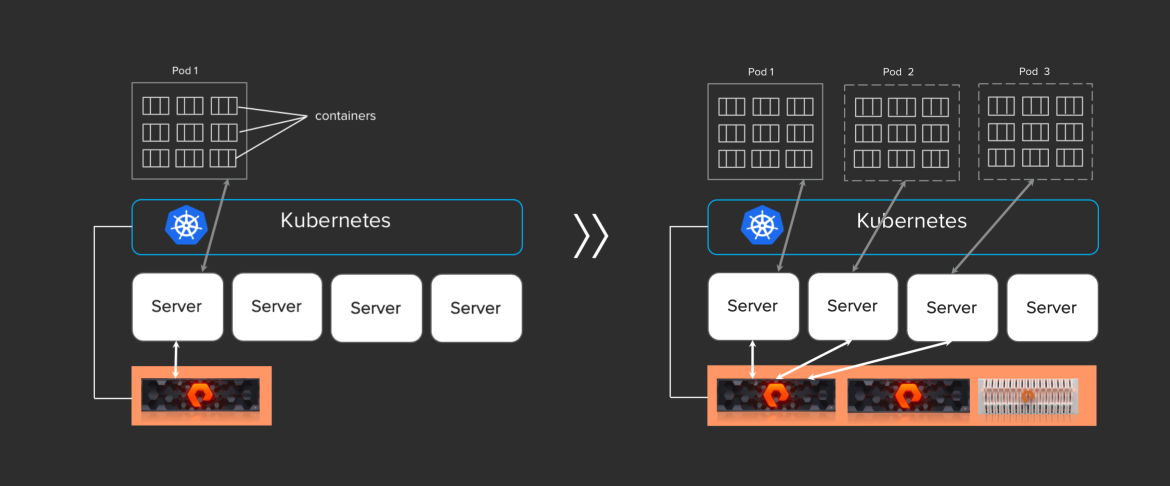

We quickly realized that device plugins alone are not enough to deliver the cloud experience your developers expect today. This gap is amplified by the highly fluid nature of containerized environments. Stateless containers are spun up and down within seconds, while stateful containers live much longer. Some containers require block storage, while others want file storage. And your container environment can rapidly scale to thousands of containers, enough to push the boundaries of any single storage system. How do you handle storage provisioning and scaling for such a dynamic environment?

What if you could truly deliver a storage-as-a-service experience to your containerized application environment?

Here’s what we thought a seamless container storage-as-a-service should look like:

- Simple, Automated & Integrated: Provisions storage on demand automatically via policy and integrates seamlessly into container orchestration frameworks, enabling DevOps- and developer-friendly ways to consume storage.

- Elastic: Lets you start small and scale your storage environment easily, with the flexibility to mix and match varied configurations as your container environment grows.

- Multi-protocol: Supports file, block, and object.

- Enterprise-grade: Delivers the same Tier 1 resiliency, reliability, and protection that your mission-critical applications depend on, now extended to stateful applications in container environments.

- Shared: Makes shared storage a viable (and preferred) architectural choice for next-generation containerized data centers by delivering a vastly superior experience compared to direct-attached storage alternatives.

Introducing Pure Service Orchestrator

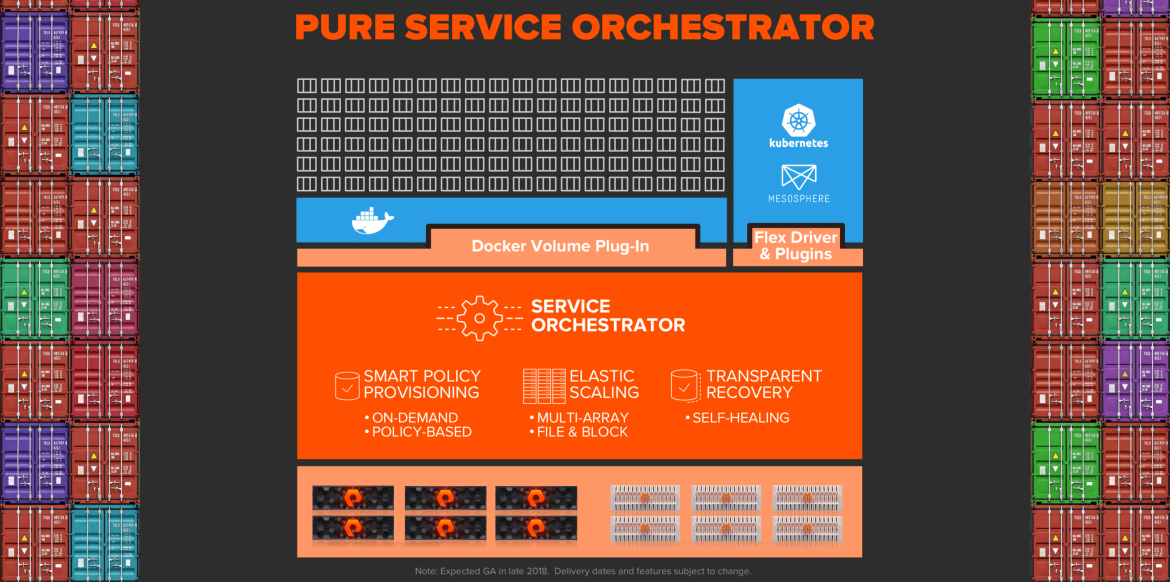

Pure Service Orchestrator is designed to give your developers the experience they expect they can only get from the public cloud. Service Orchestrator integrates seamlessly with container orchestration frameworks such as Kubernetes and functions as the control-plane virtualization layer that lets your containerized environment move from consuming storage-as-a-device to consuming storage-as-a-service:

- Smart Policy Provisioning: Delivers storage on demand via policy. Service Orchestrator makes the best provisioning decision for each storage request by assessing multiple factors, such as the performance load, capacity utilization, and health of each storage system, in real time. Building on the effortless foundation of Pure Storage, Service Orchestrator delivers an effortless experience and frees the container admin from writing complex pod definitions. For example, a default storage request to Service Orchestrator can be as simple as specifying the storage size. No size specified? No problem! Service Orchestrator will service those requests too.

- Elastic Scaling: Scales container storage across multiple FlashArray systems, FlashBlade™ systems, or a mix of both, supports a mix of file and block, and gives each storage system in the service the flexibility to have its own configuration. Because you can expand your storage service with a single command, you can start small and scale storage seamlessly and quickly as the needs of your containerized environments grow.

- Transparent Recovery: Self-heals to keep your services robust. For example, Service Orchestrator prevents accidental data corruption by ensuring a storage volume is bound to a single persistent volume claim at any given time. If the Kubernetes master sends a request to attach the same volume to another Kubernetes node, Service Orchestrator first disconnects the volume from the original node and only then attaches it to the new node. This way, if a Kubernetes cluster "split-brain" condition occurs (where the master and a node fall out of sync due to loss of communication), Service Orchestrator prevents simultaneous I/O to the same storage volume, which could corrupt data.
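To make the three behaviors above more concrete, here is a toy Python model. It is a minimal sketch under stated assumptions, not Pure Service Orchestrator's implementation or API: the class and method names (`Backend`, `Orchestrator`, `provision`, `attach`), the equal weighting of utilization and load in the score, and the 1024 GiB default size are all hypothetical. It shows policy-based placement (pick the healthy backend of the right protocol with the lowest combined utilization/load score), a default size when none is requested, single-call expansion, and detach-before-attach so a volume is never attached to two nodes at once.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional

DEFAULT_SIZE_GIB = 1024  # hypothetical default used when a request omits a size


@dataclass
class Backend:
    name: str
    protocol: str        # "block" (FlashArray-like) or "file" (FlashBlade-like)
    capacity_gib: int
    used_gib: int = 0
    load: float = 0.0    # normalized performance load: 0.0 idle, 1.0 saturated
    healthy: bool = True

    def score(self) -> float:
        # Lower is better; utilization and load weighted equally (illustrative only).
        return 0.5 * (self.used_gib / self.capacity_gib) + 0.5 * self.load


class Orchestrator:
    def __init__(self) -> None:
        self.backends: list[Backend] = []
        self.placements: dict[str, str] = {}   # volume -> backend name
        self.attachments: dict[str, str] = {}  # volume -> node it is attached to
        self.log: list[str] = []

    def add_backend(self, backend: Backend) -> None:
        # Elastic scaling: growing the pool is a single call.
        self.backends.append(backend)

    def provision(self, volume: str, protocol: str, size_gib: Optional[int] = None) -> str:
        size = size_gib if size_gib is not None else DEFAULT_SIZE_GIB
        # Only healthy backends of the requested protocol with enough free space qualify.
        candidates = [
            b for b in self.backends
            if b.healthy and b.protocol == protocol and b.capacity_gib - b.used_gib >= size
        ]
        if not candidates:
            raise RuntimeError(f"no healthy {protocol} backend can fit {size} GiB")
        best = min(candidates, key=lambda b: b.score())
        best.used_gib += size
        self.placements[volume] = best.name
        return best.name

    def attach(self, volume: str, node: str) -> None:
        current = self.attachments.get(volume)
        if current == node:
            return
        if current is not None:
            # Detach from the old node before attaching elsewhere, so two nodes
            # never hold the volume at the same time (recorded as a log entry here).
            self.log.append(f"detach {volume} from {current}")
        self.log.append(f"attach {volume} to {node}")
        self.attachments[volume] = node


if __name__ == "__main__":
    orch = Orchestrator()
    orch.add_backend(Backend("fa-1", "block", capacity_gib=10_000, used_gib=8_000, load=0.2))
    orch.add_backend(Backend("fa-2", "block", capacity_gib=10_000, used_gib=1_000, load=0.3))
    orch.add_backend(Backend("fb-1", "file", capacity_gib=50_000))
    print(orch.provision("pg-data", "block", 500))  # fa-2 (score 0.2 vs 0.5 for fa-1)
    print(orch.provision("shared-logs", "file"))    # fb-1, using the default size
    orch.attach("pg-data", "node-a")
    orch.attach("pg-data", "node-b")
    print(orch.log)
```

In this sketch the less-loaded, emptier array wins even though both arrays could fit the volume, and moving `pg-data` from `node-a` to `node-b` always produces a detach entry before the new attach.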

Now that you know what Pure Service Orchestrator is capable of, you can see it in action here:

Extending Beyond Containers to Platform-as-a-Service

Many developers want to go beyond containers-as-a-service to platform-as-a-service. We’ve taken care of that as well! Check out this blog post to learn about our reference architecture for all-flash, on-premises platform-as-a-service with Red Hat OpenShift.

Combining Service Experience with Service Consumption

Of course, delivering a true cloud experience demands not only a cloud architecture but also a cloud consumption model, and we have some exciting announcements in this area too. Check out our ES2 (Evergreen Storage Service) blog to learn more.

The Future Is Exciting

Pure Service Orchestrator is just getting started. Over time, it will continue to get smarter and more effortless. We’re building a world-class New Stack team that thinks outside the box and is working to deliver innovative new capabilities with each release, beginning with the initial release of Service Orchestrator in late 2018. We couldn’t be more excited!

Summary

Now you can deliver container storage-as-a-service (even PaaS)! Effortlessly extend your infrastructure beyond existing scale-up and virtualized applications to support containerized, persistent applications, all on Shared Accelerated Storage infrastructure. That’s the power of a Data-Centric Architecture!
