Red Hat blog

In a previous post, we saw how to leverage Istio Multicluster to deploy an application (bookinfo) on multiple Red Hat OpenShift clusters and apply mesh policies on all of the deployed services.

We also saw that the deployment process was relatively complex. In this post we are going to see how Federation V2 can help simplify the process of deploying an application to multiple clusters.

Federation V2

Federation V2, as the name suggests, is the evolution of the Kubernetes Federation initiative, after the project was rebooted around the beginning of 2018.

Federation V2 narrows the initial scope of Federation and focuses on creating a sophisticated and generic placement engine that, based on policies, decides whether and where to place arbitrary Kubernetes API objects.

Federation V2 comes with a set of API objects pre-configured for federation, but others can be added. This is a significant enhancement over V1, in which the set of federated objects was hardwired in the code, so the federation project was always catching up with the Kubernetes code base.

With the new model, this problem goes away, and we can federate CRDs as well.

Another important change since V1 is that Federation V2 does not have a dedicated API endpoint; it reuses the master API endpoint of the cluster where it is installed. One cluster needs to be elected as the main federation cluster, and in this cluster we need to install two components:

- A cluster registry, which holds a record for every cluster that is known.

- The federation controller, which manages the federated clusters (clusters that exist in the cluster registry are not necessarily federated) and the federated resources.

Federation V2 also has some initial features for creating DNS records for inbound traffic to the set of federated clusters. Inbound traffic routing is out of scope for this post, so we are not going to cover this feature.

Federated Resources

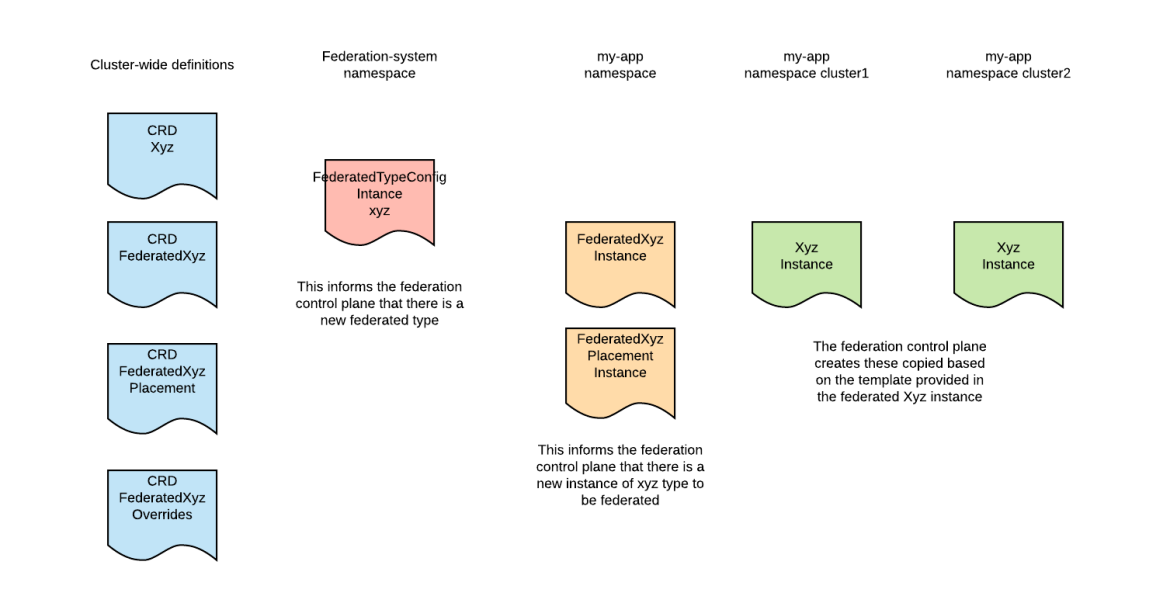

In order to federate a resource, the structure shown in the picture above must be in place:

- A Kubernetes core type or CRD type that we want to federate. Let’s call it the XYZ type in this example.

- A FederatedXYZ CRD: instances of this CRD express the intention to federate (propagate) an XYZ instance.

- A FederatedXYZPlacement CRD: instances of this type describe to which of the federated clusters the resource should be propagated.

- A FederatedXYZOverride CRD: instances of this type can override certain values of the XYZ instance template for specific clusters.

- An instance of the FederatedTypeConfig CRD: this instance ties the previous types together, informing the federation system of a new federated type. It also holds additional settings on how the federation should occur.

Once all of this is in place, if we create an instance of the FederatedXYZ type in a federated namespace, the federation control plane will create the corresponding XYZ instances in the federated clusters.

Federation V2 also supports automatically creating the federated types from an existing type using the CLI. A naming convention (FederatedXYZ, FederatedXYZPlacement, FederatedXYZOverride) is used to avoid name clashes.
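To make the naming convention concrete, here is a minimal Python sketch that derives the companion type names and CRD names for a target kind. It is not the CLI's code: the helper `federated_names` is hypothetical, and its pluralization (lowercase plus "s") is a naive assumption that happens to produce the Certificate names used throughout this post but would not hold for every Kubernetes kind.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FederatedTypeNames:
    """Names of the companion types generated for one target type."""
    template_kind: str
    placement_kind: str
    override_kind: str
    template_crd: str
    placement_crd: str


def federated_names(kind: str, group: str) -> FederatedTypeNames:
    # Hypothetical helper: prefix "Federated" and suffix "Placement"/"Override".
    template = f"Federated{kind}"
    placement = f"{template}Placement"
    override = f"{template}Override"

    def crd_name(type_kind: str) -> str:
        # Naive pluralization (assumption): lowercase kind + "s", then the group.
        return f"{type_kind.lower()}s.{group}"

    return FederatedTypeNames(
        template_kind=template,
        placement_kind=placement,
        override_kind=override,
        template_crd=crd_name(template),
        placement_crd=crd_name(placement),
    )


names = federated_names("Certificate", "certmanager.k8s.io")
print(names.template_crd)   # federatedcertificates.certmanager.k8s.io
print(names.placement_crd)  # federatedcertificateplacements.certmanager.k8s.io
```

Because the generated names always carry the "Federated" prefix, they cannot collide with the target kind itself, which is the point of the convention.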

Adding a custom federated resource

The main Kubernetes core objects (namespaces, configmaps, deployments, ingresses, jobs, replicasets, secrets, services, serviceaccounts) come pre-configured with the Federation V2 installation.

However, in the example app that we are going to deploy below we need an additional type. If you recall from the previous post, we need an instance of the Certificate CRD.

Here is how we can extend federation to propagate this type as well.

The Certificate CRD looks as follows (here and in the YAML snippets below, only the relevant parts are shown):

```yaml
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: certificates.certmanager.k8s.io
spec:
  ...
  group: certmanager.k8s.io
  names:
    kind: Certificate
    listKind: CertificateList
    ...
  scope: Namespaced
  version: v1alpha1
  ...
```

The FederatedCertificate CRD looks as follows:

```yaml
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: federatedcertificates.certmanager.k8s.io
spec:
  group: certmanager.k8s.io
  names:
    kind: FederatedCertificate
    plural: federatedcertificates
  scope: Namespaced
  version: v1alpha1
```

The FederatedCertificatePlacement CRD looks as follows:

```yaml
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: federatedcertificateplacements.certmanager.k8s.io
spec:
  group: certmanager.k8s.io
  names:
    kind: FederatedCertificatePlacement
    plural: federatedcertificateplacements
  scope: Namespaced
  ...
  version: v1alpha1
```

For brevity, we are omitting the override type (FederatedCertificateOverride); you can find it here.

The FederatedTypeConfig instance that ties it all together looks as follows:

```yaml
apiVersion: core.federation.k8s.io/v1alpha1
kind: FederatedTypeConfig
metadata:
  name: certificates.certmanager.k8s.io
  namespace: federation-system
spec:
  target:
    version: v1alpha1
    kind: Certificate
  namespaced: true
  comparisonField: Generation
  propagationEnabled: true
  template:
    group: certmanager.k8s.io
    version: v1alpha1
    kind: FederatedCertificate
  placement:
    kind: FederatedCertificatePlacement
  override:
    kind: FederatedCertificateOverride
  overridePath:
  - spec.dnsNames
```

Notice that all the previous types are referenced, so that the federation control plane knows how to interpret groups of these objects. Also, notice the last line: here we are informing the federation control plane that the only field that makes sense to override on the Certificate object is spec.dnsNames.
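The effect of overridePath can be pictured with a toy Python model. This is not the federation controller's code: `apply_overrides` and `set_path` are hypothetical names, and the per-cluster hostname is a placeholder. The model renders a per-cluster copy of the template and refuses any override whose dotted path (in the same format as `spec.dnsNames` above) is not declared as overridable.

```python
import copy
from typing import Any


def set_path(obj: dict, dotted: str, value: Any) -> None:
    """Set a nested value for a dotted path such as "spec.dnsNames"."""
    keys = dotted.split(".")
    for key in keys[:-1]:
        obj = obj.setdefault(key, {})
    obj[keys[-1]] = value


def apply_overrides(template: dict, overrides: dict, allowed_paths: list) -> dict:
    """Return a per-cluster copy of template with the overrides applied.

    Toy model: an override is accepted only if its path is in allowed_paths,
    which plays the role of overridePath in the FederatedTypeConfig.
    """
    rendered = copy.deepcopy(template)  # never mutate the shared template
    for path, value in overrides.items():
        if path not in allowed_paths:
            raise ValueError(f"path {path!r} is not overridable")
        set_path(rendered, path, value)
    return rendered


template = {"spec": {"secretName": "istio-ingressgateway-certs",
                     "dnsNames": ["bookinfo.example.com"]}}
raffa2 = apply_overrides(template,
                         {"spec.dnsNames": ["raffa2.bookinfo.example.com"]},
                         allowed_paths=["spec.dnsNames"])
print(raffa2["spec"]["dnsNames"])  # ['raffa2.bookinfo.example.com']
```

Restricting overrides to a declared list keeps the per-cluster differences small and explicit: trying to override `spec.secretName` here raises an error instead of silently diverging.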

An instance of the FederatedCertificate CRD looks as follows:

```yaml
apiVersion: certmanager.k8s.io/v1alpha1
kind: FederatedCertificate
metadata:
  name: bookinfo-ingressgateway-crt
spec:
  template:
    spec:
      secretName: istio-ingressgateway-certs
      dnsNames:
      - bookinfo.example.com
      issuerRef:
        name: selfsigning-issuer
        kind: ClusterIssuer
```

If the previous configurations were applied correctly, this definition will trigger the federation control plane to create a Certificate object in all of the federated clusters selected by its placement.
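The propagation step can be summarized with a toy Python model, again not the controller's implementation: for every cluster that is both listed in the placement and actually federated, the template is copied and stamped with the target kind. The function name `propagate` is hypothetical; raffa1 and raffa2 are the cluster names used in the Helm command later in this post, while raffa3 is a hypothetical cluster listed in the placement but not federated.

```python
import copy


def propagate(federated_obj: dict, target_api_version: str, target_kind: str,
              placement_clusters: list, federated_clusters: set) -> dict:
    """Toy model: map each selected cluster to the object created there."""
    rendered = {}
    for cluster in placement_clusters:
        if cluster not in federated_clusters:
            # A placement can name a cluster that is not (or no longer) federated.
            continue
        obj = copy.deepcopy(federated_obj["spec"]["template"])
        obj["apiVersion"] = target_api_version
        obj["kind"] = target_kind
        # Keep the federated object's name (and namespace, if set).
        obj["metadata"] = {key: value for key, value in federated_obj["metadata"].items()
                           if key in ("name", "namespace")}
        rendered[cluster] = obj
    return rendered


federated_certificate = {
    "metadata": {"name": "bookinfo-ingressgateway-crt"},
    "spec": {"template": {"spec": {
        "secretName": "istio-ingressgateway-certs",
        "dnsNames": ["bookinfo.example.com"],
        "issuerRef": {"name": "selfsigning-issuer", "kind": "ClusterIssuer"},
    }}},
}

certs = propagate(federated_certificate, "certmanager.k8s.io/v1alpha1", "Certificate",
                  placement_clusters=["raffa1", "raffa2", "raffa3"],
                  federated_clusters={"raffa1", "raffa2"})
print(sorted(certs))  # ['raffa1', 'raffa2']
```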

Deploying Federation v2

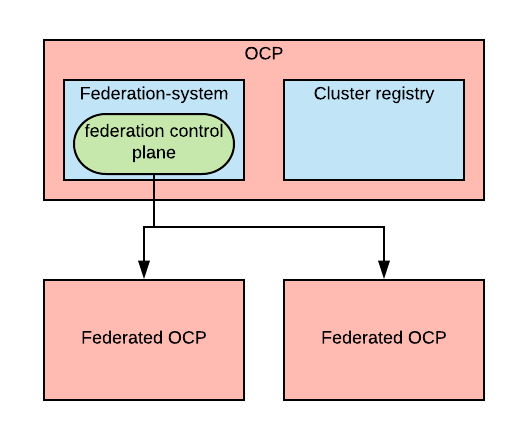

Federation V2 has a relatively simple architecture. It consists of one controller deployed in the federation-system namespace.

In order to register clusters with the federation control plane, the clusters must exist in the cluster registry. The cluster registry consists of a namespace (kube-multicluster-public) and a new Cluster CRD that describes a cluster. To add a cluster to the cluster registry, one can simply create a new Cluster object in the kube-multicluster-public namespace.
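Creating a Cluster entry is easy to script. The Python sketch below builds the Cluster manifest as JSON, which `oc apply -f -` accepts as well as YAML. The field layout follows our understanding of the cluster registry's `clusterregistry.k8s.io/v1alpha1` API, so verify it against the CRD installed in your environment; the cluster name and API server URL are placeholders, the catch-all `clientCIDR` of `0.0.0.0/0` is an assumption, and credentials are deliberately left out.

```python
import json


def cluster_registry_entry(name: str, api_server_url: str) -> dict:
    """Build a Cluster object for the cluster registry (no credentials included)."""
    return {
        "apiVersion": "clusterregistry.k8s.io/v1alpha1",
        "kind": "Cluster",
        "metadata": {
            "name": name,
            # Cluster objects live in the registry namespace.
            "namespace": "kube-multicluster-public",
        },
        "spec": {
            "kubernetesApiEndpoints": {
                "serverEndpoints": [
                    # Assumption: one endpoint, reachable from any client address.
                    {"clientCIDR": "0.0.0.0/0", "serverAddress": api_server_url}
                ]
            }
        },
    }


if __name__ == "__main__":
    # Placeholder values; pipe the output to `oc apply -f -`.
    entry = cluster_registry_entry("raffa1", "https://api.raffa1.example.com:6443")
    print(json.dumps(entry, indent=2))
```

Registering a cluster this way only makes it known to the registry; as noted above, it still has to be federated before it receives any federated resources.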

Once a cluster is known to the cluster registry, it can be federated using the Federation V2 CLI.

You can find an ansible playbook that automates these steps here.

As one can easily deduce from the picture, if the OpenShift (OCP) cluster where the federation controller resides goes down, we lose the federation features. This doesn’t mean that our applications are down; they will keep running, but our ability to operate them will be temporarily hampered.

We have seen this same issue with the istio-multicluster control plane. I think there is a lot to learn in this space in order to build disaster-resilient architectures.

Deploying bookinfo

Once federation is correctly installed, we can finally start deploying applications with it.

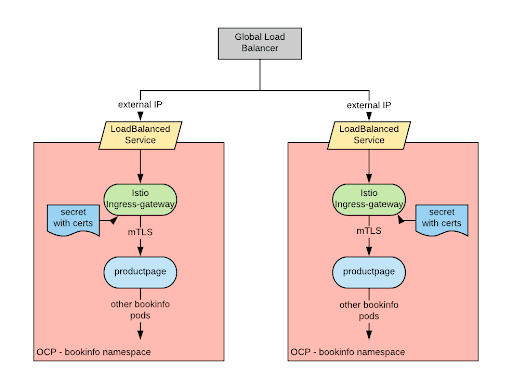

As an example, we are going to deploy the bookinfo app.

We are also going to assume that you have configured Istio multicluster; one way to do it is to follow these instructions.

If you recall from the Istio multicluster post, deploying an application to multiple clusters was relatively complex, and we had to use an Ansible playbook.

Thanks to Federation V2, we can now greatly simplify the deployment process. We are going to use a Helm chart to deploy the federated bookinfo app. This is an example of how it can be deployed with a single command:

```shell
helm template example/bookinfo/charts/federated-bookinfo \
  --set zipkin_address=$ZIPKIN_SVC_IP,statsd_address=$STATSD_SVC_IP,pilot_address=$PILOT_SVC_IP,bookinfo_domain=$bookinfo_domain,cluster_array='[raffa1, raffa2]' \
  --namespace bookinfo \
  | oc --context $FED_CLUSTER apply -f - -n bookinfo
```

For more details, refer here, where you can also find some one-time preparation steps that are needed before you can deploy.

The resulting architecture of the deployed app is exactly the same as in the istio-multicluster post.

Again, for now, we are not addressing the problem of how to create a global load balancer to direct the traffic to the various clusters.

Elevating the level of abstraction of the core Kubernetes objects

If you ran the previous command, you may have noticed that the output looks like the following:

```
destinationrule.networking.istio.io "reviews" created
destinationrule.networking.istio.io "ratings" created
destinationrule.networking.istio.io "details" created
destinationrule.networking.istio.io "productpage" created
policy.authentication.istio.io "mtls-bookinfo" created
gateway.networking.istio.io "bookinfo-gateway" created
virtualservice.networking.istio.io "bookinfo" created
federateddeployment.core.federation.k8s.io "bookinfo-ingressgateway" created
federateddeploymentplacement.core.federation.k8s.io "bookinfo-ingressgateway" created
federatedserviceaccount.core.federation.k8s.io "istio-ingressgateway-service-account" created
federatedserviceaccountplacement.core.federation.k8s.io "istio-ingressgateway-service-account" created
federatedservice.core.federation.k8s.io "bookinfo-ingressgateway" created
federatedserviceplacement.core.federation.k8s.io "bookinfo-ingressgateway" created
```

Note that no core Kubernetes resources are being created; we are only creating CRD instances. Realizing this was an “Aha!” moment for me. I think this is a trend that we are going to see more and more in the future: we are elevating the level of abstraction that we operate at by creating more sophisticated and feature-rich objects instead of the core Kubernetes ones.
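If you want to double-check this observation on your own run, here is a minimal Python sketch that parses `oc apply` output in the format shown above and counts the created objects per API group. The set `CORE_GROUPS` (which groups we treat as "core Kubernetes") is our assumption. Note that `core.federation.k8s.io` is the federation API group, not the Kubernetes core group. Newer clients print `kind.group/name created` instead, which this sketch does not handle.

```python
import re
from collections import Counter

# Matches lines in the output format shown above, e.g.
#   destinationrule.networking.istio.io "reviews" created
# A core-group object prints without a group, e.g.  service "foo" created
LINE_RE = re.compile(
    r'^(?P<resource>[a-z]+)(?:\.(?P<group>[a-z0-9.-]+))? "(?P<name>[^"]+)" created$'
)

# Assumption: the API groups we treat as "core Kubernetes" for this check.
CORE_GROUPS = {"", "apps", "batch", "extensions"}


def created_by_group(apply_output: str) -> Counter:
    """Count the objects created per API group ("" is the core group)."""
    counts = Counter()
    for line in apply_output.splitlines():
        match = LINE_RE.match(line.strip())
        if match:
            counts[match.group("group") or ""] += 1
    return counts


def core_objects_created(apply_output: str) -> bool:
    """True if any created object belongs to one of CORE_GROUPS."""
    return any(group in CORE_GROUPS for group in created_by_group(apply_output))
```

Feeding it the thirteen lines above should report only the `networking.istio.io`, `authentication.istio.io` and `core.federation.k8s.io` groups.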

Through projects and initiatives like Istio, Knative and operators, we are going to move from the very IaaS-oriented Kubernetes objects towards more complex concepts, closer to how developers think about their applications and the processes around them (CI/CD and the like). Federation V2 is part of that mix too, although it elevates the level of abstraction in a different way: it automatically propagates whatever objects you create.

Conclusion

This was just a taste of Federation V2, to demonstrate how it can simplify deploying an application on multiple clusters. I believe that as large customers continue to adopt OpenShift and create ever larger deployments, Federation V2 is going to play a growing role in the multicluster/hybrid cloud story for OpenShift. I am just back from KubeCon NA 2018, and I believe that the community behind the Federation V2 project is strong and that the project is headed in the right direction.

About the author

Raffaele is a full-stack enterprise architect with 20+ years of experience. Raffaele started his career in Italy as a Java Architect then gradually moved to Integration Architect and then Enterprise Architect. Later he moved to the United States to eventually become an OpenShift Architect for Red Hat consulting services, acquiring, in the process, knowledge of the infrastructure side of IT.