The beauty of open source development is in the numerous amazing opportunities for collaboration that occur upstream and the ability to build on each other's work. Last fall I blogged about how Red Hat was working with Google and others to standardize container orchestration and management with Kubernetes in OpenShift v3. In this post, I wanted to cover what’s happened since then and talk about specific areas of Kubernetes that Red Hat has been working on, as we bring this capability to enterprise users.

In the Beginning, There was Borg

Over the past week, Google released details on the lineage of the Kubernetes project, which traces back to Borg, the “predecessor to Kubernetes”. When Kubernetes was launched in 2014, Google discussed how “everything at Google runs in a container” to support their various service offerings and made news when they revealed that they were starting “over 2 billion containers per week”. Borg is what has made this all possible.

Borg is Google’s internal container cluster-management system. It has been referred to as “Google’s secret weapon”, the details of which have been closely held but have served as inspiration for other solutions. At the Eurosys 2015 conference last week, Google released for the first time a detailed academic paper on Borg, a “cluster manager that runs hundreds of thousands of jobs, from many thousands of different applications, across a number of clusters each with up to tens of thousands of machines.”

Many of the Google developers that Red Hat collaborates with today in the Kubernetes community were previously developers on the Borg project. As we work with Google and others to bring Kubernetes to enterprise users, we benefit greatly from their experience as they bring the best ideas from Borg into Kubernetes and learn from its shortcomings.

Google and Red Hat

Google has been a key Red Hat partner as we’ve brought products like Red Hat Enterprise Linux and more to the Google Cloud Platform through our Certified Cloud Provider Program. In early 2014, we decided to reach out to Google to talk about our container strategy and discuss our respective involvement in the Docker open source project. Both Google and Red Hat have a long history of contributing to Linux container development. On the Red Hat side, we introduced support for control groups (cgroups) and kernel namespaces (the underpinnings of Linux containers) in Red Hat Enterprise Linux 6 in November 2010 and have leveraged containers as the deployment model in OpenShift since its inception in 2011. Red Hat works on these technologies in the upstream Linux communities long before they appear in our commercial products. Not surprisingly, Google and Red Hat are among the top contributors to the Docker project.

During those early calls, we were both surprised and extremely excited to learn about Google’s interest in going beyond Docker, to open source their technology for container orchestration and management. This was something that the OpenShift team had already started working on upstream, as we initiated development on OpenShift 3. So the opportunity to collaborate with Google on this was appealing.

When Google ultimately did launch the Kubernetes project, Red Hat was among the first to join. Today, Red Hat is the second leading contributor to Kubernetes after Google, just as we are the second leading contributor to the Docker project after Docker Inc. We were recently recognized as a Google partner of the year for our collaboration in Kubernetes and many other areas.

Building on Kubernetes

Over the past year, Red Hat has contributed substantially to Kubernetes in various areas, as we work to bring the concepts of atomic, immutable infrastructure to enterprise customers in products like OpenShift, Red Hat Enterprise Linux and RHEL Atomic Host. I would like to briefly outline some of that work here and recognize some of the people that are making it possible. We will continue expanding on these topics in future posts, as well as dive into the new application development and lifecycle management features in OpenShift 3 that are enabled by this core container infrastructure.

1. Kubernetes API & User Interfaces

The Kubernetes REST API is a component of the Kubernetes master server instances and controls the Kubernetes cluster manager, which manages the node server instances where application containers run. As OpenShift’s lead architect and a leading contributor to Kubernetes, Clayton Coleman has contributed to the development of the API and additional OpenShift user interfaces that plug into it, along with many others on the Red Hat team. OpenShift 3 users will be able to leverage Web, Command Line and IDE interfaces or call the Kubernetes API directly to interact with the platform. OpenShift users can also leverage remote command execution in a container and port forwarding to pods for debugging, developed by Andy Goldstein.

2. Namespaces

Kubernetes namespaces allow different projects, users and teams to share a Kubernetes cluster. One of the key characteristics of OpenShift has been the ability for multiple application containers, from different users and teams, to run in a shared cluster of Linux container hosts. Red Hat’s Derek Carr and Clayton Coleman worked extensively with Google and other community members to bring this feature to Kubernetes. In OpenShift 3 (currently in Beta), we build on Kubernetes namespaces to implement OpenShift projects, which can be mapped to users and teams to enable collaboration on joint development.

3. Resource Quotas

Related to namespaces is the concept of resource quotas, which Derek has been leading the way on. Quotas limit both the number of objects created in a namespace and the total amount of resources requested by pods in a namespace. Quotas will enable cluster administrators to set and enforce resource limits for namespaces/projects in a shared cluster environment. In OpenShift Online, Red Hat’s Public PaaS offering, we use similar capabilities to give users capacity to run applications in our free and paid tiers. In OpenShift Enterprise, Red Hat’s on-premise and Private PaaS software solution, our customers will be able to use this capability to allocate capacity on their own PaaS and implement internal chargeback and showback models.
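To make the two kinds of limits concrete, here is a minimal sketch of a quota check at pod admission time. It is not the Kubernetes implementation; the `Quota` and `NamespaceUsage` types, their field names, and the `admit_pod` function are all hypothetical and exist only to illustrate counting objects and summing requested resources per namespace.

```python
from dataclasses import dataclass


@dataclass
class Quota:
    """Hypothetical per-namespace limits (illustrative names, not the real API)."""
    max_pods: int
    max_cpu_millicores: int
    max_memory_mib: int


@dataclass
class NamespaceUsage:
    """Running totals for everything already admitted into the namespace."""
    pods: int = 0
    cpu_millicores: int = 0
    memory_mib: int = 0


def admit_pod(quota, usage, cpu_millicores, memory_mib):
    """Return (allowed, reason). Usage is updated only when the pod is admitted."""
    if usage.pods + 1 > quota.max_pods:
        return False, "pod count limit exceeded"
    if usage.cpu_millicores + cpu_millicores > quota.max_cpu_millicores:
        return False, "cpu limit exceeded"
    if usage.memory_mib + memory_mib > quota.max_memory_mib:
        return False, "memory limit exceeded"
    usage.pods += 1
    usage.cpu_millicores += cpu_millicores
    usage.memory_mib += memory_mib
    return True, "admitted"


quota = Quota(max_pods=2, max_cpu_millicores=1000, max_memory_mib=512)
usage = NamespaceUsage()
first = admit_pod(quota, usage, cpu_millicores=600, memory_mib=256)
second = admit_pod(quota, usage, cpu_millicores=600, memory_mib=128)
```

In this sketch the second pod is rejected because the namespace's total requested CPU would exceed the limit, even though the pod count is still within bounds.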

4. Authentication & Authorization

The Kubernetes master uses tokens or client certificates to authenticate users for API calls to the apiserver. Authorization in Kubernetes is a separate step from authentication and compares attributes of the context of the request with access policies. These capabilities are important to provide secure, role-based access to the platform. Red Hat’s Jordan Liggitt and David Eads have done extensive work on authentication and authorization plugins as we build out enterprise capabilities in Kubernetes and integrate them into OpenShift 3. This will enable Red Hat and our customer administrators to configure access and authorization based on their own policies, integrate these capabilities with enterprise authentication and authorization systems, and enable single sign-on with other solutions like enterprise service catalogs.
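The separation between the two steps can be sketched as follows. This is an illustration under stated assumptions, not the apiserver's code: the token table, the `Policy` fields, and the mapping to HTTP-style status codes are hypothetical, chosen only to show authentication resolving an identity first and authorization then comparing request attributes with policies.

```python
from dataclasses import dataclass

# Hypothetical token table; a real cluster validates tokens or client certificates.
TOKENS = {"token-alice": "alice", "token-bob": "bob"}


@dataclass(frozen=True)
class Policy:
    """Illustrative access policy; "*" matches any namespace or resource."""
    user: str
    namespace: str
    resource: str
    readonly: bool


POLICIES = [
    Policy(user="alice", namespace="*", resource="*", readonly=False),
    Policy(user="bob", namespace="dev", resource="pods", readonly=True),
]


def authenticate(token):
    """Step 1: map a credential to a user name, or None if it is unknown."""
    return TOKENS.get(token)


def _matches(pattern, value):
    return pattern == "*" or pattern == value


def authorize(user, namespace, resource, verb):
    """Step 2: compare the attributes of the request with each policy."""
    is_read = verb in ("get", "list", "watch")
    for policy in POLICIES:
        if (policy.user == user
                and _matches(policy.namespace, namespace)
                and _matches(policy.resource, resource)
                and (is_read or not policy.readonly)):
            return True
    return False


def handle_request(token, namespace, resource, verb):
    """Return an HTTP-style status: 401 unauthenticated, 403 forbidden, 200 allowed."""
    user = authenticate(token)
    if user is None:
        return 401
    if not authorize(user, namespace, resource, verb):
        return 403
    return 200
```

Keeping the two functions independent is what lets either one be swapped for a plugin, for example an enterprise identity provider for `authenticate` without touching the policy check.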

5. Networking

Container networking is one of the most exciting areas of innovation in both the Kubernetes and Docker communities. One of the most powerful concepts in Kubernetes is how it implements container networking, which differs from Docker’s defaults. A pod is the unit of scheduling in Kubernetes and each pod can run one or more containers that are deployed as an atomic unit on the same host. Each pod has its own IP address, which simplifies networking of containers across multiple hosts. To leverage this capability, you must set up your cluster networking correctly. The Google Cloud Platform provides advanced networking capabilities that handle all of this for you.

Red Hat has worked with Google and others in the Kubernetes community to enable networking solutions for enterprise data centers, public and private clouds so you can run Kubernetes anywhere. For example, Rajat Chopra and Mrunal Patel developed an Open vSwitch solution for Kubernetes that will be enabled out-of-the-box in OpenShift 3. This was demonstrated in a recent OpenShift Commons briefing on networking and OpenShift 3. Eric Paris, Jeremy Eder and other Red Hatters (Dan Williams, Dan Winship, John Linville, Neil Horman, Thomas Haller and more) have also contributed extensively to our container networking efforts. Red Hat has also collaborated with CoreOS on Flannel overlay networking with Kubernetes, which is now supported in Red Hat Enterprise Linux Atomic Host.
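One way to picture the IP-per-pod model is to carve a cluster-wide address range into one subnet per node and hand each pod an address from its node's subnet. The sketch below uses only Python's standard `ipaddress` module; the cluster range, node names, pod names, and helper functions are assumptions made for illustration and do not describe how any particular networking solution mentioned here is configured.

```python
import ipaddress


def allocate_node_subnets(cluster_cidr, node_names, new_prefix=24):
    """Give each node its own subnet carved out of the cluster-wide range."""
    network = ipaddress.ip_network(cluster_cidr)
    return dict(zip(node_names, network.subnets(new_prefix=new_prefix)))


def assign_pod_ips(subnet, pod_names):
    """Give each pod on a node a distinct address from that node's subnet."""
    return dict(zip(pod_names, (str(host) for host in subnet.hosts())))


# Hypothetical cluster range and names, chosen only for the example.
node_subnets = allocate_node_subnets("10.1.0.0/16", ["node-a", "node-b"])
pod_ips = assign_pod_ips(node_subnets["node-b"], ["web-1", "web-2"])
```

Because every pod address is unique across the cluster, a pod on one node can reach a pod on another node by IP without port mapping, provided the underlying network routes each node subnet to the right host.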

6. Networking Plugins

Red Hat has also worked with the Kubernetes community on implementing a proposal to decouple networking to facilitate multi-tenant networking segmentation and creation of plugins for any desired networking solution. Networking segmentation is critical in a multi-tenant environment to isolate different user applications and different environments (e.g. Dev vs. Prod) in a shared cluster environment. Networking plugins will open up Kubernetes to any third-party networking solution provider. Networking vendors like Cisco, Juniper, Nuage and more have already been active in this area. Red Hat is actively engaging with these and other partners and has also initiated work on Kubernetes networking integration with OpenStack Neutron.

7. Storage Volumes

A pod in Kubernetes specifies storage volumes for mapping persistent storage to containers. This enables users to run stateful services inside their containers. Kubernetes supports multiple types of storage volumes. Mark Turansky, Chris Alfonso, Liang Xia, Steve Watt, Brad Childs, Huamin Chen and others at Red Hat have been working to integrate enterprise storage options like NFS with Kubernetes and this will soon grow to include additional options like iSCSI, Gluster, Ceph, OpenStack Cinder and more. Mark did a recent OpenShift Commons briefing on storage in OpenShift 3 which discussed this as well as the planned interaction model in OpenShift, between storage administrators who set up storage and developers who need to add storage to their applications.
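As a rough sketch of how a pod ties storage to its containers, the example below models a pod definition as a plain Python dictionary and checks that every container mount refers to a volume the pod declares. The `volumes`, `volumeMounts`, `mountPath`, and `nfs` keys follow the Kubernetes pod schema as I understand it; the server name, export path, image, and the `undeclared_mounts` helper are hypothetical.

```python
# Illustrative pod definition; server, path, and image values are made up.
pod = {
    "metadata": {"name": "db"},
    "spec": {
        "volumes": [
            {"name": "data",
             "nfs": {"server": "nfs.example.com", "path": "/exports/db"}},
        ],
        "containers": [
            {
                "name": "postgres",
                "image": "postgres",
                "volumeMounts": [
                    {"name": "data", "mountPath": "/var/lib/postgresql/data"},
                ],
            },
        ],
    },
}


def undeclared_mounts(pod):
    """Return (container, volume) pairs that mount a volume the pod never declares."""
    declared = {volume["name"] for volume in pod["spec"].get("volumes", [])}
    missing = []
    for container in pod["spec"]["containers"]:
        for mount in container.get("volumeMounts", []):
            if mount["name"] not in declared:
                missing.append((container["name"], mount["name"]))
    return missing
```

The split between the pod-level volume (where the storage lives) and the container-level mount (where it appears in the filesystem) is what allows the backing storage type to change without touching the container definition.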

8. Scheduler

The Kubernetes scheduler, which is a component of the Kubernetes master instances, is responsible for scheduling new pods onto selected Kubernetes nodes within a cluster, based on configured policies. Red Hat’s Abhishek Gupta has focused on this area and developed location-aware scheduling capabilities in OpenShift that enable administrators to control pod placement across a set of nodes assigned to specific regions and availability zones. This feature was recently demonstrated in an OpenShift Commons briefing on scheduling pods for high availability. The Kubernetes scheduler is also pluggable, opening the door for integration with third-party cluster management & scheduling solutions like Mesos.
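Location-aware placement can be sketched as a filter step followed by a spreading step. The code below is an assumption-laden illustration rather than the scheduler's actual policy: the node list, the `region` and `zone` labels, and the tie-breaking rule are all hypothetical. It keeps only nodes in the requested region, then prefers the zone currently running the fewest pods of the service.

```python
from collections import Counter

# Hypothetical nodes with region and zone labels.
NODES = [
    {"name": "node-1", "region": "us-east", "zone": "a"},
    {"name": "node-2", "region": "us-east", "zone": "b"},
    {"name": "node-3", "region": "us-west", "zone": "a"},
]


def schedule(pod_region, placements, nodes=NODES):
    """Pick a node name for a new pod, or None if no node is in the region.

    placements maps node name -> number of this service's pods already there.
    """
    # Filter: affinity to the requested region.
    candidates = [n for n in nodes if n["region"] == pod_region]
    if not candidates:
        return None
    # Score: spread across zones by preferring the least-loaded zone.
    zone_load = Counter()
    for node in candidates:
        zone_load[node["zone"]] += placements.get(node["name"], 0)
    best = min(
        candidates,
        key=lambda n: (zone_load[n["zone"]], placements.get(n["name"], 0), n["name"]),
    )
    return best["name"]


placements = {}
chosen = []
for _ in range(3):
    node = schedule("us-east", placements)
    placements[node] = placements.get(node, 0) + 1
    chosen.append(node)
```

With three replicas requested in `us-east`, the pods alternate between the two zones in that region, so losing a single zone leaves at least one replica running.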

9. Routing

The OpenShift routing infrastructure provides external DNS mapping and load balancing to route external requests to Kubernetes pods. This feature, developed by Rajat, Paul Weil, and Ram Ranganathan, allows application end users to access application services deployed across a cluster of Kubernetes nodes. The router leverages information in Kubernetes' etcd to update routes as new pods are created and supports multiple protocols including HTTP, HTTPS, WebSockets, and TLS with SNI. The default OpenShift 3 router is based on HAProxy and itself runs in a pod on the cluster. It can be configured for high availability by running multiple instances of the router pod and fronting them with a balancing tier. The OpenShift router can also be replaced by a customer’s own routing or load balancing infrastructure.
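The idea of a route table that follows pod lifecycle events can be sketched as below. This is not the HAProxy-based router; the `Router` class, its method names, the example hostname and endpoints, and the round-robin balancing choice are all assumptions made to illustrate how requests for a hostname could be spread over whichever pods currently back it.

```python
class Router:
    """Toy route table: hostname -> list of pod endpoints ("ip:port")."""

    def __init__(self):
        self._endpoints = {}
        self._cursors = {}

    def on_pod_added(self, hostname, endpoint):
        """Called when a pod backing the hostname becomes available."""
        endpoints = self._endpoints.setdefault(hostname, [])
        if endpoint not in endpoints:
            endpoints.append(endpoint)

    def on_pod_removed(self, hostname, endpoint):
        """Called when a pod backing the hostname goes away."""
        endpoints = self._endpoints.get(hostname, [])
        if endpoint in endpoints:
            endpoints.remove(endpoint)

    def route(self, hostname):
        """Return the next endpoint for the hostname (round robin), or None."""
        endpoints = self._endpoints.get(hostname)
        if not endpoints:
            return None
        index = self._cursors.get(hostname, 0) % len(endpoints)
        self._cursors[hostname] = index + 1
        return endpoints[index]


router = Router()
router.on_pod_added("app.example.com", "10.1.1.1:8080")
router.on_pod_added("app.example.com", "10.1.2.1:8080")
picks = [router.route("app.example.com") for _ in range(3)]
router.on_pod_removed("app.example.com", "10.1.1.1:8080")
after_removal = router.route("app.example.com")
```

In a real deployment the add and remove events would come from watching cluster state rather than from direct method calls, but the table update and lookup follow the same pattern.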

10. Installation

Any user can get started with Kubernetes by downloading a pre-built binary release or building their own from source. OpenShift also provides a convenient installer that includes Kubernetes and additional OpenShift components. Red Hatters Jason DeTiberus and Jhon Honce have developed an Ansible-based installer for OpenShift installation and configuration and are hoping to engage the community to extend this to Puppet, Chef and more. Eric Paris also contributed a Kubernetes Ansible playbook to build a Kubernetes cluster directly. Users can also build OpenShift from source by accessing the OpenShift Origin GitHub repository and contribute to our next generation application platform.

More Than Containers

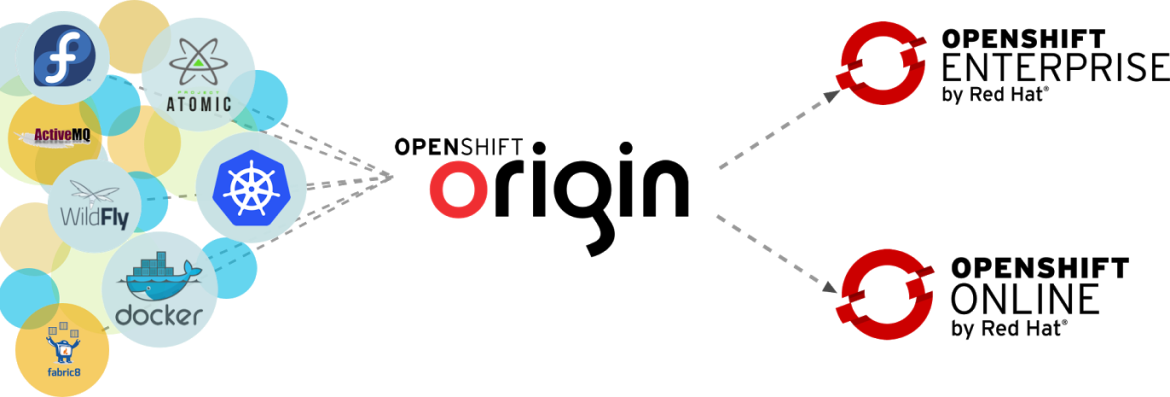

The capabilities described here all build on the Kubernetes project to create a powerful core container infrastructure and also provide the foundation for OpenShift 3. These features would not be possible without contributions from Google and other Kubernetes community members. While this post covered a number of features that Red Hat has focused on and recognized key contributors, there are undoubtedly many features and contributors that I missed. The best way to experience these features is to try them out for yourself, by installing Kubernetes directly or installing OpenShift Origin, which is the upstream for Red Hat’s OpenShift Enterprise and OpenShift Online commercial offerings. We also invite users to join us in the Kubernetes and OpenShift Origin communities as we continue to build out the next great application platform.
